This article introduces serverless computing: why it matters, how to apply the serverless approach to your applications, the key serverless technologies, and the limitations of the approach.

In the 1990s, Neal Ford (now at ThoughtWorks) worked for a small company focused on a technology called Clipper. By writing an object-oriented framework based on Clipper, they built DOS applications using dBase. With their expertise in Clipper, they had a thriving training and consulting business. Then the Clipper-based business disappeared almost overnight with the sudden rise of Windows, and Ford and his team were left scrambling to learn and adopt new technologies. “Ignore the march of technology at your peril” is the lesson that one can learn from this experience.

Many of us live inside technology bubbles: it is easy to get cozy and lose track of what is happening around us. When the bubble bursts, we are left scrambling to find a new job or business. Hence it is important to stay relevant. In the 1990s that meant catching up with things like graphical user interfaces (GUIs), client/server technologies, and later the World Wide Web. Today, relevance is all about being agile and leveraging the cloud, machine learning, artificial intelligence, etc.

With this background, we delve into serverless computing, which is an emerging topic.

Why serverless?

Most of us remember using server machines of one form or another. We remember logging in remotely to server machines and working on them for hours. We had cute names for our servers (like Bailey, Daisy, Charlie, Ginger and Teddy) and took care of them fondly. However, there were many problems in using physical servers like these:

- Companies had to do capacity planning and predict the future resource requirements

- Purchasing servers meant big capital expenses (CAPEX) for companies

- We had to follow lengthy procurement processes to purchase new servers

- We had to patch and maintain the servers

Cloud and virtualisation provided the flexibility that physical servers lacked. We no longer had to follow lengthy procurement processes, or argue about who “owns the server” and why only one team has “exclusive access to that powerful server.” Procurement processes for physical machines became obsolete with virtual machines (VMs) and the cloud. The way we architected systems also changed. For example, instead of ‘scaling up’ by adding more CPUs or memory to physical servers, we started ‘scaling out’ by adding more machines as needed in the cloud. This model gave us the flexibility of an economic model based on operational expenses (OPEX). If any of the VMs went down, we got new VMs spawned in minutes. In short, we started treating servers as ‘cattle’ and not ‘pets.’

However, cloud and virtualisation came with their own problems and still have many limitations. Users still spend a lot of time managing VMs, for example, bringing them up and down based on need. They have to architect for availability and fault tolerance, size workloads, and manage capacity and utilisation. If they have dedicated VMs provisioned in the cloud, they still have to pay for the reserved resources (even when the VMs sit idle). Hence, moving from CAPEX to OPEX is not enough; what users need is to “really pay only for what they are using” and “really pay as they go.” Serverless computing promises to address exactly this problem.

Another key aspect is agility. Businesses today need to be very agile. Technology complexity and infrastructure operations cannot be used as an excuse for not delivering value at scale. Ideally, most of the engineering effort should go into building functionality that delivers the desired experience, and not into monitoring and managing the infrastructure that supports the scale requirements. This is where serverless shines.

What is serverless?

Consider a chatbot for booking movie tickets; let’s call it MovieBot. Any user can query about movies, book tickets or cancel them in a conversational style, in voice or text; for example, “Is Dunkirk playing in Urvashi Theatre in Bangalore tonight?”

This solution requires three elements: a chat interface channel (like Skype or Facebook Messenger), a natural language processing (NLP) component to understand the user’s intent (book a ticket, check ticket availability, cancel a booking, etc), and access to a backend where transactions and data pertaining to movies are stored. The chat interface channels are universal and can be used for different kinds of bots. NLP can be implemented using technologies like Amazon Lex or IBM Watson. The question is: how is the backend served? Would you set up a dedicated server (or a cluster of servers) and an API gateway, deploy load balancers, put in place identity and access control mechanisms, etc? That’s costly and painful, right? That’s where serverless technology can help.

The solution is to set up some compute capacity that runs your logic, written in a language of your choice, against data held in a database. For example, if you are using the AWS platform, you can use DynamoDB for the backend, write the programming logic as Lambda functions, and expose them through Amazon API Gateway.

This entire setup does not require you to provision any infrastructure or have any knowledge about the underlying servers/VMs in the cloud. You can use a database of your choice for the backend. You can choose any programming language supported in AWS Lambda, including Java, Python, JavaScript and C#. There is no cost involved if there are no users of the MovieBot. If a blockbuster like Bahubali is released, there could be a huge surge in users accessing the MovieBot at the same time, and the setup would scale to meet it (you have to pay for the calls, though). Phew! You have essentially engineered a serverless application.
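To make the setup above concrete, here is a minimal sketch of what a MovieBot backend function might look like in Python. The `lambda_handler(event, context)` signature and the `statusCode`/`body` response shape follow the usual convention for Lambda functions behind an API gateway; the `SHOWS` dictionary, its contents and the request fields are hypothetical stand-ins for a real database and a real request format.

```python
import json

# Hypothetical in-memory stand-in for the movie database (DynamoDB in the text).
SHOWS = {
    ("dunkirk", "urvashi theatre", "bangalore"): {
        "playing_tonight": True,
        "seats_left": 42,
    },
}


def lambda_handler(event, context):
    """Answer "is this movie playing tonight?" for a single request.

    Assumes event["body"] is a JSON string with "movie", "theatre" and
    "city" keys; this request format is an illustration, not a standard.
    """
    query = json.loads(event.get("body") or "{}")
    key = tuple(
        str(query.get(field, "")).strip().lower()
        for field in ("movie", "theatre", "city")
    )
    show = SHOWS.get(key)
    if show is None:
        return {"statusCode": 404, "body": json.dumps({"message": "No such show"})}
    return {"statusCode": 200, "body": json.dumps(show)}
```

Note that the function holds no server-specific code at all: it receives an event, does its work and returns a response, which is what lets the platform run as many copies as the load requires.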

Serverless architectures refer to applications that significantly depend on third-party services (known as backend-as-a-service or BaaS) or on custom code that’s run in ephemeral containers (function-as-a-service or FaaS).

1. Backend-as-a-service

Typically, databases (often NoSQL types) hold data and these could be accessed over the cloud. A service can be used to help access that backend. Such a backend service is referred to as BaaS.

2. Function-as-a-service

Code that processes requests (the programming logic written in your favourite programming language) can be run in containers that are spun up and destroyed as needed. This model is known as FaaS.

The word ‘serverless’ is misleading because, read literally, it means there are ‘no servers.’ What it actually means is “I don’t care what a server is.” In other words, serverless lets you build and run applications or services without worrying about provisioning, managing or scaling the underlying infrastructure: just put your code in the cloud and run it! Keep in mind that platform-as-a-service (PaaS) makes a similar promise but does not go as far: although you may not deal with VMs directly with PaaS, you still have to deal with instance sizes and capacity.

Think of serverless as a piece of functionality to run; this functionality is not run on your machine but is executed remotely. Typically, serverless functions are executed in an event-driven fashion: the functions run in response to events or HTTP requests. In the case of the MovieBot, Lambda functions are invoked to serve user queries as and when users interact with it.

Use cases

With the serverless approach, developers can deploy certain types of solutions at scale and cost-effectively. We have already discussed chatbots, which are a classic use case for serverless. Other key use cases for the serverless approach are:

1. 3-tier web applications

Conventional single-page applications (SPAs), which relied on REpresentational State Transfer (REST) based services to perform a given functionality, can be rewritten to leverage serverless functions front-ended by an API gateway. This is a powerful pattern that lets your application scale with demand without you having to configure scale-out or infrastructure resources.

2. Scalable batch jobs

Batch jobs were traditionally run as daemons or background processes on dedicated VMs. More often than not, this approach hit scalability limits and had reliability issues, because developers would leave their critical processes with single points of failure. With the serverless approach, batch jobs can now be redesigned as a chain of mappers and reducers, each running as an independent function. Such mappers and reducers share a common data store, such as blob storage or a queue, and they can scale individually to meet the data processing needs.
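The mapper/reducer chain described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `STORE` dictionary is a hypothetical stand-in for shared blob storage or a queue, and `mapper` and `reducer` are hypothetical names for functions that a platform would invoke independently.

```python
from collections import Counter

# Hypothetical shared store; a real system might use blob storage or a queue.
STORE = {}


def mapper(chunk_id, bookings):
    """Count bookings per movie in one chunk and save a partial result."""
    STORE[f"partial/{chunk_id}"] = dict(Counter(bookings))


def reducer():
    """Merge every partial result in the store into a single total."""
    total = Counter()
    for key, partial in STORE.items():
        if key.startswith("partial/"):
            total.update(partial)  # adds the counts from this partial result
    STORE["result"] = dict(total)
    return STORE["result"]
```

Because each mapper touches only its own chunk and its own key in the store, any number of mappers could run at the same time; the reducer needs nothing from them except the partial results they left behind.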

3. Stream processing

Related to scalable batch jobs is the pattern of ingesting and processing large streams of data for near-real-time processing. Streams from services like Kafka and Kinesis can be processed by serverless functions, which can be scaled seamlessly to reduce latency and increase throughput of the system. This pattern can effectively handle spiky loads as well.
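As a rough sketch of this pattern, the function below processes one batch of stream records. The event shape (a `Records` list whose entries carry base64-encoded data under `kinesis.data`) is modelled on how Kinesis batches are delivered to Lambda functions; the payload fields `movie` and `tickets` and the function name are hypothetical.

```python
import base64
import json


def handle_stream_batch(event, context=None):
    """Decode each record in the batch and total the tickets per movie."""
    totals = {}
    for record in event.get("Records", []):
        # Stream payloads arrive base64-encoded; here they carry JSON.
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        movie = payload["movie"]
        totals[movie] = totals.get(movie, 0) + payload["tickets"]
    return totals
```

The platform decides how many records go into each batch and how many copies of the function run in parallel, which is how the pattern absorbs spiky loads.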

4. Automation/event-driven processing

Perhaps the first application of serverless computing was automation. Functions can be written to respond to certain alerts or events. They can also be scheduled to run periodically, extending the capabilities that the cloud service provider offers out of the box.

The kinds of applications best suited to serverless architectures include mobile back-ends, data processing systems (real-time and batch) and web applications. In general, a serverless architecture is suitable for any distributed system that reacts to events or processes workloads dynamically based on demand. For example, serverless is suitable for processing events from Internet of Things (IoT) devices, processing large datasets (in Big Data) and building intelligent systems that respond to queries (chatbots).

Serverless technologies

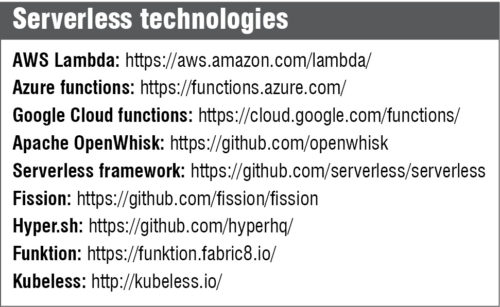

There are many proprietary and a few open source serverless technologies and platforms to choose from.

AWS Lambda is the earliest (announced in late 2014 and released in 2015) and the most popular serverless technology. Microsoft’s Azure Functions supports a wider variety of languages and integrates with the company’s Azure services. Google’s Cloud Functions is in beta at the time of writing. One of the key open source players in serverless technologies is Apache OpenWhisk, backed by IBM and Adobe. It is often tedious to develop applications directly on the AWS, Azure, Google and OpenWhisk platforms. Serverless Framework is a popular solution that aims to ease application development on these platforms.

Many solutions (especially open source) focus on abstracting away the details of container technologies like Docker and Kubernetes. Hyper.sh provides a container hosting service wherein you can use Docker images directly in serverless style. Kubeless from Bitnami, Fission from Platform9 and funktion from Fabric8 are serverless frameworks that provide an abstraction over Kubernetes.

Given that serverless is an emerging approach, the technologies are still evolving. You can expect a lot of action in this space in the years to come.

Challenges in going serverless

Although a few large businesses are already powered entirely by serverless technologies, keep in mind that serverless is an emerging approach. There are many challenges to be dealt with when developing serverless solutions. Let us discuss the key challenges in the context of the MovieBot example discussed earlier:

Debugging

Unlike typical application development, there is no concept of a local environment for serverless functions. Even fundamental debugging operations like stepping through code, setting breakpoints, stepping over calls and setting watchpoints are not available with serverless functions. As of now, users need to rely on extensive logging and instrumentation for debugging.

When MovieBot provides an inconsistent response or does not understand the user’s intent, debugging the MovieBot code (which is running remotely) requires users to log numerous details like NLP scores, dialogue responses and query results of the movie ticket database. Then they have to manually analyse and do detective work to find out what could have gone wrong.
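The kind of logging described above is easier to analyse when every bot turn is written as one structured line. The following is a minimal sketch using Python’s standard `logging` and `json` modules; the function name `log_turn` and its field names are hypothetical and only illustrate the details the text mentions (NLP scores, dialogue responses and query results).

```python
import json
import logging

logger = logging.getLogger("moviebot")


def log_turn(request_id, utterance, intent, nlp_score, query_result):
    """Emit one JSON log line describing a single bot turn and return it."""
    line = json.dumps(
        {
            "request_id": request_id,    # lets you group lines from one call
            "utterance": utterance,      # what the user said
            "intent": intent,            # what the NLP layer understood
            "nlp_score": nlp_score,      # how confident the NLP layer was
            "query_result": query_result,  # what the database returned
        },
        sort_keys=True,
    )
    logger.info(line)
    return line
```

With lines like these, the detective work becomes a matter of filtering logs by `request_id` or by low `nlp_score` rather than reading free-form text.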

State management

Although serverless is inherently stateless, real-world applications invariably have to deal with state. Orchestrating a set of serverless functions becomes a significant challenge when a common context has to be passed between them.

Any chatbot conversation represents a dialogue. It is important for the program to understand the entire conversation. For example, for the query “Is Dunkirk playing in Urvashi Theatre in Bangalore tonight?” if the answer from MovieBot is ‘yes,’ the next query from the user could be “Are two tickets available?” If MovieBot confirms, the user could say “Okay, book it.”

For this transaction to work, MovieBot should remember the entire dialogue, which includes the name of the movie, the theatre, the city and the number of tickets to book. The dialogue is carried out as a sequence of stateless function calls, so this state has to be persisted somewhere for the final transaction to succeed. Maintaining this state outside the functions is a tedious task.
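A minimal sketch of keeping dialogue state outside the functions might look like the following. The `SESSIONS` dictionary is a hypothetical stand-in for an external store such as a database table keyed by session ID, and `handle_turn`, `REQUIRED` and the slot names are illustrative assumptions.

```python
# Hypothetical external session store; a dict stands in for a database table.
SESSIONS = {}

# Details the bot needs before it can book (names are illustrative).
REQUIRED = ("movie", "theatre", "city", "tickets")


def handle_turn(session_id, slots):
    """Merge newly understood details into the saved dialogue state."""
    state = {**SESSIONS.get(session_id, {}), **slots}
    SESSIONS[session_id] = state  # persist so the next call can see it
    missing = [name for name in REQUIRED if name not in state]
    return {"state": state, "ready_to_book": not missing, "missing": missing}
```

Each call loads the state, updates it and writes it back, so no single function invocation has to remember anything; the price is one extra read and write per turn, which is the tedium the text refers to.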

Vendor lock-in

Although isolated functions are to be executed independently, users are in practice tied to the software development kit (SDK) and services provided by the serverless technology platform. This could result in vendor lock-in because it is difficult to migrate to other equivalent platforms.

Assume that you implement the MovieBot on the AWS Lambda platform using Python. Though the core logic of the bot is written as Lambda functions, you need to use other related services from the AWS platform, such as Amazon Lex (for NLP), Amazon API Gateway and DynamoDB (for data persistence), for the chatbot to work. Further, the bot code may need to use the AWS SDK for Python (boto3) to consume services such as S3 or DynamoDB.

In other words, for the bot to be a reality, it needs to consume many services from the AWS platform rather than just the Lambda function code written in plain Python. This results in vendor lock-in because it is harder to migrate the bot to other platforms.
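The distinction between plain Python logic and platform service calls can be sketched as follows. This is an illustration only, not a description of how the MovieBot is built: `ShowStore`, `InMemoryShowStore` and `can_book` are hypothetical names, and the in-memory class stands in for a platform-specific one (for example, a class that talks to DynamoDB through boto3).

```python
from typing import Optional, Protocol


class ShowStore(Protocol):
    """What the core logic needs from any data store, whatever the vendor."""

    def seats_left(self, movie: str) -> Optional[int]: ...


class InMemoryShowStore:
    """Stand-in for a platform-specific store; all vendor calls would live here."""

    def __init__(self, seats):
        self._seats = dict(seats)

    def seats_left(self, movie):
        return self._seats.get(movie)


def can_book(store: ShowStore, movie: str, tickets: int) -> bool:
    """Plain-Python core logic: knows nothing about the vendor behind `store`."""
    seats = store.seats_left(movie)
    return seats is not None and seats >= tickets
```

Only `can_book` is portable as written. Everything that would sit behind `seats_left` in a real deployment, along with the NLP service and the API gateway configuration, is where the ties to one platform accumulate.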

Other challenges

The code for a serverless function typically has third-party library dependencies. When deploying the function, you need to deploy these dependency packages as well, which increases the deployment package size. Because containers are used underneath to execute serverless functions, a larger deployment package increases the time it takes to start up and execute a function. Further, maintaining all the dependent packages, versioning them, etc is a practical challenge as well.

Another challenge is serverless platforms’ lack of support for some widely used languages. For instance, as of May 2017, you could write functions in C#, Node.js (4.3 and 6.10), Python (2.7 and 3.6) and Java 8 on AWS Lambda. How about other languages like Go, PHP, Ruby, Groovy, Rust or any other language of your choice? Though there are ways to write serverless functions in these languages and execute them, it is harder to do so. Since serverless technologies are maturing and adding support for a wider range of languages, this challenge will gradually disappear with time.

Serverless: A game-changer

Serverless changes the way you look at how applications are composed, written, deployed and scaled. If you want significant agility in creating highly scalable applications while ensuring cost-effectiveness, serverless is what you need. Businesses across the world are already providing highly compelling solutions using serverless technologies. Serverless has a wide range of applications, from chatbots to real-time processing of streams from IoT devices. So it is not a question of ‘if,’ but ‘when’ you will adopt the serverless approach for your business.