The Pokémon Go craze may have tapered off, but key takeaways remain: Users downloaded the mobile app more than 500 million times, and until the craze abated, hordes of fans flocked to malls, memorials, and even cemeteries trying to capture a rare virtual pocket monster or accrue points to progress in the game.

What can we learn here? That Augmented Reality (AR) engages users and enables them to see and do what they couldn’t before. The social game, which blended physical and virtual worlds, propelled AR to the forefront of technologies with the potential to transform industries. What’s more, we can draw on how industries such as medicine have applied AR to ease procedures and educate practitioners.

AR In Medical Applications

Vein Visualization

Venipuncture, the technique of puncturing a vein to draw blood or deliver an intravenous injection, is one of the most common medical procedures. Some patients, though, present extra challenges, including the elderly, burn victims, drug abusers, and patients undergoing chemotherapy. Of the three million procedures performed daily in the U.S., an estimated 30 percent, or roughly 900,000, require multiple attempts to find a suitable vein.

Augmented Reality can help. AccuVein, a company based in Huntington, NY, uses noninvasive infrared (IR) technology to scan the target site and display the underlying vein structure. Because the hemoglobin in blood absorbs more infrared light than the surrounding tissue, the resulting image (Figure 1) shows the veins as a web of black lines on a background of red.

Figure 1: The rechargeable AV400 AR scanner weighs less than 10 ounces and displays the veins underneath the skin. (Image source: AccuVein)
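The contrast principle described above can be illustrated with a minimal sketch. It is not AccuVein's algorithm, which the article does not describe; it simply assumes that pixels reflecting noticeably less light than the image average are likely to lie over a vein. The function names, the 15 percent `drop` margin, and the sample intensity values are all hypothetical.

```python
# Illustrative sketch only: a simple mean-relative threshold, NOT AccuVein's method.

def highlight_veins(reflectance, drop=0.15):
    """Return a boolean mask; True marks pixels darker than (1 - drop) * image mean.

    reflectance: 2-D list of reflected-light intensities (0 = dark, 1 = bright).
    drop: hypothetical margin below the mean that counts as "absorbing".
    """
    pixels = [value for row in reflectance for value in row]
    mean = sum(pixels) / len(pixels)
    threshold = mean * (1.0 - drop)
    return [[value < threshold for value in row] for row in reflectance]


def render(mask):
    """Draw the mask as text: '#' for suspected vein pixels, '.' for tissue."""
    return "\n".join("".join("#" if hit else "." for hit in row) for row in mask)


# Hypothetical 4x6 patch of skin; the low values trace a branching dark line.
patch = [
    [0.82, 0.80, 0.45, 0.81, 0.83, 0.80],
    [0.81, 0.79, 0.44, 0.80, 0.82, 0.81],
    [0.80, 0.46, 0.43, 0.47, 0.81, 0.80],
    [0.47, 0.80, 0.82, 0.81, 0.46, 0.79],
]

mask = highlight_veins(patch)
print(render(mask))
```

A real scanner would also have to handle uneven illumination, skin tone, and motion, so a fixed global threshold like this one should be read only as a way to picture why absorbing regions show up as dark lines.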

AR vein illumination can increase the first-stick success rate by up to 3.5 times, which leads to increased patient satisfaction, reduced pain, reduced workload, and reduced cost. In a surgical application, vein illumination can help the surgeon to identify the optimal incision site, which reduces bleeding and lowers costs.

Surgical Navigation

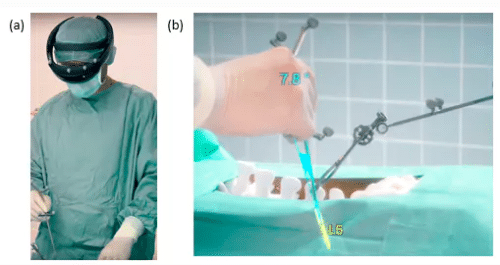

For surgeons, AR offers a hands-free and seamless way to access digital information while performing a delicate operation. German technology supplier Scopis has just introduced an application that combines Microsoft’s HoloLens head-mounted display with a surgical navigation system to help surgeons performing spine surgery (Figure 2). The platform provides a hands-free display and a holographic overlay that indicates exactly where the surgeon should operate.

Figure 2: The Scopis surgical navigation tool: (a) Surgeon headset; (b) AR image. (Source: Scopis)

The next stage of development will be to combine data from multiple sources such as MRI (Magnetic Resonance Imaging) or PET-CT (Positron Emission Tomography-Computed Tomography) into a fused AR image that can provide the surgeon with customized information for each procedure.

Medical AR Applications In Development

Medical Education

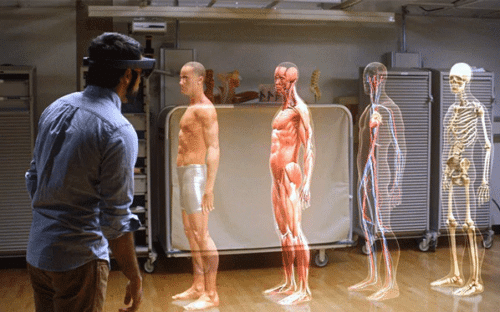

Beginning in 2019, Case Western Reserve University will be teaching anatomy to future doctors without the use of cadavers. Instead, medical students will use head-mounted displays to view an AR representation of a human body (Figure 3).

Figure 3: An AR headset enables anatomy students to examine a virtual human body and navigate through successive layers of skin, muscle and organs. (Source: Microsoft)

The technology adds a vital element missing from earlier attempts to teach the subject using large touch screens. Users can now walk around a 3-D image of the body’s skeleton, organs, and veins and view the display from any orientation.

The next stage of development will allow users to interact with the image in real time, for example by rotating the body or “moving” an organ to examine the underlying arteries.

Enabling Vision

Oxford (UK) start-up Oxsight is testing AR glasses to help visually impaired patients recognize objects and move around their environment. The smart glasses detect light, movement, and shapes, and then display sensor data in a way that helps the user make the most of his or her remaining vision. Each person’s needs are different, so the display can be adjusted to customize the view. For example, the display can render a person as a simplified, cardboard-cutout-style outline, boost certain colors, or zoom in or out.

These are only a couple of examples. Many other medical AR applications are in the “proof-of-concept” stage, including live-streaming of patient visits with remote transcription services; remote consultation during surgical procedures; and assistive learning for children with autism.

Building Blocks For AR Systems

What are the building blocks for AR design? Many AR applications are still on the drawing board, but existing wearable and portable medical devices already incorporate many of the core hardware technologies, with Microchip Technology at the forefront. The block diagram for Microchip’s wearable home health monitor design (Figure 4), for example, includes a powerful processor with analog functions, sensor fusion capability, low power operation, and cloud connectivity.

Figure 4: A high-end wearable home health monitor includes many of the blocks needed for an AR application. (Source: Microchip Technology)

Similarly, the XLP (eXtreme Low Power) family of PIC microcontrollers is designed to maximize battery life in wearable and portable applications. XLP devices feature low-power sleep modes with current consumption down to 9 nA and a wide choice of peripherals. The PIC32MK1024GPD064, for example, is a mixed-signal 32-bit microcontroller that runs at 120 MHz, with a double-precision floating-point unit and 1 MB of program memory. Signal conditioning peripheral blocks include four operational amplifiers (op-amps), 26 channels of 12-bit Analog-to-Digital Conversion (ADC), and three Digital-to-Analog Converters (DACs), alongside numerous connectivity options.
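To put two of these figures in perspective, the short calculation below works out the voltage step of a 12-bit ADC and how long a battery would last if the only load were a 9 nA sleep current. The 3.3 V reference and the 225 mAh battery capacity are assumptions chosen for illustration and do not come from the article or a datasheet; 9 nA is the family's best-case figure quoted above, not necessarily that of any one device.

```python
# Back-of-the-envelope figures; VREF_VOLTS and BATTERY_MAH are assumed values.

ADC_BITS = 12              # from the text: 12-bit ADC
SLEEP_CURRENT_A = 9e-9     # from the text: sleep current down to 9 nA
VREF_VOLTS = 3.3           # assumption: ADC reference voltage
BATTERY_MAH = 225.0        # assumption: small coin-cell-class battery

HOURS_PER_YEAR = 24 * 365


def adc_step_volts(bits, vref):
    """Smallest voltage change an ideal ADC of this resolution can resolve."""
    return vref / (2 ** bits)


def sleep_hours(capacity_mah, current_a):
    """Hours to drain the battery if the sleep current were the only load."""
    capacity_ah = capacity_mah / 1000.0
    return capacity_ah / current_a


lsb = adc_step_volts(ADC_BITS, VREF_VOLTS)
hours = sleep_hours(BATTERY_MAH, SLEEP_CURRENT_A)

print(f"ADC levels: {2 ** ADC_BITS}")
print(f"ADC step:   {lsb * 1e3:.3f} mV")
print(f"Sleep-only drain time: about {hours / HOURS_PER_YEAR:,.0f} years")
```

The sleep-only result runs to thousands of years, far longer than any battery's shelf life, which is the point: at nanoamp sleep currents, battery life in a real design is set by how often and how long the device wakes up, and by the battery's own self-discharge, rather than by the sleep mode itself.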

Microchip also offers a sensor fusion hub, as well as several wireless connectivity options including Bluetooth and Wi-Fi modules. Combined with third-party optics and other blocks, these components can form the basis of a low-cost AR solution.

Finally, the Microsoft HoloLens core combines a 32-bit processor, a sensor fusion processor, and a high-definition optical projection system. Other key components include wireless connectivity, a camera and audio interface, power management, and cloud-based data analytics.

Conclusion

AR technologies have already demonstrated their value in medical applications and promise to bring big changes over the next few years to both the clinic and the operating room. Although the optics add a new dimension, many of the hardware building blocks have already been proven in high-volume wearable and portable products.