Infineon Technologies AG has discussed how it is addressing the essential requirements for greener data centers in an online media briefing.

Infineon Technologies AG held an online media briefing on advancements in AI on June 4, 2024. During the briefing, Athar Zaidi, Senior Vice President and Business Line Head of Power ICs and Connectivity Systems at Infineon, described AI as a transformative technology, noting that the computing power needed to train cutting-edge AI models doubles every 3.4 months. Zaidi also pointed to the rapid adoption of ChatGPT, which he said reached 100 million users in just 5 days, and stated that 77% of the global population is already using AI technology.
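To give a sense of scale, the 3.4-month doubling period quoted above can be converted into a yearly growth factor. The snippet below is a minimal sketch that assumes steady exponential growth; the function name `growth_factor` is hypothetical and used only for illustration.

```python
# Yearly growth implied by a fixed doubling period.
# Assumption: compute grows steadily and exponentially; 3.4 months is the figure quoted in the briefing.

def growth_factor(months: float, doubling_months: float = 3.4) -> float:
    """Return how many times compute grows over `months` if it doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


if __name__ == "__main__":
    yearly = growth_factor(12)
    print(f"Growth over 12 months: about {yearly:.1f}x")
```

Under that assumption, a 3.4-month doubling period works out to roughly an 11.5-fold increase in training compute per year.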

The rapid growth of global data and the rising electricity requirements of AI-enabled systems have created demand for energy-efficient solutions. Each new generation of processors draws more power than the last, so the electricity demand of individual processors keeps climbing. Meeting this escalating demand for power is a significant challenge.

As a result, energy efficiency has become a pressing concern for data centers. Data centers currently account for 2% of global electricity consumption, a share expected to reach 7% by the end of the decade, equivalent to the electricity usage of India. Zaidi emphasized the impact of generative AI on electricity demand, citing more than 1,000 MWh of energy required to train GPT-3. Because newer chip technologies keep raising energy requirements, power management has to be treated as a multi-dimensional challenge: as computing capabilities grow, power must be consumed efficiently to ease the strain on the grid.
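As a rough illustration, the move from a 2% to a 7% share can be expressed as an implied annual growth rate. This is a sketch under hypothetical assumptions: "currently" is taken as 2024 and "the end of the decade" as 2030, and the helper `implied_cagr` is illustrative only. It describes growth of the data center share, not of absolute consumption, which also depends on how total electricity demand changes.

```python
# Implied compound annual growth of data centers' share of global electricity.
# Hypothetical assumptions: "currently" = 2024, "end of the decade" = 2030.

def implied_cagr(start_share: float, end_share: float, years: int) -> float:
    """Compound annual growth rate that takes start_share to end_share over `years` years."""
    return (end_share / start_share) ** (1 / years) - 1


if __name__ == "__main__":
    rate = implied_cagr(0.02, 0.07, 2030 - 2024)
    print(f"Share grows about {rate:.0%} per year")
```

With those assumptions, the share would need to grow by roughly 23% a year.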

Infineon says that by improving the efficiency of powering AI data centers, it can address several critical challenges at once:

- Drain on the grid. Data centers are projected to account for 7% of global final electricity demand by 2030, which could strain grids, particularly in major data center hubs such as the US.

- Carbon footprint. Running AI servers is energy-intensive and adds significantly to data centers' carbon footprint.

- Water consumption. Approximately half of the energy consumed by data centers goes to cooling, and the most prevalent cooling systems rely on chilled water or traditional air conditioning.

- E-waste. E-waste from AI servers contains hazardous substances such as lead and cadmium, which can harm the environment.

Zaidi highlighted Infineon's commitment to powering AI from the grid to the core. The average Infineon bill of materials (BOM) per AI server ranges from 850 to 1,800 USD, and each server rack accommodates four AI servers. Infineon's focus is on innovating in how AI is powered: rearchitecting power delivery from the grid to the core through 48 V systems and vertical power delivery, and designing efficient power supplies based on both silicon and wide-bandgap technology. Advanced packaging for density and cooling, together with smart control and software, completes the company's approach to powering AI.
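Two pieces of arithmetic sit behind this paragraph: the per-rack BOM implied by the quoted figures, and why raising the distribution voltage to 48 V helps. The sketch below assumes simple resistive (I²R) conduction losses; the 10 kW load, the 1 milliohm path resistance and the 12 V baseline are hypothetical values chosen for illustration, not Infineon data, and the function names are likewise hypothetical.

```python
# Back-of-envelope figures for the power-delivery paragraph.
# From the briefing: BOM of 850 to 1,800 USD per AI server, four AI servers per rack.
# Hypothetical: the 12 V baseline, 10 kW load and 1 milliohm path resistance.
from typing import Tuple

SERVERS_PER_RACK = 4
BOM_PER_SERVER_USD = (850, 1800)


def rack_bom_range(servers: int = SERVERS_PER_RACK) -> Tuple[int, int]:
    """Infineon content per rack, given the quoted per-server BOM range."""
    low, high = BOM_PER_SERVER_USD
    return servers * low, servers * high


def conduction_loss_w(power_w: float, bus_voltage_v: float, resistance_ohm: float) -> float:
    """I^2 * R loss in a path delivering power_w at bus_voltage_v (purely resistive model)."""
    current_a = power_w / bus_voltage_v
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    print("Per-rack BOM (USD):", rack_bom_range())
    loss_12v = conduction_loss_w(10_000, 12, 0.001)
    loss_48v = conduction_loss_w(10_000, 48, 0.001)
    print(f"12 V: {loss_12v:.0f} W, 48 V: {loss_48v:.0f} W, ratio {loss_12v / loss_48v:.0f}x")
```

The quoted BOM range corresponds to 3,400 to 7,200 USD per four-server rack. For the same power and resistance, quadrupling the bus voltage cuts the current by a factor of four and the I²R loss by a factor of sixteen, which is the basic reason higher-voltage distribution and shorter power paths reduce losses.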

The implications at the product level are significant. Infineon power modules are integrated into the AI accelerator card to power the XPU, enabling vertical power delivery. This integration increases power density in a smaller form factor, allowing a further boost in compute power. It also reduces power losses by more than 7 MW for an average data center with 100,000 CPU nodes, which translates into total cost of ownership savings of over 12% compared with lateral power delivery networks.
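To put the 7 MW figure in perspective, it can be broken down per node and converted into annual energy. This is a minimal sketch that assumes, hypothetically, that the saving applies continuously around the clock for a 365-day year; the function names are illustrative only.

```python
# Scale of the quoted saving: over 7 MW of avoided losses in a data center with 100,000 CPU nodes.
# Hypothetical assumption: the saving applies 24 hours a day, 365 days a year.

HOURS_PER_YEAR = 24 * 365


def per_node_saving_w(total_saving_mw: float, nodes: int) -> float:
    """Average avoided loss per node, in watts."""
    return total_saving_mw * 1e6 / nodes


def annual_energy_mwh(saving_mw: float, utilization: float = 1.0) -> float:
    """Energy saved per year in MWh; `utilization` scales for less-than-continuous operation."""
    return saving_mw * HOURS_PER_YEAR * utilization


if __name__ == "__main__":
    print(f"Per node: {per_node_saving_w(7, 100_000):.0f} W")
    print(f"Per year: {annual_energy_mwh(7):,.0f} MWh")
```

Under these assumptions, 7 MW across 100,000 nodes is about 70 W per node, or roughly 61,000 MWh per year, around 61 times the 1,000 MWh cited earlier for training GPT-3.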

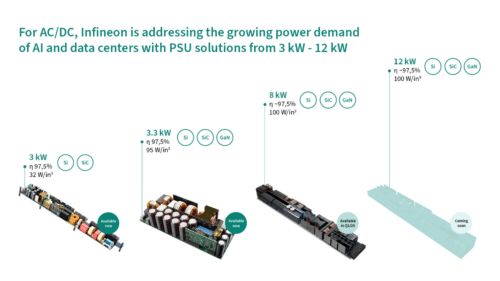

Zaidi said Infineon is addressing the increasing power demand of AI with its AC/DC power supply unit (PSU) solutions, which range from 3 kW to 12 kW. AI promises to be a major revenue driver for Infineon's server business and also serves as a crucial lever for decarbonization. Zaidi emphasized the company's clear potential for differentiation and its commitment to system innovation through partnerships with leading companies. With what it describes as industry-leading expertise in system innovation, Infineon aims to achieve best-in-class efficiency and the lowest cost of ownership by FY24. The company also expects AI server revenue to reach a low triple-digit million amount, and estimates that using Infineon products in AI servers could save 22 million metric tons of CO2.

Zaidi also presented the 8 mm and 5 mm modules first announced in April, which are now in production. He summed up the briefing by stating, "We are empowering a greener AI, shaping the future with our solutions." AI represents the next technological revolution and is being adopted at a remarkable pace. Behind its brilliance, however, lies an energy-intensive process with a substantial carbon footprint.