Researchers mimic sleep patterns of the human brain in artificial neural networks and record a boost in their utility.

The human body needs 7 to 13 hours of sleep, depending on age. Many biological processes happen during sleep: the brain stores new information and gets rid of toxic waste. Nerve cells communicate and reorganize, which supports healthy brain function. The body repairs cells, restores energy, and releases molecules like hormones and proteins.

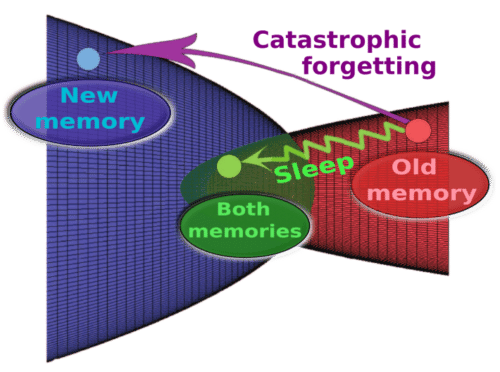

Artificial neural networks leverage the architecture of the human brain to improve numerous technologies and systems, from basic science and medicine to finance and social media. In some respects, such as computational speed, they have achieved superhuman performance. Unlike the human brain, however, an artificial neural network tends to overwrite previous information as it learns something new, a problem called catastrophic forgetting.

But a recent study shows how biological models may help mitigate the threat of catastrophic forgetting in artificial neural networks, boosting their utility across a spectrum of research interests.

The scientists used spiking neural networks that artificially mimic natural neural systems: Instead of information being communicated continuously, it is transmitted as discrete events (spikes) at certain time points.
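To make the idea of spikes concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking unit. It is an illustration only, not the model used in the study; the function name `simulate_lif` and the values of `threshold`, `leak`, and `reset` are assumptions chosen for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    All parameter values are illustrative assumptions, not taken from the study.
    """
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential leaks a little, then integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # information leaves the neuron as a discrete event
            potential = reset      # the potential resets after each spike
    return spike_times


# A constant input produces output only at isolated time points.
print(simulate_lif([0.3] * 20))  # → [3, 7, 11, 15, 19]
```

The neuron receives input at every step but emits output only when its potential crosses the threshold, which is the sense in which communication is event-based rather than continuous.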

Results showed that when these spiking networks were trained on a new task and given occasional off-line periods that mimicked sleep, catastrophic forgetting was mitigated. As in the human brain, “sleep” allowed the networks to replay old memories without explicitly using old training data.

“When we learn new information,” said Bazhenov, “neurons fire in specific order and this increases synapses between them. During sleep, the spiking patterns learned during our awake state are repeated spontaneously. It’s called reactivation or replay.

“The capacity of synapses to be altered or molded, synaptic plasticity, is still in place during sleep and it can further enhance synaptic weight patterns that represent the memory, helping to prevent forgetting or to enable transfer of knowledge from old to new tasks.”
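The mechanism Bazhenov describes can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not the researchers' network: a plain Hebbian rule stands in for synaptic plasticity, and the names `hebbian_update`, `awake_training`, and `sleep_replay`, along with all learning rates, are hypothetical.

```python
import random


def hebbian_update(weights, pre, post, rate=0.1):
    """Strengthen the synapse pre -> post (simple Hebbian rule, capped at 1.0)."""
    weights[pre][post] = min(1.0, weights[pre][post] + rate)


def awake_training(weights, sequence, repeats=3):
    """Neurons fire in a fixed order; each consecutive pair strengthens its synapse."""
    for _ in range(repeats):
        for pre, post in zip(sequence, sequence[1:]):
            hebbian_update(weights, pre, post)


def sleep_replay(weights, steps=20, seed=0):
    """Spontaneous activity with no training data supplied.

    A randomly chosen neuron fires and activity follows its strongest
    existing synapse. Plasticity stays on, so replayed synapses grow.
    """
    rng = random.Random(seed)
    n = len(weights)
    for _ in range(steps):
        pre = rng.randrange(n)
        post = max(range(n), key=lambda j: weights[pre][j])
        if weights[pre][post] > 0.0:  # only previously learned synapses replay
            hebbian_update(weights, pre, post, rate=0.05)


n_neurons = 4
path = [0, 1, 2, 3]
weights = [[0.0] * n_neurons for _ in range(n_neurons)]

awake_training(weights, path)
before_sleep = sum(weights[a][b] for a, b in zip(path, path[1:]))

sleep_replay(weights)  # note: the training sequence is not passed in
after_sleep = sum(weights[a][b] for a, b in zip(path, path[1:]))

print(before_sleep, after_sleep)
```

In this toy setting the learned path is reinforced during the sleep phase even though `sleep_replay` never sees the original sequence, while synapses that were never trained stay at zero.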