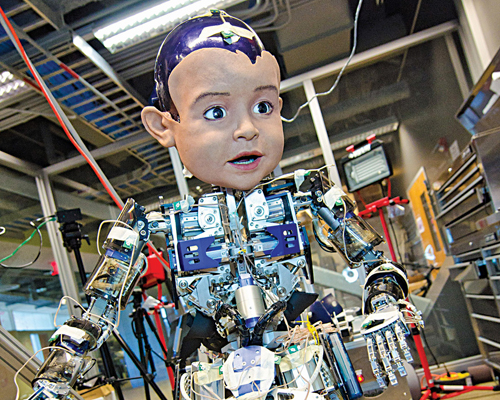

Shape-shifting robots, 3D-printed robots, and robots with facial recognition and social skills are all evolving in robotics labs across the world, but something even more interesting is brewing! Scientists are now working to develop robots that can learn to do things and react to situations the way humans do. Just as a baby learns by observing, assimilating, experimenting and adapting, the robots of the future may be taught, or may even learn naturally as we do, without having to be programmed for every task. Here, we look at some of this research and a few early examples.

Hi Jimmy!

(Courtesy: Intel)

All you need to do is 3D print some parts, fit in the non-printable parts, including an Intel Edison processor, and voilà, your robot buddy is ready! According to Intel, you do not need a PhD to program Jimmy, its open source robot, which will go on sale by the end of this year.

At its launch, Jimmy walked on stage, introduced himself, danced and then sat down to watch the rest of the proceedings. A short trip to Intel’s website shows Jimmy solving a Rubik’s cube, cheering for his favourite team at a match, and making a public appearance with Intel’s CEO. Once you build your own Jimmy (or whatever you choose to call it), it can send tweets on your behalf, sing or dance with you, bring you food, and more.

Led by Brian David Johnson, Intel’s robotics lab ultimately aims to build an easy-to-make, easy-to-use, completely open source robot that is extremely social and friendly, and able to dream, feel and think. While it may seem too good to be true that you can build your own robot and make it do whatever you want, Johnson explains that it is simply akin to a smartphone with customisable apps.

The company is also betting big on the open source model: a smart, social robot like Jimmy needs to evolve constantly, and what better way to make that happen than to have the whole world contribute apps and ideas?

Please, Monica…

Do you remember walking into your dad’s office and being greeted by his personal assistant, who politely seated you, offered you your favourite beverage and a book, chatted amiably and then gently broke the news that your father was out at a meeting? Do you remember her stepping in to solve small problems when your dad was overworked, booking tickets, paying bills and helping out with whatever professional and personal tasks could be shared? Where did those assistants go? Why aren’t today’s smartphones and laptops as good as those reliable personal secretaries at reducing your stress? Perhaps it is because these inanimate objects cannot think or feel, or even realise how tired or stressed you are!

You would not feel this way if you had a couple of digital assistants like Eric Horvitz’s. Horvitz, managing director of Microsoft Research Redmond, is deeply involved in the Situational Interaction project, which aims to enable many forms of complex, layered interaction between machines and humans.

People who visit Horvitz at his Redmond office are often pleasantly surprised by the practical results of this research. When you reach his floor, a cute little robot greets you, gives you directions with appropriate hand gestures, and lets Horvitz’s virtual assistant, Monica, know that you have arrived. Monica, an onscreen personality, greets you in her unmistakable British accent. In the background, she quickly weighs the costs and benefits of an unplanned interruption, taking into account Horvitz’s current desktop activity, his calendar and his past behaviour. Only when she is convinced that a short interruption is acceptable does she let you in. And Monica can do much more: she can access Horvitz’s online calendar, detect whether he is in his office, predict when he will finish a task, infer how busy he is, and estimate when he will return to his office after a meeting and when he will read and reply to emails. Based on her ‘observation’ of his past behaviour, she can even predict, quite accurately, when he will wrap up a phone conversation!
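The article does not describe Monica’s internals, so the following is only a toy Python sketch of the general idea: estimate how likely the person is to be busy from a few signals, then let a visitor in only if the benefit outweighs the expected cost of the interruption. Every name, signal and weight in it (`Context`, `prob_busy`, `should_interrupt`, the 0.5 meeting bonus, the typing-rate scaling) is a hypothetical assumption, not part of Microsoft’s system.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical signals an assistant might observe (values are illustrative)."""
    in_meeting: bool     # e.g. read from the online calendar
    typing_rate: float   # keystrokes per minute at the desktop
    prior_busy: float    # share of similar past moments when the person was busy


def prob_busy(ctx: Context) -> float:
    """Toy estimate (0..1) that the person is too busy to be interrupted.

    The weights below are made up for illustration; a real system would
    learn them from observed behaviour.
    """
    p = ctx.prior_busy                      # start from past behaviour
    if ctx.in_meeting:
        p += 0.5                            # a meeting strongly suggests "busy"
    p += min(ctx.typing_rate / 300.0, 0.3)  # heavy typing suggests focused work
    return min(p, 1.0)                      # clamp to a valid probability


def should_interrupt(ctx: Context, benefit: float, cost_if_busy: float,
                     cost_if_free: float = 0.0) -> bool:
    """Let the visitor in only if the benefit exceeds the expected cost."""
    p = prob_busy(ctx)
    expected_cost = p * cost_if_busy + (1 - p) * cost_if_free
    return benefit > expected_cost
```

For instance, with a benefit of 5 and a cost of 10 if the person is busy, a visitor arriving during a meeting with no typing and a past-busy share of 0.2 (estimated busy probability 0.7, expected cost 7) would be asked to wait, while one arriving during light typing of 30 keystrokes a minute with a past-busy share of 0.1 (probability 0.2, expected cost 2) would be let in.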

Machines that are part of this project have situational awareness and can take into account the physical aspects of an interaction, such as people’s gait and gestures, which helps them better understand the tone of the interaction. The project integrates machine vision, natural language processing, machine learning, automated planning, speech recognition, acoustical analysis, sociolinguistics and more, to help robots and computers better understand multiparty interaction. The team has tried to endow these systems with short-term and long-term memory so that they can also draw on past experience when deciding how to react in a particular situation. In a Microsoft Research article, Horvitz comments, “Interactions with the system, especially when we integrate new competencies, seem magical, with the magic emerging from a rich symphony of perception, reasoning and decision-making that’s running all the time.”

Help me, haathi

(Courtesy: Microsoft Research)

The elephant is one of the most intelligent creatures on our planet, and its trunk is undoubtedly one of nature’s smartest creations. Tens of thousands of muscles work in tandem, allowing the trunk to crack a small nut as deftly as it uproots a large tree! This versatility has long inspired robotics scientists, and a few months ago, scientists at the German engineering firm Festo finally developed a bionic elephant trunk that can learn and work much like the real thing.

The trunk is made of 3D-printed segments and is controlled by a system of pneumatic muscles. Instead of conventional precision control software, the team used a method called ‘goal babbling’, a form of trial-and-error learning inspired by how infants learn to use their muscles. As the robot moves, it records the tiny pressure adjustments made in the pneumatic tubes that drive the artificial muscles. It then builds a map relating these pressures to the trunk’s exact position, which it uses to calibrate the pressure in each tube.
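To make goal babbling a little more concrete, here is a heavily simplified Python sketch. A made-up two-segment arm stands in for the trunk, with two ‘pressures’ between 0 and 1 bending the segments. The learner repeatedly picks a goal position, uses what it has recorded so far to guess the pressures that reach it, adds some exploratory noise, and records where the tip actually ended up. Real goal-babbling controllers learn smoother inverse models; the nearest-neighbour lookup here, the kinematics in the hypothetical `forward` function and all parameter values are assumptions for illustration only, not Festo’s controller.

```python
import math
import random


def forward(cmd):
    """Toy stand-in for the trunk (hypothetical kinematics).

    Two 'pressures' in [0, 1] each bend one unit-length segment by up to
    90 degrees. Returns the tip position (x, y).
    """
    a1 = cmd[0] * math.pi / 2
    a2 = a1 + cmd[1] * math.pi / 2
    return (math.cos(a1) + math.cos(a2), math.sin(a1) + math.sin(a2))


class InverseModel:
    """Remembers (position, command) pairs and answers with the nearest one."""

    def __init__(self):
        self.memory = []  # list of ((x, y), (p1, p2))

    def add(self, pos, cmd):
        self.memory.append((pos, cmd))

    def __call__(self, target):
        # Return the command whose recorded tip position is closest to target
        _, cmd = min(self.memory, key=lambda m: math.dist(m[0], target))
        return cmd


def goal_babble(goals, rounds=200, noise=0.1, seed=0):
    """Explore around the current best guess for randomly chosen goals."""
    rng = random.Random(seed)
    model = InverseModel()
    home = (0.0, 0.0)
    model.add(forward(home), home)  # start from a known resting posture
    for _ in range(rounds):
        goal = rng.choice(goals)
        guess = model(goal)
        # Perturb the guess and clip each pressure to the valid range [0, 1]
        cmd = tuple(min(1.0, max(0.0, c + rng.gauss(0, noise))) for c in guess)
        model.add(forward(cmd), cmd)  # record where the tip actually went
    return model
```

Because this simulated arm is deterministic, the model’s answer for a goal is simply the recorded posture whose tip came closest, so the error for that goal can only shrink as babbling continues.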

The trunk can be trained by manually guiding it into the required positions. It may resist the movement at first, but it gradually yields and follows, and then ‘learns’ the movement. So, the next time you push it towards the same position, it moves easily and naturally. The team calls this ability to remember past movements ‘muscle memory’.

The company hopes that this muscle memory, combined with the trunk’s dexterity, will enable its use in industrial environments and for jobs such as changing street lights or picking apples.

To the rescue, MacGyver

Inspired by the famous fictional secret agent Angus MacGyver, known for his resourceful troubleshooting, researchers at Georgia Tech presented a paper titled Robots Using Environment Objects as Tools: The ‘MacGyver’ Paradigm for Mobile Manipulation at the IEEE International Conference on Robotics and Automation (ICRA 2014). The MacGyver robots proposed in the paper stand out from the current generation in their ability to use objects in their environment to solve problems, rather than being thwarted by unpredictable surroundings.

To demonstrate the concept, the team designed a complete rescue scenario with a 100 kg brick obstacle blocking the entrance to a room and a 100 kg loaded cart nearby. The researchers described how Georgia Tech’s Golem Krang robot used the various objects around it to complete the task: “Interestingly, the loaded cart becomes a fulcrum for an arbitrary board to topple the bricks. Then the bricks, which were initially an obstacle, are used as a fulcrum for a lever to pry the door open. Finally, the robot uses a wider board to create a bridge and perform the simulated rescue.”

Another Georgia Tech team presented a second paper at the same conference, detailing how a humanoid robot can be taught to cross a gap by finding and using an object in its environment. The team achieves this behaviour using a concept it calls EnvironmenT Aware Planning (ETAP), which lets the robot evaluate resources in its environment and use them to augment its locomotion and achieve its goal.

In the demonstration, an HRP-2 robot had to cross a fairly large gap. Guided by an ETAP-based planning system, the robot picked up a board from its surroundings and dropped it across the gap so that it rested on the platforms on either side. To do this, it evaluated several boards and picked one that was longer than the gap to be crossed.
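The board-selection step lends itself to a small sketch. The Python below is not the ETAP planner, which would have to consider much more, such as how to grasp and place the board; it only illustrates the filtering described above under assumed constraints. A board must be longer than the gap plus a hypothetical overlap on each side and light enough for an assumed payload limit, and the lightest such board is preferred. `Board`, `choose_bridge`, `overlap_m` and `max_payload_kg` are all hypothetical names, and the default values are made up.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Board:
    """A candidate object the robot might find nearby (hypothetical)."""
    name: str
    length_m: float
    mass_kg: float


def choose_bridge(boards: Sequence[Board], gap_m: float,
                  overlap_m: float = 0.1,
                  max_payload_kg: float = 2.0) -> Optional[Board]:
    """Pick a board that spans the gap and that the robot can carry.

    A board qualifies if it is at least gap_m plus overlap_m on each side
    (so it can rest on both platforms) and weighs no more than the assumed
    payload limit. Among qualifying boards, the lightest is returned;
    None means no board in the environment will do.
    """
    usable = [b for b in boards
              if b.length_m >= gap_m + 2 * overlap_m
              and b.mass_kg <= max_payload_kg]
    return min(usable, key=lambda b: b.mass_kg, default=None)
```

With boards of 0.5 m, 1.2 m and 1.5 m (the last too heavy to carry under the assumed limit), a 0.8 m gap selects the 1.2 m board, while a 1.4 m gap yields `None`, meaning no suitable bridge is available.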

This research suggests that future robots could understand their environment and react to it intelligently, paving the way for fully autonomous movement.

Teach me, crowd!

We have been talking about robots that are aware of their environment and learn from it. But that means their knowledge is only as good as what they see around them and the people they learn from. If the behaviour or methods around them are flawed, their learning will be flawed too.

For example, if a robot is assigned the task of building something from blocks, its construction will only be as good as the explanation or design it learns from the people around it, which may or may not be the best way to build the object. What if, instead, a service like Amazon’s Mechanical Turk were used to crowd-source designs for the required object, and the robot used machine learning to analyse them and choose the best one?