This article talks about the use of machine learning and deep learning models on handheld devices like mobile phones, Raspberry Pi, and microcontrollers.

Mobile devices are now an important part of our lives. Empowering them with machine learning (ML) models helps in creating more creative and real-time applications. TensorFlow Lite is a framework developed by Google that is open source, product-ready, and cross-platform. It is used to build and train ML and deep learning (DL) models on devices like mobile, embedded, and IoT devices.

TensorFlow is a specially designed framework that converts pre-trained models residing in TensorFlow to a specific format that is suitable for hand-held devices by considering speed and storage optimisation needs. This format is found to work well for mobile devices with Android, iOS, or Linux based embedded devices like microcontrollers or Raspberry Pi.

Deployment of the pre-trained model on mobile devices makes it convenient to use them at any point of time.

Interfacing your model with your mobile device

Let us look at the features of ML and DL models that are important for interfacing with mobile devices.

- Low latency. Deploying DL models on mobile devices makes it convenient to use irrespective of network connectivity, as the deployed model with suitable interface eliminates a round-trip to the server and makes access of the functionality faster.

- Light weight. Mobile devices have limited memory and computation power. Hence, the DL model deployed on a mobile device should be in smaller binary size and light-weight.

- Safety. The interface made for the deployed model on the mobile device is accessible within the device only and it shares no data outside the device or network, eliminating data leak issues.

- Limited power consumption. Connection to external network demands maximum power on the mobile device. However, models deployed on the hand-held device do not demand network connection, resulting in low power consumption.

- Pre-trained model. The pre-trained model can be trained on high-computing infrastructure like graphics processing unit (GPU) or on cloud infrastructure for several tasks like object detection, image classification, natural language processing, etc. The model can then be converted and deployed for mobile devices on different platforms.

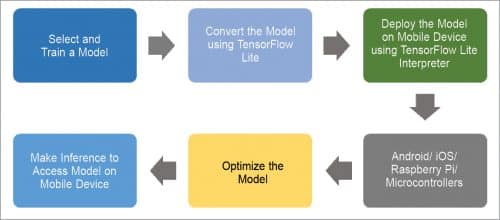

Fig. 1 illustrates the workflow of TensorFlow Lite.

Model selection and training

Model selection and training is a crucial task for classification problems. There are several options available that can be used for this purpose.

- Build and train a custom model using application-specific dataset

- Apply a pre-trained model like ResNet, MobileNet, InceptionNet, or NASNetLarge, which are already trained on ImageNet dataset

- Train the model using transfer learning for the desired classification problem

Model conversion using TensorFlow Lite

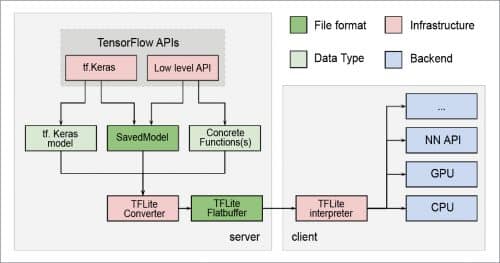

Trained models can be converted to the TensorFlow Lite version. TensorFlow Lite is a special model format which is light-weight, accurate, and suitable for mobile and embedded devices. Fig. 2 shows the TensorFlow Lite conversion process.

Using TensorFlow converter, the TensorFlow model is converted to a flat buffer file called ‘.tflite’ file. This .tflite file is what is deployed to the mobile or embedded device.

The trained model obtained after the training process is required to be saved. The saved model is an architecture that stores different information related to weights, biases, and training configuration in a single file. Thus, sharing and deploying of the model becomes easy with the saved model.

The following code is used to convert the saved model to TensorFlow Lite.

#save the model after compiling

model.save(‘test_keras_model.h5’)

keras_model=tf.keras.models.load_model

(‘ test_keras_model.h5’)

#conver the tf.keras model to TensorFlow

Lite model

converter=tf.lite.TFLiteConverter.from_

keras_model(keras_model)

tflite_model=converter.convert()

Model optimisation

An optimised model demands less space and resources on hand-held devices like mobile and embedded devices. Therefore, TensorFlow Lite uses two special approaches—Quantisation and Weight Pruning—to achieve model optimisation.

Quantisation makes the model light-weight; it refers to the process of reducing the precision of the numbers which are used to represent the different parameters of the TensorFlow model. Weight pruning is the process of trimming the parameters in the model which have less impact on the overall model performance.

Make inference to access models on mobile devices

The TensorFlow Lite model can be deployed on many types of hand-held devices like Raspberry Pi, Android, iOS, or microcontrollers. Following the below steps can ensure a mobile interface that allows you to access the ML/DL model easily and accurately.

- With the model, initialise and load interpreter

- Assign the tensors and obtain input and output tensors

- Read and pre-process the image by loading it into a tensor

- Use and invoke an interpreter that makes the inference on the input tensor

- Generate the result of the image by mapping the result from the inference

This article was first published in March 2021 issue of Open Source For You

Nilay Ganatra (left) is assistant professor and Dr Atul Patel (right) is Dean and Professor, Faculty of Computer Science and Applications, CHARUSAT, Changa, Gujarat, India