If machines could recognise objects the way human beings do, it would be quite interesting. Object detection is a popular topic nowadays, so let us make an object detection camera that classifies objects and people in real time and streams the results live to a web page opened at the device's IP address.

| No. | Bill of materials | Qty |
|---|---|---|
| 1 | Raspberry Pi 3 B | 1 |
| 2 | USB camera or Raspberry Pi camera | 1 |
| 3 | Keyboard | 1 |
| 4 | Monitor | 1 |
| 5 | Mouse | 1 |
| 6 | SD card (32 GB) | |
| 7 | HDMI to VGA cable | |
| 8 | 5V power adaptor with micro-USB connector | |
| 9 | SD card reader | |

We will use Edge Impulse to train an ML model, integrate it with a single-board computer (Raspberry Pi), and acquire live video and images from a camera connected to the Pi. The project can work both with an internet connection and without one, on a local network (intranet). With internet access, the project can be used for applications such as:

- Live door monitoring that raises an alert when an unknown person enters

- Industrial object classification and sorting using robotic arms

- Counting fruits on a tree or in a separator

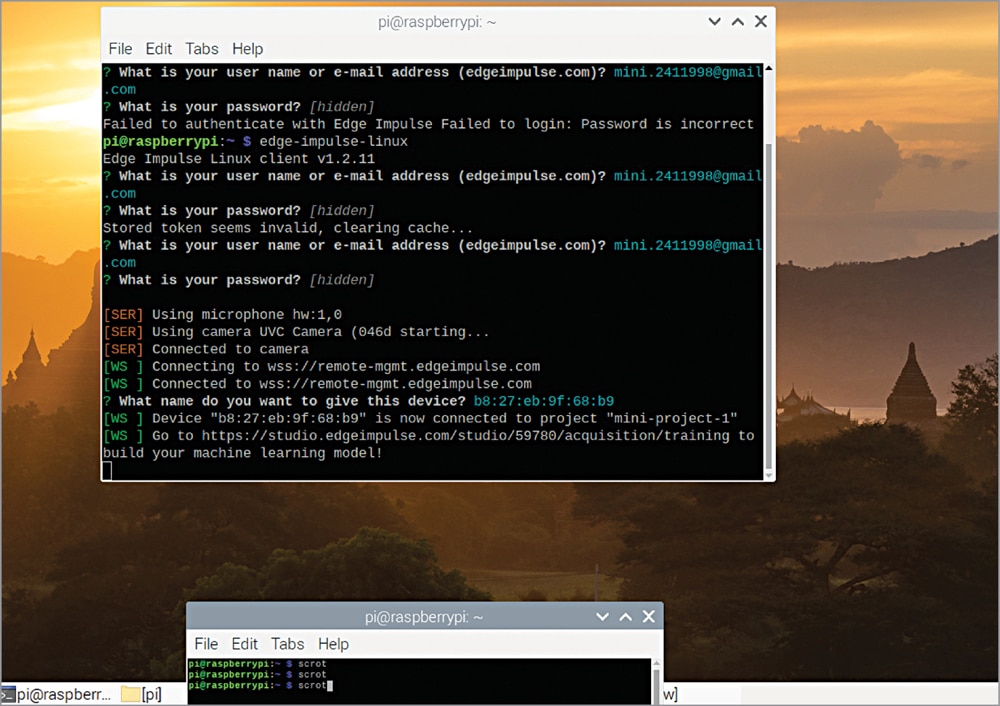

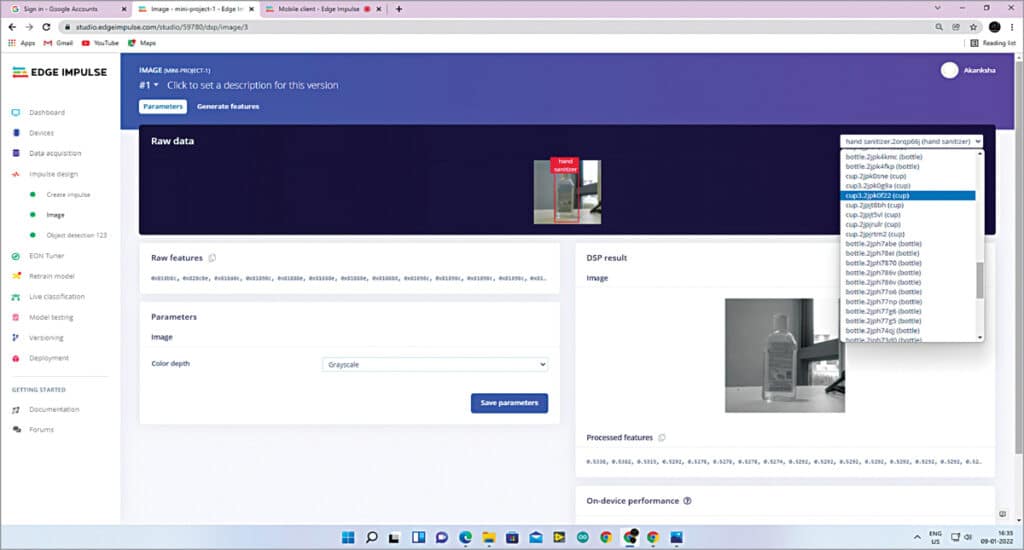

Fig. 2: Running Edge Impulse engine

There are various versions and flavours of OS available for the Raspberry Pi, but we need to prepare the SD card with the latest OS. To load the OS onto the SD card, follow the steps below:

- Download Raspberry Pi Imager on a PC.

- Launch Raspberry Pi Imager.

- Choose Raspberry Pi OS (32-bit) as the OS.

- Choose the SD card.

- Select Write.

- Once writing is complete, insert the SD card into the Raspberry Pi.

- Connect the Raspberry Pi to power, keyboard, mouse, and monitor.

- If the OS is properly installed, you will see the “Welcome to Raspberry Pi Desktop” message.
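Once the Pi boots, one quick way to confirm which OS was written is to read `/etc/os-release`. The sketch below is a hypothetical helper, not part of Raspberry Pi OS or Edge Impulse. It assumes only that Python 3 is available, which is the case by default on Raspberry Pi OS.

```python
import platform


def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        info[key] = value.strip('"')
    return info


def read_os_info(path="/etc/os-release"):
    """Return the parsed os-release file, or {} if it cannot be read."""
    try:
        with open(path, encoding="utf-8") as f:
            return parse_os_release(f.read())
    except OSError:
        return {}


if __name__ == "__main__":
    info = read_os_info()
    print("OS:", info.get("PRETTY_NAME", "unknown"))
    # Machine type reported by the kernel, e.g. "armv7l" or "aarch64".
    print("Architecture:", platform.machine())
```

Run it with `python3` in a terminal on the Pi. The `PRETTY_NAME` line should name the Raspberry Pi OS release you selected in the Imager.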

Now, with the OS ready, connect the USB camera or the Raspberry Pi camera. If you use the Raspberry Pi camera (connected with a ribbon cable), first enable the camera interface in the Raspberry Pi Configuration settings. Then install Edge Impulse on the Raspberry Pi by running the following commands in the terminal:

- `curl -sL https://deb.nodesource.com/setup_12.x | sudo bash -`

- `sudo apt install -y gcc g++ make build-essential nodejs sox gstreamer1.0-tools gstreamer1.0-plugins-good gstreamer1.0-plugins-base gstreamer1.0-plugins-base-apps`

- `sudo npm install edge-impulse-linux -g --unsafe-perm`
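Before creating a project, it can help to confirm two things: the camera is visible, and the commands above put the expected programs on the PATH. This is a hypothetical checking script. The tool names assume that the Node.js, GStreamer (`gst-launch-1.0` comes from gstreamer1.0-tools) and edge-impulse-linux installs succeeded. A camera showing up as a `/dev/video*` node is only a rough sign that it was detected, not proof that it works.

```python
import glob
import shutil

# Programs the install commands are expected to provide (assumed names).
REQUIRED_TOOLS = (
    "node",
    "npm",
    "gst-launch-1.0",
    "edge-impulse-linux",
    "edge-impulse-linux-runner",
)


def video_devices(pattern="/dev/video*"):
    """Return the sorted list of video device nodes matching the pattern."""
    return sorted(glob.glob(pattern))


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    cams = video_devices()
    print("Video devices:", cams or "none found (check the camera connection)")
    missing = missing_tools()
    print("Missing tools:", missing or "none")
```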

After setting up Edge Impulse, create and train the ML model. Open the Edge Impulse website, enter your name and email ID, and sign up. Create a new project in Edge Impulse, then open a Linux terminal on the Raspberry Pi and run the command `edge-impulse-linux`.

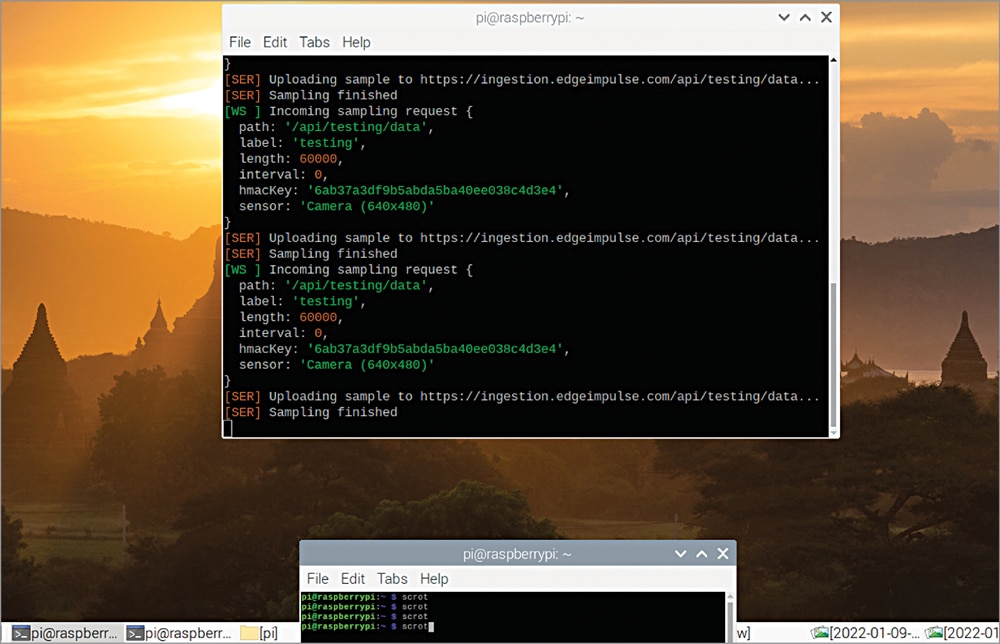

When you select the project at the terminal prompt, your device will try to connect to Edge Impulse. If the connection succeeds, the Raspberry Pi camera is detected as an Edge Impulse device and you get a link. Open the link in a web browser to reach the data acquisition interface, where you can take photos or collect other data for the ML model. Photos can be taken of objects like a bottle or cup, and of any other item (including human beings).

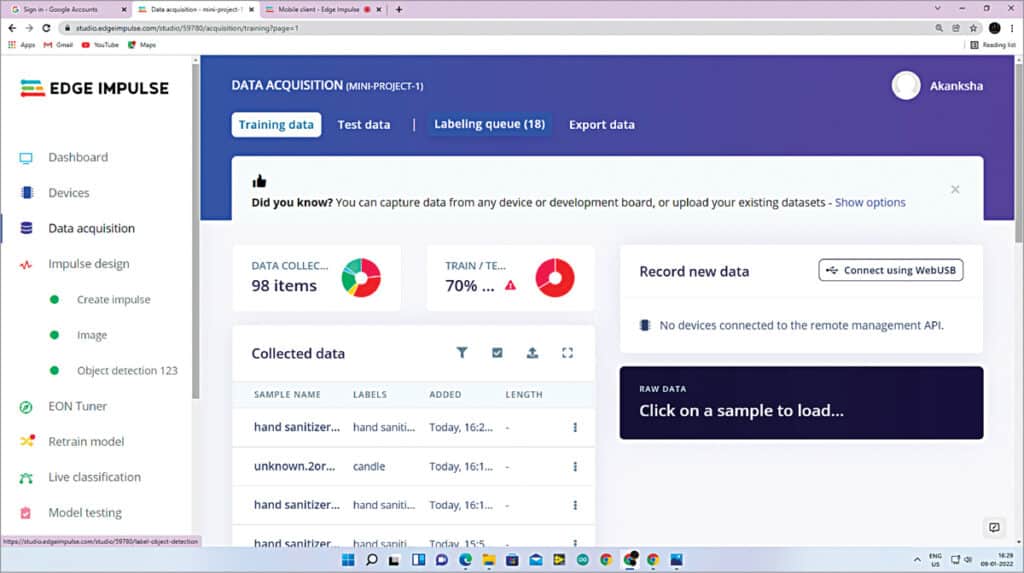

For data acquisition, take at least 100 photos of the different objects that you want to classify. These photos are used for training and testing the ML model. Split the data between training and testing in a 70:30 ratio, rebalancing the dataset if necessary.
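Edge Impulse performs the train/test split in its dashboard, so the project itself needs no code for this step. The sketch below only illustrates what a reproducible 70:30 split of 100 photos looks like, for example if you organise files offline before uploading. The file names and the seed are hypothetical.

```python
import random


def split_dataset(items, train_ratio=0.7, seed=42):
    """Shuffle items reproducibly and split them into (train, test) lists."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    cut = round(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]


photos = [f"photo_{i:03d}.jpg" for i in range(100)]  # hypothetical file names
train, test = split_dataset(photos)
print(len(train), len(test))  # 70 30
```

The shuffle keeps photos of the same object from ending up only in training or only in testing when they were captured in sequence.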

Go to the Dashboard and set the Labeling method to Bounding boxes (object detection). Label all the objects, and review them in the Labeling queue. Then go to Impulse design and set both the image width and the image height to 320 (320×320 pixels). You may change the object detection project’s name, then click Save Impulse.

In the Image section, configure the processing block and select Raw data at the top of the screen. Then choose the colour depth (RGB or Grayscale) and save the parameters.

Because each image has many dimensions (one value per pixel for each colour channel), dimensionality reduction takes place when the features are generated.
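To see how large the input is, assume the raw features contain one value per pixel per colour channel. A 320×320 image then carries the following number of values. This is a simple illustration of the arithmetic; Edge Impulse's internal feature layout may differ.

```python
def raw_feature_count(width, height, grayscale=False):
    """Number of raw input values for one image: one per pixel per channel."""
    channels = 1 if grayscale else 3  # RGB has 3 channels, Grayscale has 1
    return width * height * channels


print(raw_feature_count(320, 320))                  # 307200 (RGB)
print(raw_feature_count(320, 320, grayscale=True))  # 102400 (Grayscale)
```

Choosing Grayscale cuts the input to one third of the RGB size. This can help on a Raspberry Pi 3 if colour is not needed to tell the objects apart.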

In the Object Detection section, set the number of training cycles and the learning rate. In the author’s prototype, the number of training cycles was set to 25 and the learning rate to 0.015.

You can now start training the model. After training, Edge Impulse reports a precision score for the model. To validate the model, go to Model testing and select Classify all. Then go to Live classification.
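The sketch below gives a rough idea of what a precision score measures for object detection. A predicted box counts as correct when it has the right label and overlaps a not-yet-matched ground-truth box with an intersection over union (IoU) of at least 0.5. Precision is then the number of correct predictions divided by all predictions. This is a simplified, hypothetical metric with greedy matching in input order. The score Edge Impulse reports may be computed differently, for example averaged over several IoU thresholds.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def precision(predictions, ground_truth, threshold=0.5):
    """Fraction of predicted (label, box) pairs that match an unused
    ground-truth pair with the same label and IoU >= threshold."""
    if not predictions:
        return 0.0
    unused = list(ground_truth)
    true_positives = 0
    for label, box in predictions:
        for gt in unused:
            if gt[0] == label and iou(box, gt[1]) >= threshold:
                unused.remove(gt)  # each ground-truth box matches at most once
                true_positives += 1
                break
    return true_positives / len(predictions)


# Hypothetical boxes in 320x320 image coordinates.
ground_truth = [("cup", (10, 10, 50, 50)), ("bottle", (100, 100, 40, 80))]
predictions = [("cup", (12, 12, 50, 50)), ("bottle", (200, 200, 40, 80))]
print(precision(predictions, ground_truth))  # 0.5
```

The cup prediction overlaps its ground truth with an IoU of about 0.85, so it counts as correct. The bottle prediction does not overlap its ground truth at all, so only one of the two predictions is correct.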

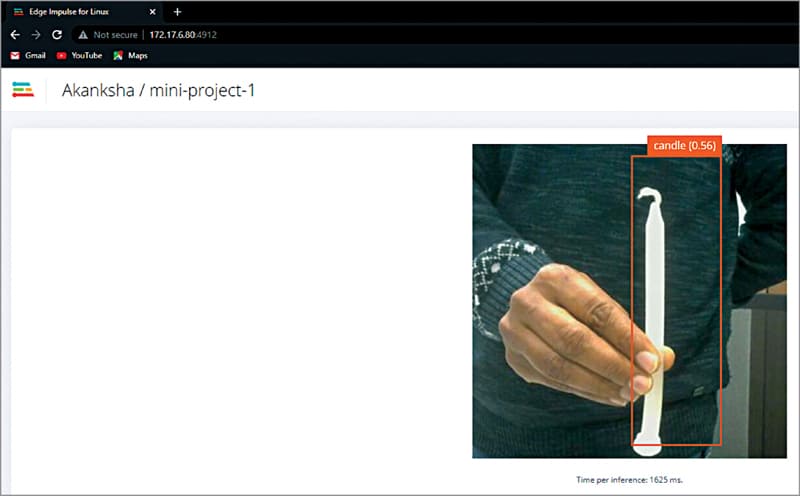

Put an object in front of the camera. The video of the object appears in the Live classification section of Edge Impulse, along with the label of the detected object, such as bottle, cup, or person. To view the output through an IP address instead, run the command `edge-impulse-linux-runner`.

This command builds the model, downloads it to the Raspberry Pi, and serves the live classification on a local web page. Open the URL it prints in a browser to see the output. In the author’s setup, this URL was http://192.168.1.19:4912.
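For applications such as counting fruits or people, the per-frame detections matter more than the video. The Edge Impulse Linux Python SDK (package `edge_impulse_linux`, which the steps above do not use) can return classification results as dictionaries. The helper below assumes the bounding-box layout printed in that SDK's example scripts: `result["bounding_boxes"]` entries with `label` and `value` keys. It counts objects per label. The function name, the 0.5 confidence threshold and the sample data are hypothetical.

```python
from collections import Counter


def count_detections(result, min_confidence=0.5):
    """Count detected objects per label, ignoring low-confidence boxes.

    Assumes result["result"]["bounding_boxes"] is a list of dicts with
    "label" and "value" (confidence), as in the SDK example output.
    """
    boxes = result.get("result", {}).get("bounding_boxes", [])
    return Counter(b["label"] for b in boxes if b["value"] >= min_confidence)


# Hypothetical result for one frame: two cups, a bottle and a weak detection.
sample = {
    "result": {
        "bounding_boxes": [
            {"label": "cup", "value": 0.91, "x": 24, "y": 40, "width": 48, "height": 56},
            {"label": "cup", "value": 0.78, "x": 150, "y": 44, "width": 50, "height": 60},
            {"label": "bottle", "value": 0.88, "x": 230, "y": 16, "width": 40, "height": 120},
            {"label": "person", "value": 0.32, "x": 0, "y": 0, "width": 96, "height": 200},
        ]
    }
}
print(dict(count_detections(sample)))  # {'cup': 2, 'bottle': 1}
```

Raising `min_confidence` trades missed objects for fewer false counts. A suitable value depends on how the trained model behaves on your own objects.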

Akanksha Gupta has an M.Tech in Electronics and Communication from NIT Jalandhar. She is a Research Scholar in the Electrical department of IIT Patna. She is interested in designing real-time embedded systems and biomedical signal processing.

Sagar Raj is Founder and Director at Shoolin Labs, Jaipur (Rajasthan). He is also Founder and Director at Lifegraph Biomedical Instrumentation, Buxar, and an IoT Trainer at Lifegraph Academy, Incubation Centre, IIT Patna.