Communicating with a specially challenged person who can’t speak or hear is quite difficult, especially when you don’t know sign language.

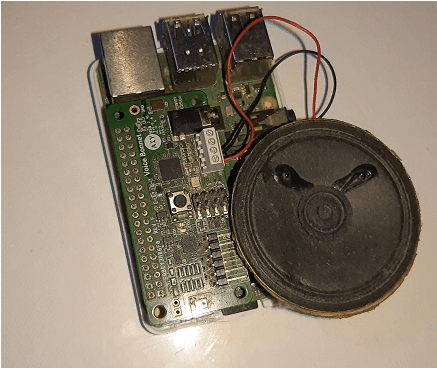

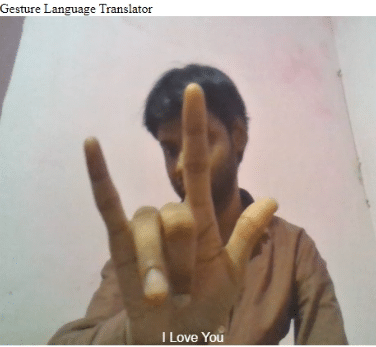

To ease this problem, today you will learn to make a Sign Language Translator device that converts sign language into spoken language. The device is based on a machine learning (ML) model that recognizes different sign language gestures so that they can be translated accurately.

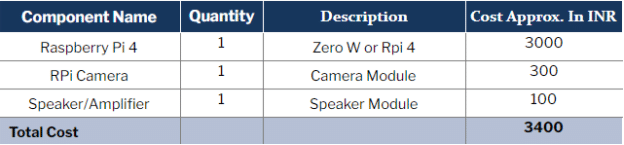
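
The article does not show how a recognized gesture becomes audible speech. Below is a minimal sketch that assumes the browser's Web Speech API (`speechSynthesis`) provides the voice output. The hypothetical `createGestureSpeaker` helper speaks a label only after it has stayed the recognized gesture for several consecutive camera frames, so hands moving between signs do not trigger a stream of words. The default of 10 frames is an arbitrary assumption that you will need to tune.

```javascript
// Hypothetical helper (not from the original project): speaks a label only
// after it has been the recognized gesture for `stableFrames` frames in a row,
// and does not repeat the last spoken label until a stable run of `null`
// ("no gesture") frames has been seen.
function createGestureSpeaker(speak, stableFrames = 10) {
  let candidate = null; // label seen on the most recent frame
  let count = 0; // how many consecutive frames `candidate` has been seen
  let lastSpoken = null; // last label handed to speak()

  return function onFrame(label) {
    if (label === candidate) {
      count += 1;
    } else {
      candidate = label;
      count = 1;
    }
    if (count !== stableFrames) return false;
    if (label === null) {
      lastSpoken = null; // a stable gap allows the same word to be spoken again
      return false;
    }
    if (label === lastSpoken) return false;
    lastSpoken = label;
    speak(label);
    return true;
  };
}

// Speech output through the Web Speech API; does nothing outside a browser.
function speakInBrowser(text) {
  if (typeof window !== "undefined" && "speechSynthesis" in window) {
    window.speechSynthesis.speak(new SpeechSynthesisUtterance(text));
  }
}

// Usage in the browser page: const onFrame = createGestureSpeaker(speakInBrowser);
```

Call the returned function once per camera frame with the recognized label, or with `null` when no gesture is recognized confidently.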

Bill of Materials

Preparing The ML Model

Different countries use different sign languages. For example, India uses Indian Sign Language (ISL), while the USA uses American Sign Language (ASL). So, you first need to decide which one you wish to implement.

To obtain ASL datasets, visit here; for ISL, visit here. You can also search here for many hand gestures that are used in daily life.

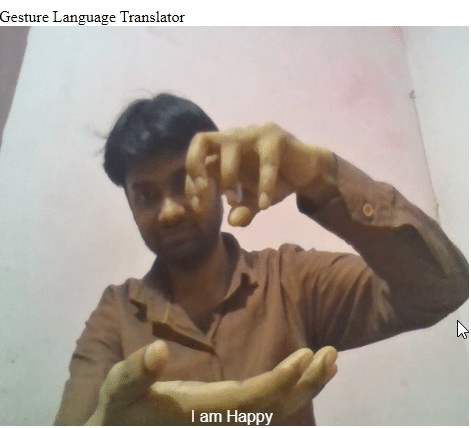

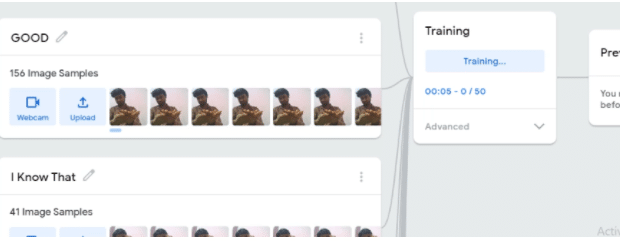
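
Before uploading a dataset, it helps to know how many images each sign has. This minimal sketch assumes that the downloaded dataset uses one folder per sign, which is a common layout but worth checking for your dataset. The hypothetical `groupByLabel` function groups image paths by their parent folder so that each group can be uploaded as one class. To keep the example self-contained, it does not read the file list from disk (you could get that list with Node's `fs` module, for example).

```javascript
// Hypothetical helper: groups image paths by their parent folder name,
// assuming a "<dataset>/<label>/<image>" layout with one folder per sign.
function groupByLabel(paths) {
  const groups = {};
  for (const path of paths) {
    const parts = path.split(/[\\/]/); // accept "/" and "\" separators
    if (parts.length < 2) continue; // file has no parent folder: skip it
    const file = parts[parts.length - 1];
    const label = parts[parts.length - 2];
    if (!/\.(jpe?g|png)$/i.test(file)) continue; // keep common image types only
    if (!groups[label]) groups[label] = [];
    groups[label].push(path);
  }
  return groups;
}

// Number of images per label, e.g. to spot classes with too few samples.
function countPerLabel(groups) {
  const counts = {};
  for (const [label, files] of Object.entries(groups)) counts[label] = files.length;
  return counts;
}

const example = groupByLabel(["asl/A/1.jpg", "asl/A/2.png", "asl/B/1.jpg", "asl/B/notes.txt"]);
console.log(countPerLabel(example)); // { A: 2, B: 1 }
```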

After gathering the datasets, prepare the ML model for training. There are many options for this, such as TensorFlow, Keras, PyTorch, and Google’s Teachable Machine. For this project, I am using Google’s Teachable Machine, an online service for creating ML models. Feed the datasets into the tool of your choice, and capture pictures of the different hand gestures/signs with a camera. Create one class per gesture, label each class according to its meaning, and then train the model.
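Because the class labels become the translator's output, it helps to decide early how each label should be spoken. The sketch below uses a hypothetical `PHRASES` table. Its label names are examples only and are not part of the original project. The sketch also includes a check for labels that still have Teachable Machine's default names ("Class 1", "Class 2", and so on), which suggests they were never renamed according to their meaning.

```javascript
// Hypothetical label-to-phrase table; the labels here are examples only.
// Use the exact class names you typed into Teachable Machine as keys.
const PHRASES = {
  hello: "Hello",
  thank_you: "Thank you",
};

function labelToPhrase(label, phrases = PHRASES) {
  if (Object.prototype.hasOwnProperty.call(phrases, label)) {
    return phrases[label];
  }
  // Fallback: turn "good_morning" or "good-morning" into "good morning".
  return label.replace(/[_-]+/g, " ").trim();
}

// Labels still matching the default "Class <n>" pattern were probably
// never renamed to their meaning.
function unrenamedLabels(labels) {
  return labels.filter((l) => /^Class \d+$/.test(l));
}

console.log(labelToPhrase("thank_you")); // Thank you
console.log(labelToPhrase("good_morning")); // good morning
```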

Deploying ML Model

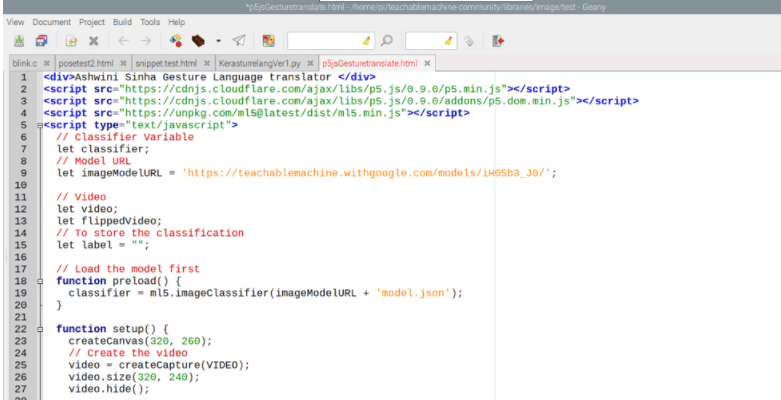

After training, export the model. You can either download the model files or upload the model to get a shareable link. Uploading gives you the link to the model along with code snippets for several platforms, such as JavaScript (JS), p5.js, and Python with Keras. After uploading the model, copy the JS code snippet.
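In the JavaScript snippet that Teachable Machine generates, the model is loaded from two files under the model's base URL, `model.json` and `metadata.json`, which are passed to `tmImage.load(modelURL, metadataURL)`. The hypothetical `modelUrls` helper below builds both URLs from either the shareable link or a local folder. The `XXXX` model ID is a placeholder, not a real model.

```javascript
// Hypothetical helper: builds the two file URLs that the generated
// Teachable Machine snippet passes to tmImage.load(modelURL, metadataURL).
// `baseUrl` is the shareable link from uploading, or a local folder such as
// "./my_model/" if the model files were downloaded instead.
function modelUrls(baseUrl) {
  const base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
  return {
    modelURL: base + "model.json",
    metadataURL: base + "metadata.json",
  };
}

// "XXXX" is a placeholder for your own model ID.
const { modelURL, metadataURL } = modelUrls("https://teachablemachine.withgoogle.com/models/XXXX");
console.log(modelURL); // https://teachablemachine.withgoogle.com/models/XXXX/model.json
// In the browser page: const model = await tmImage.load(modelURL, metadataURL);
```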

DIY Gesture Language Translator – Coding

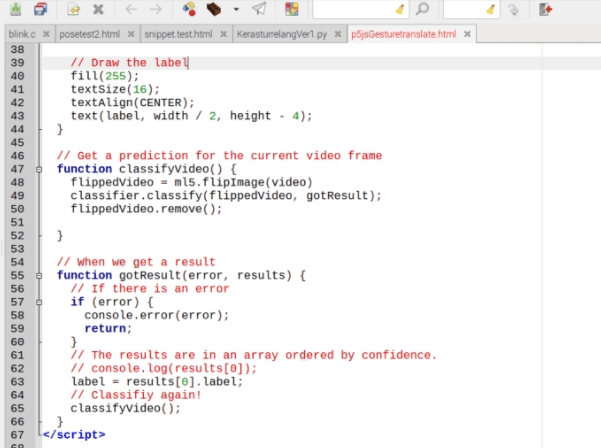

Open the Raspberry Pi desktop and create a new HTML file; the file used here is Gesturetranslator.html. Paste the copied JS code snippet into it and add the project name to the page. Increase the canvas size (the width and height passed to `tmImage.Webcam` in the snippet) so that the camera feed is displayed larger and is clearly visible. Save the file.
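
On every frame, the generated snippet calls `model.predict(webcam.canvas)` and lists the probability of every class. A translator usually needs a single answer instead. This minimal sketch assumes the prediction array contains the `{ className, probability }` entries that the Teachable Machine snippet displays. The 0.8 confidence threshold is an arbitrary assumption and does not come from the article.

```javascript
// Picks a single answer from the prediction array that the Teachable Machine
// snippet gets from `await model.predict(webcam.canvas)`, where each entry
// looks like { className, probability }. Returns null when even the best
// class is below `threshold` (0.8 here is an assumed value).
function topPrediction(predictions, threshold = 0.8) {
  let best = null;
  for (const p of predictions) {
    if (best === null || p.probability > best.probability) best = p;
  }
  if (best === null || best.probability < threshold) return null;
  return best.className;
}

// Inside the snippet's predict() loop, for example:
//   const prediction = await model.predict(webcam.canvas);
//   const label = topPrediction(prediction); // null means "no sign detected"

console.log(topPrediction([
  { className: "hello", probability: 0.93 },
  { className: "thank_you", probability: 0.07 },
])); // hello
```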

Testing

Enable the camera interface on the Raspberry Pi and connect the camera to the board. Then open Gesturetranslator.html in Google Chrome or another browser that supports webcam access, and allow camera access when prompted.
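
If the camera feed does not appear, the browser's `navigator.mediaDevices.getUserMedia()` call usually rejects with a named error. The sketch below shows a hypothetical `checkCamera` helper that requests the camera once and turns the standard error names into hints for this setup. The hint texts are suggestions, not official browser messages.

```javascript
// Maps DOMException names that getUserMedia() can reject with to a
// troubleshooting hint (hint wording is a suggestion for this project).
const CAMERA_HINTS = {
  NotAllowedError: "Camera permission was denied; allow camera access for this page.",
  NotFoundError: "No camera was found; check the camera cable and that the camera interface is enabled.",
  NotReadableError: "The camera could not be started; close other apps using it and retry.",
  OverconstrainedError: "The camera cannot satisfy the requested video settings; try a smaller size.",
  SecurityError: "Camera access is blocked in this context by the browser's security settings.",
};

function cameraHint(error) {
  const name = error && error.name;
  return CAMERA_HINTS[name] || `Unexpected camera error: ${name || String(error)}`;
}

// Browser-only check: asks for the camera once and returns a hint string,
// or null if the camera started successfully.
async function checkCamera() {
  if (typeof navigator === "undefined" || !navigator.mediaDevices) {
    return "navigator.mediaDevices is unavailable in this environment.";
  }
  try {
    const stream = await navigator.mediaDevices.getUserMedia({ video: true });
    stream.getTracks().forEach((t) => t.stop()); // release the camera again
    return null;
  } catch (err) {
    return cameraHint(err);
  }
}

// Usage in the browser console: checkCamera().then((hint) => console.log(hint ?? "Camera OK"));
```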
