The first event-based vision sensor designed for ultra-low-power Edge AI devices, targeting AR/VR, security, IoT, and other applications.

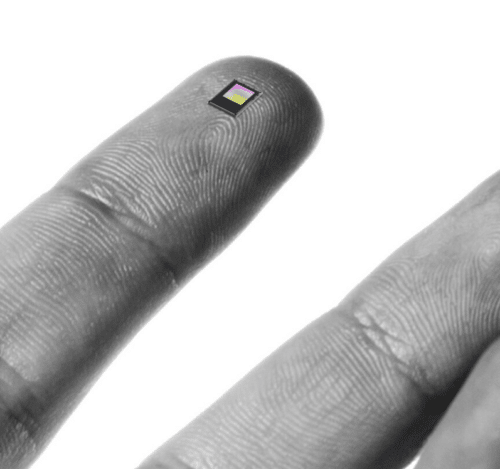

Prophesee SA, known for its advanced neuromorphic vision systems, has launched the GenX320 Event-based Metavision sensor, the first event-based vision sensor for ultra-low-power Edge AI vision devices. The fifth-generation Metavision sensor, with a compact 3 × 4 mm die, extends the company's technology into numerous intelligent Edge market segments. These include augmented reality/virtual reality (AR/VR) headsets, security systems, monitoring and detection systems, touchless displays, eye tracking, always-on smart Internet of Things (IoT) devices, and more.

The GenX320 builds on the company's established track record and expertise in event-based vision, a technology known for its speed, low latency, high dynamic range, power efficiency, and privacy advantages across a wide range of applications.

Featuring 320 × 320 resolution and a backside-illuminated (BSI) stacked design with 6.3 μm pixels, the sensor has a compact 1/5″ optical format. It is designed for efficient integration into energy-, compute-, and size-constrained embedded vision systems at the edge. The sensor delivers high-speed, robust vision at ultra-low power and performs well even in difficult operating and lighting conditions.
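As a quick check on what these figures imply, the active area of the pixel array follows from resolution times pixel pitch. This is a back-of-the-envelope calculation that ignores any border or dummy pixels, which the article does not specify:

$$
w = h = 320 \times 6.3\,\mu\text{m} = 2016\,\mu\text{m} \approx 2.02\,\text{mm},
\qquad
d = \sqrt{w^2 + h^2} = 2016\sqrt{2}\,\mu\text{m} \approx 2.85\,\text{mm}
$$

where $w$ and $h$ are the array width and height and $d$ is its diagonal.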

The sensor timestamps events with low latency and microsecond resolution, and supports flexible data formatting. On-chip intelligent power management reduces power consumption to as low as 36 μW, with smart wake-on-events functionality as well as deep sleep and standby modes.

Multiple integrated event data preprocessing, filtering, and formatting functions simplify integration with standard systems-on-chip (SoCs) and minimize the need for external processing. MIPI or CPI data output interfaces provide low-latency connectivity with embedded processing platforms, including low-power microcontrollers and modern neuromorphic processor architectures. The sensor is also AI-ready, with an on-chip histogram output compatible with multiple AI accelerators. Finally, it provides privacy at the sensor level: its sparse, frameless event data inherently omits static parts of the scene.
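The article describes the on-chip histogram output only at a high level. As a rough illustration of the general idea, and not Prophesee's actual data format or API, the sketch below accumulates hypothetical decoded events (pixel x, pixel y, polarity, microsecond timestamp) into a two-channel 320 × 320 count histogram over a fixed time window. This is the kind of dense tensor an AI accelerator can consume. The `Event` class, its field names, and the `event_histogram` function with its window parameters are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List

WIDTH = 320   # GenX320 resolution as stated in the article
HEIGHT = 320


@dataclass(frozen=True)
class Event:
    """Hypothetical decoded event (not an official Prophesee type)."""
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # > 0 means brightness increase (ON), otherwise decrease (OFF)
    t_us: int      # timestamp in microseconds


def event_histogram(events: Iterable[Event], t_start_us: int, window_us: int,
                    width: int = WIDTH, height: int = HEIGHT) -> List[List[List[int]]]:
    """Count OFF and ON events per pixel within [t_start_us, t_start_us + window_us).

    Returns hist[channel][y][x], where channel 0 = OFF and channel 1 = ON.
    Pixels with no activity stay at zero, so a static scene yields an
    all-zero histogram.
    """
    hist = [[[0] * width for _ in range(height)] for _ in range(2)]
    t_end_us = t_start_us + window_us
    for ev in events:
        # Skip events outside the time window or outside the pixel array.
        if not (t_start_us <= ev.t_us < t_end_us):
            continue
        if not (0 <= ev.x < width and 0 <= ev.y < height):
            continue
        channel = 1 if ev.polarity > 0 else 0
        hist[channel][ev.y][ev.x] += 1
    return hist


if __name__ == "__main__":
    demo = [
        Event(10, 20, 1, 100),   # ON event inside the window
        Event(10, 20, 1, 250),   # second ON event at the same pixel
        Event(5, 5, 0, 900),     # OFF event inside the window
        Event(7, 7, 1, 2000),    # outside the 1000 us window, ignored
    ]
    h = event_histogram(demo, t_start_us=0, window_us=1000)
    print(h[1][20][10], h[0][5][5], h[1][7][7])  # prints "2 1 0"
```

Splitting counts by polarity keeps the direction of brightness change, which is usually informative for downstream models. Collapsing both channels into one would halve the tensor size at the cost of that information.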
