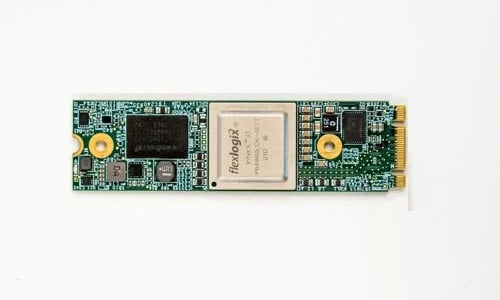

Flex Logix Technologies, one of the leading suppliers of embedded FPGA (eFPGA) technology, has announced the production availability of its InferX X1M boards. The module comes in a compact M.2 form factor and serves as an edge AI inference accelerator. Its small form factor and high-performance inference capability make it suitable for edge AI applications such as industrial machine vision, smart wearables, image processing, robotic vision, and analytics.

FPGAs offer great design flexibility and high performance at relatively low power, which makes them well suited to edge AI applications. The InferX X1M is built around Flex Logix’s InferX X1 edge inference accelerator, which the company describes as one of the most efficient AI inference accelerators for advanced edge AI workloads. It can handle the high-resolution image processing and low-power object detection required in edge servers and industrial vision systems.

According to the company, Flex Logix’s eFPGA platform speeds up key workloads by 30x to 100x compared with a processor. It also lets chips adapt to changing algorithms, protocols, standards, and user needs, which improves efficiency. The module has a low power requirement of 8.25 W, with a current draw of just 2.5 A.
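The power and current figures are consistent with each other. Assuming the module is powered from the 3.3 V supply rail that M.2 connectors provide (an assumption, since the supply voltage is not stated by the company here), electrical power is simply voltage multiplied by current:

$$
P = V \times I = 3.3\,\text{V} \times 2.5\,\text{A} = 8.25\,\text{W}
$$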

“With the general availability of our X1M board, customers designing edge servers and industrial vision systems can now incorporate superior AI inference capabilities with high accuracy, high throughput, and low power on complex models,” said Dana McCarty, Vice President of Sales and Marketing for Flex Logix’s Inference Products. “By incorporating an X1M board, customers can not only design new and exciting new AI capabilities into their systems, but they also have a faster path to production ramp versus designing their own custom card design.”

The company provides a software suite for the module, including a simple runtime framework that supports inference processing on both Windows and Linux. A tool ports trained Open Neural Network Exchange (ONNX) models to run on the X1M. The suite also includes an InferX X1 driver with external and internal APIs for configuring and deploying models and for handling the low-level functions that control and monitor the X1M board. This lets users access features such as control logic without having to understand hardware description languages or manually reprogram the FPGA bitstream.
