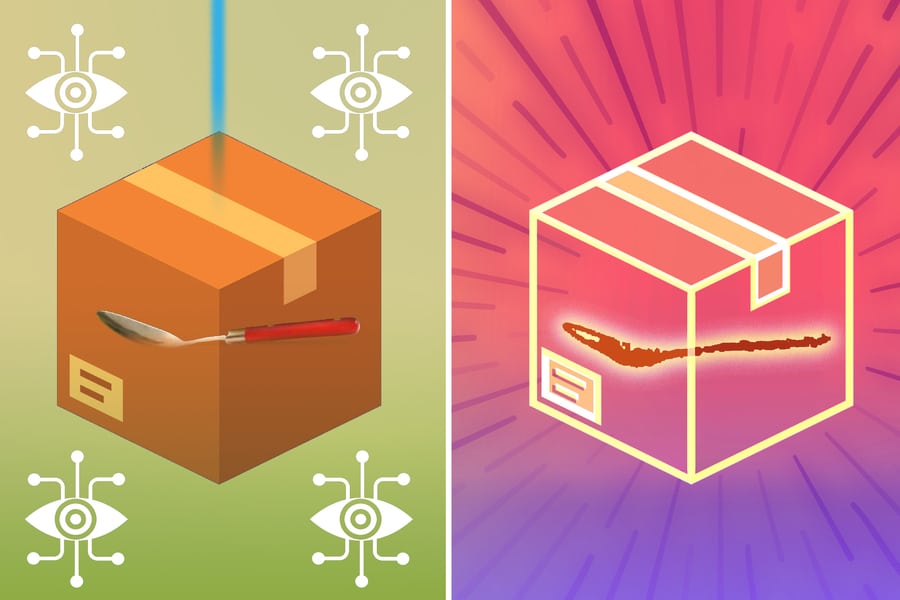

MIT’s new mmNorm technology lets robots detect and reconstruct hidden objects through packaging, promising major advances in warehouse inspection, factory automation, and home robotics.

The system uses millimeter wave (mmWave) signals, similar to those in Wi-Fi, to penetrate packaging material and reflect off concealed objects. An algorithm then processes these reflections to reconstruct the object’s 3D shape, even when the object is hidden from cameras and other optical sensors.

Unlike conventional radar, which struggles with fine details, mmNorm estimates surface normals: the directions perpendicular to an object’s surface at specific points. By combining these normals using a new mathematical model, it reconstructs a precise 3D profile of the hidden object.
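
To see why surface normals are enough to recover shape, consider a simplified 2D case. The sketch below is not mmNorm's mathematical model; it is a textbook normal-integration example, and the function name `integrate_normals` and its parameters are hypothetical. It assumes the surface is a height profile z(x) sampled at evenly spaced points, so a normal (nx, nz) implies a slope of -nx/nz, and summing the slopes yields the heights.

```python
import math


def integrate_normals(normals, dx=1.0, z0=0.0):
    """Recover heights z(x) from 2D surface normals (nx, nz).

    Illustrative assumption: the surface is a height profile z(x)
    sampled every `dx`, and every normal points upward (nz > 0).
    """
    slopes = []
    for nx, nz in normals:
        if nz <= 0:
            raise ValueError("normal must have a positive z component")
        # For z = f(x) the normal is proportional to (-f'(x), 1),
        # so the local slope is -nx / nz.
        slopes.append(-nx / nz)

    heights = [z0]
    # Trapezoidal rule: average neighboring slopes over each step.
    for s0, s1 in zip(slopes, slopes[1:]):
        heights.append(heights[-1] + 0.5 * (s0 + s1) * dx)
    return heights


# Usage: normals of the tilted plane z = 0.5 * x, sampled at four points.
length = math.hypot(-0.5, 1.0)
plane_normals = [(-0.5 / length, 1.0 / length)] * 4
profile = integrate_normals(plane_normals)
```

For the tilted plane in the usage lines, the recovered heights rise by about 0.5 per step, matching the plane's slope.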

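The researchers mounted a radar on a robotic arm that moves around an object and records the strength of the reflected signals at each position. Each radar antenna contributes a “vote” on the surface orientation, weighted by the strength of the reflection it receives, and the votes are combined to determine the surface shape. Because this process can yield many candidate surfaces, a 3D function then selects the most likely surface geometry.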
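
The voting idea can be illustrated with a minimal sketch. It is not the researchers' implementation: it assumes each antenna votes for the direction from a candidate surface point toward itself, weighted by the signal strength it received, and that the weighted votes are simply summed. The function name `estimate_normal` and the sample values are hypothetical.

```python
import math


def estimate_normal(point, antennas, strengths):
    """Combine weighted antenna votes into one unit surface normal.

    Illustrative assumption: each antenna votes for the unit direction
    from `point` toward its own position, weighted by the reflection
    strength it measured.
    """
    total = [0.0, 0.0, 0.0]
    for position, weight in zip(antennas, strengths):
        direction = [a - p for a, p in zip(position, point)]
        norm = math.sqrt(sum(c * c for c in direction))
        if norm == 0.0:
            continue  # antenna sits on the point; it has no direction to vote for
        for i in range(3):
            total[i] += weight * direction[i] / norm

    length = math.sqrt(sum(c * c for c in total))
    if length == 0.0:
        raise ValueError("votes cancel out; no normal can be estimated")
    return tuple(c / length for c in total)


# Usage: the antenna directly above the point receives the strongest
# reflection, so the combined vote points straight up.
antennas = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (-1.0, 0.0, 1.0)]
strengths = [1.0, 0.2, 0.2]
normal = estimate_normal((0.0, 0.0, 0.0), antennas, strengths)
```

In this toy setup the two weaker side votes cancel horizontally, leaving a normal along the z axis. Strong votes dominate and weak ones only nudge the result, which is the intuition behind combining measurements from many antenna positions.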

Performance That Outpaces the Competition

mmNorm achieved 96% reconstruction accuracy, significantly outperforming current methods that manage only 78%. It works across a wide range of materials—plastic, glass, wood, metal, and rubber—and can even distinguish between objects like a fork, knife, and spoon placed together.

The mmNorm technology opens up a wide range of applications across industries. In factories, it could enable robots to identify and accurately grasp tools hidden inside closed drawers, improving efficiency and reducing errors in automated workflows. In homes, robots equipped with mmNorm could safely locate and handle everyday items, making domestic automation more practical. The security sector stands to benefit as well, with enhanced scanning for concealed objects in airport baggage or during defense operations. In augmented and virtual reality, mmNorm could eventually let workers see fully hidden or occluded objects through AR headsets, adding a new layer of spatial awareness in industrial environments.

The MIT team plans to improve resolution, optimize performance for low-reflectivity objects, and enhance imaging through denser materials. This research, supported by the NSF, Microsoft, and the MIT Media Lab, marks a major leap in robotic perception and 3D imaging.