- New technology for allocating CPUs and GPUs dynamically in real time addresses the global GPU shortage while maximising the utilisation of existing computing resources.

- New parallel processing technology switches between program executions in real time within High-Performance Computing (HPC) systems, so resource-intensive applications can start immediately.

Fujitsu has unveiled the world’s first technology for dynamically allocating resources between CPUs and GPUs in real time. The technology gives priority to processes with high execution efficiency, even while other programs are already running on GPUs. It aims to address the worldwide GPU shortage, driven by soaring demand for generative AI, deep learning, and other applications, while maximising the utilisation of users’ existing computing resources.

In addition, the company introduced parallel processing technology that switches between multiple program executions in real time in a High-Performance Computing (HPC) system, which connects many computers to perform large-scale computations. This allows resource-intensive applications, such as digital twin simulations and generative AI tasks, to start immediately without waiting for a running program to finish. The company plans to integrate this technology into a computer workload broker currently in development, software in which AI automatically selects the most suitable computing resources for a customer’s problem based on computation time, accuracy, and cost. Fujitsu will continue to work with customers to validate the technology, with the aim of building a platform that helps address societal challenges and supports innovation for a sustainable future.

Features of the new technology

The company has developed the world’s first technology that distinguishes programs that benefit from running on GPUs from those that can be processed on CPUs, even while multiple programs are running. It does so by predicting each program’s acceleration rate and allocating GPUs in real time to the programs that are given priority.

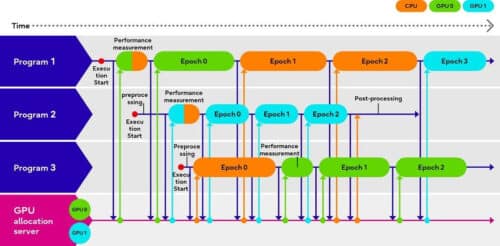

The figure illustrates a case in which a user wants to process three programs efficiently on one CPU and two GPUs. GPUs are first assigned to programs 1 and 2, since both GPUs are available. When a request arrives for program 3, one GPU is temporarily reassigned from program 1 to program 3 to measure how much the GPU accelerates it. The measurement shows that giving the GPU to program 3 rather than program 1 reduces processing time, so the GPU stays with program 3 and program 1 runs on the CPU in the meantime. When program 2 finishes, its GPU becomes free and is allocated to program 1 again. In this way, computing resources are allocated so that the programs complete as quickly as possible.
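The allocation walk-through above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Fujitsu's implementation: the `Program` class, the `allocate` function, the timing values, and the rule of ranking programs by measured acceleration rate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Program:
    name: str
    cpu_time: float  # hypothetical: seconds per step measured on the CPU
    gpu_time: float  # hypothetical: seconds per step measured on a GPU

    @property
    def acceleration(self) -> float:
        """How many times faster the program runs on a GPU than on the CPU."""
        return self.cpu_time / self.gpu_time


def allocate(programs: list[Program], num_gpus: int) -> dict[str, str]:
    """Give GPUs to the programs with the highest measured acceleration.

    Assumption: ranking by acceleration rate stands in for the prioritisation
    described in the article; the real policy is not published in detail.
    """
    ranked = sorted(programs, key=lambda p: p.acceleration, reverse=True)
    on_gpu = {p.name for p in ranked[:num_gpus]}
    return {p.name: "GPU" if p.name in on_gpu else "CPU" for p in programs}


# Illustrative numbers only; they are not taken from Fujitsu's measurements.
p1 = Program("program 1", cpu_time=10.0, gpu_time=5.0)   # 2x faster on a GPU
p2 = Program("program 2", cpu_time=10.0, gpu_time=2.0)   # 5x faster on a GPU
p3 = Program("program 3", cpu_time=10.0, gpu_time=1.25)  # 8x faster on a GPU

before = allocate([p1, p2], num_gpus=2)      # only programs 1 and 2 are running
during = allocate([p1, p2, p3], num_gpus=2)  # program 3 arrives and is measured
after = allocate([p1, p3], num_gpus=2)       # program 2 has finished
```

With these made-up numbers, program 1 is moved to the CPU while programs 2 and 3 hold the GPUs, and it returns to a GPU once program 2 finishes, mirroring the sequence in the figure.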

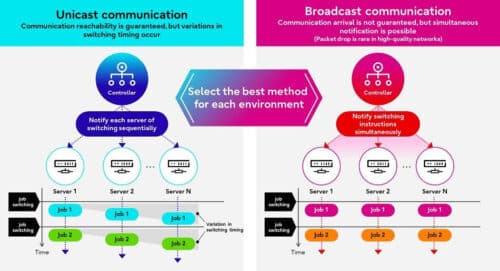

Unlike conventional methods, which use unicast communication to switch program execution on each server in turn, the HPC program-switching technology uses broadcast communication to switch program execution on all servers at once in real time. This reduces the interval between program switches, which affects program performance, from several seconds to 100 milliseconds in a 256-node HPC environment. Either broadcast or unicast can be chosen according to application requirements and network quality, weighing the performance gain against potential degradation caused by packet loss. The technology speeds up applications that require real-time performance in areas such as digital twins, generative AI, and materials and drug discovery, using HPC-like computing resources.
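The trade-off between broadcast and unicast described above can be illustrated with a rough cost model. Everything here is an assumption made for illustration: the function names, the per-message time, the retry timeout, and the recovery model (nodes that miss a broadcast are retried one by one after a timeout) are hypothetical and do not describe Fujitsu's protocol.

```python
def unicast_switch_time(nodes: int, per_message_s: float) -> float:
    """Switch commands are sent to each node in turn, so cost grows with node count."""
    return nodes * per_message_s


def broadcast_switch_time(
    nodes: int, per_message_s: float, loss_rate: float, retry_timeout_s: float
) -> float:
    """One broadcast reaches all nodes; nodes that missed it are retried by unicast.

    Hypothetical recovery model: if at least one node misses the broadcast,
    the sender waits one timeout and then resends to each missed node.
    """
    p_any_loss = 1.0 - (1.0 - loss_rate) ** nodes
    expected_missed = nodes * loss_rate
    return per_message_s + p_any_loss * retry_timeout_s + expected_missed * per_message_s


def choose_method(
    nodes: int, per_message_s: float, loss_rate: float, retry_timeout_s: float
) -> str:
    """Pick whichever method has the lower expected switching time under this model."""
    unicast = unicast_switch_time(nodes, per_message_s)
    broadcast = broadcast_switch_time(nodes, per_message_s, loss_rate, retry_timeout_s)
    return "broadcast" if broadcast < unicast else "unicast"


# Illustrative parameters only (10 ms per message); not measured values.
reliable_network = choose_method(256, 0.01, loss_rate=0.0, retry_timeout_s=1.0)
lossy_network = choose_method(256, 0.01, loss_rate=0.5, retry_timeout_s=5.0)
```

Under this toy model, a loss-free 256-node network favours broadcast, while a very lossy network with long retry timeouts can make sequential unicast the cheaper option, which is the kind of choice the article says can be tailored to network quality.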

The company says it intends to implement the CPU/GPU resource optimisation technology in its Fujitsu Kozuchi (code name) – AI Platform, which lets users quickly try out advanced AI technologies that require GPUs. The HPC optimisation technology will be applied to Fujitsu’s 40-qubit quantum computer simulator, which relies on collaborative computing across many nodes. Fujitsu will also explore applying the technology to its Computing as a Service HPC offering, which lets users develop and run applications for simulation, AI, and combinatorial optimisation problems, and to the Composable Disaggregated Infrastructure (CDI) architecture, which allows server hardware configurations to be changed. These efforts aim to foster a society where accessible, cost-effective, high-performance computing resources are readily available.