Humans and machines handle simple tasks in very different ways. That does not mean the two cannot work together, as shown by the following experiment, in which a robot learns to reproduce the hand movements a human makes when grasping an object.

Automated mechanical systems, also referred to as robots, can perform many complex tasks more efficiently than humans. But when it comes to an action as simple as holding objects of varying shape, size, and weight, robots, unlike humans, cannot take these differences into account. As a result, they apply a single, uniform force when grasping different objects, which also prevents a smooth human-to-robot object handover.

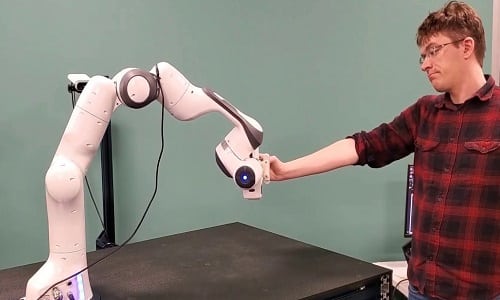

To address these issues, NVIDIA researchers at the Seattle AI Robotics Research Lab have developed a human-to-robot handover method in which the robot gradually develops a perception system that accurately identifies objects and executes hand movements accordingly.

Learning via Neural Networks

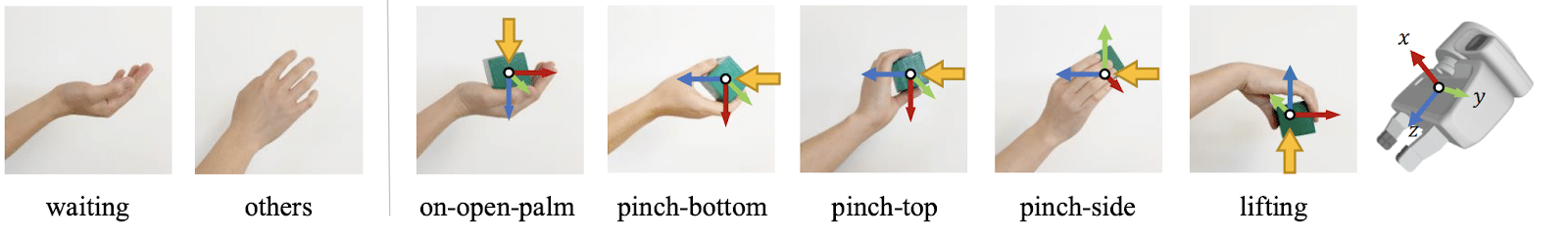

The research team first defined a set of hand grasp categories: on-open-palm, pinch-bottom, pinch-top, pinch-side, and lifting. These categories help the robot learn how a human handles a particular object.

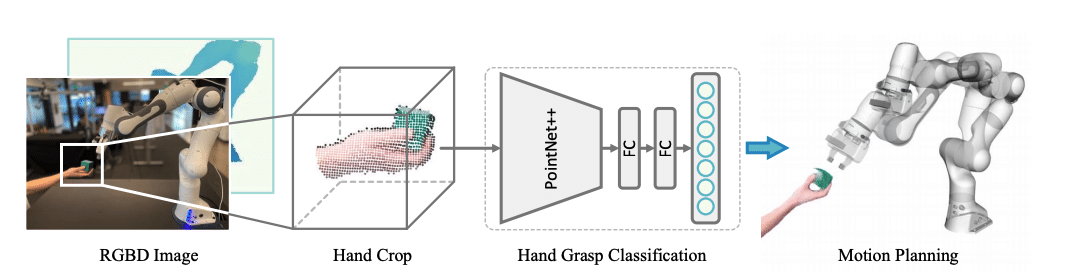

Then, with the help of a deep neural network, these sets were fed into a cloud system so that the robot could predict how a human is holding a given object and, from that, which hand grasping movement to select. The training dataset, which covers eight subjects with various hand shapes and hand poses, was captured with a Microsoft Azure Kinect RGBD camera.
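The prediction step can be pictured as a standard classification readout: the network produces one score per grasp category, and the robot acts on the most likely one. The snippet below is a minimal sketch under that assumption, not the researchers' code. The function names and the example scores are hypothetical; only the category names come from the article.

```python
import math

# Grasp categories named in the article.
GRASP_CATEGORIES = ["on-open-palm", "pinch-bottom", "pinch-top", "pinch-side", "lifting"]


def softmax(scores):
    """Convert raw network scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_grasp(scores):
    """Return the most likely grasp category and its probability.

    `scores` stands in for the output of a trained classifier
    (hypothetical here); it must hold one value per category.
    """
    if len(scores) != len(GRASP_CATEGORIES):
        raise ValueError("expected one score per grasp category")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GRASP_CATEGORIES[best], probs[best]


# Made-up scores for illustration only.
label, confidence = predict_grasp([0.2, 2.9, 0.1, -1.0, 0.4])
print(label, round(confidence, 2))
```

In a real system the confidence value could be used to decide whether the robot should wait for a clearer view of the hand before moving.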

Seamless Operation

Next, the researchers aligned the orientation of the robot’s grasp with that of a human’s grasp, implementing this with PointNet++, a neural network architecture designed for point-cloud data. The team also gave the robot canonical grasp directions, which made its motion and trajectory as natural as possible.
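One way to think about canonical grasp directions is as a lookup table: each human grasp category maps to a preferred approach direction for the robot, expressed relative to the human hand and then rotated into the robot's frame. The sketch below illustrates that idea only. The direction vectors, the single yaw angle, and the function name are assumptions made for the example and are not taken from the researchers' system.

```python
import math

# Hypothetical canonical approach directions (unit vectors in the hand frame).
# The values are illustrative, not the ones used by the researchers.
CANONICAL_DIRECTIONS = {
    "on-open-palm": (0.0, 0.0, -1.0),  # object rests on the palm: come down from above
    "pinch-bottom": (0.0, 0.0, -1.0),  # human holds the bottom: take the free top
    "pinch-top": (1.0, 0.0, 0.0),      # human holds the top: approach from the front
    "pinch-side": (0.0, 1.0, 0.0),     # human holds one side: approach the other side
    "lifting": (1.0, 0.0, 0.0),        # approach from the front
}


def approach_direction(category, hand_yaw_rad):
    """Rotate the canonical direction about the vertical axis by the hand's yaw.

    A full system would use the complete 3D hand pose; a single yaw angle
    keeps this example short.
    """
    x, y, z = CANONICAL_DIRECTIONS[category]
    c, s = math.cos(hand_yaw_rad), math.sin(hand_yaw_rad)
    return (c * x - s * y, s * x + c * y, z)


# A side pinch with the hand turned 90 degrees.
print(approach_direction("pinch-side", math.pi / 2))
```

Fixing one direction per category keeps the robot's approach predictable, which fits the article's point about natural-looking motion.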

Overall, the system consistently improved its grasp success rate while reducing total execution time, which demonstrates the efficacy and reliability of the method.

Applications

These results would greatly benefit warehouse robots and kitchen robots, helping them hand over objects fluently and thereby interact and coordinate better with humans.

Building on this success, the team plans to add further grasp types to the dataset so that the robot can learn and improve even more.
