The world is becoming digital, and semiconductors are the underlying technology powering this transformation. The need for exponentially more computing power is being met through innovations, new discoveries, and disruptions. But even with the increasing use of software-defined equipment, the importance of the underlying hardware cannot be ignored.

Traditional fixed-function application-specific integrated circuits (ASICs) hold back network flexibility and prevent the developer from deciding how the network will work. Switch equipment manufacturers have to hard-code functionality into the silicon, which results in generic fixed protocols that aren’t suited to every situation. As a result, whenever network administrators need to update their switch protocols, they have to replace the hardware.

“The significant jump in performance is due to amazing innovations. We are capable of moving electrons through the channel faster by using increased strain and more low-resistant materials. We are also delivering better energy control through novel high-density patterning techniques and some streamlined structures, and we’re going to be enabling improved power delivery and better routing with higher metal stacks,” says Nick McKeown, SVP/GM of the Network and Edge Group at Intel. Hence, it’s safe to say that semiconductor innovation and Moore’s law are alive.

Now, with technological advancement, programmable switch ASICs are available, and network owners can deploy new protocols as fast as they can develop them, simply by downloading them in the field through the software layer. As a result, network owners can update their switch functionality to keep pace with new cloud-based workloads without having to replace the hardware.

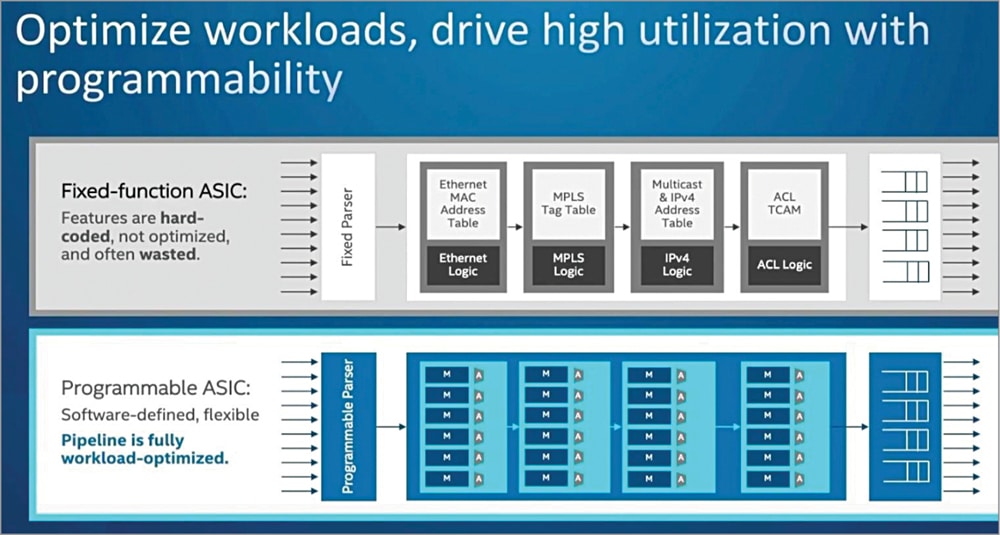

The diagram in Fig. 1 shows the difference between fixed-function ASICs and programmable ASICs. Intel’s Tofino, a programmable switch ASIC, uses the same feed-forward, multi-stage pipeline architecture as a fixed-function ASIC, but with Tofino, all the stages are fully programmable.

The flow of traffic through a fixed-function chip and a programmable chip may be the same, but when the chip is programmable, the parser, the deparser, and the pipeline stages can all be programmed by the user or the developer. Network architects have total control to decide what features, functions, and protocols are supported within their network, and it all runs at line rate with the same performance and cost.
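The parser, match-action stages, and deparser described above can be pictured with a small Python model. This is only a sketch under stated assumptions: the two-byte packet format, the `forward` action, and the `Stage` and `Pipeline` classes are hypothetical names invented for illustration, and a real Tofino pipeline is programmed in P4 rather than Python.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Headers = Dict[str, int]
Action = Callable[[Headers], None]


def parse(packet: bytes) -> Headers:
    """User-defined parser for a made-up 2-byte header: [dst, ttl]."""
    return {"dst": packet[0], "ttl": packet[1]}


def deparse(headers: Headers) -> bytes:
    """User-defined deparser: write the (possibly modified) fields back out."""
    return bytes([headers["dst"], headers["ttl"]])


def forward(port: int) -> Action:
    """Build an action that picks an egress port and decrements the TTL."""
    def action(headers: Headers) -> None:
        headers["egress_port"] = port
        headers["ttl"] = max(headers["ttl"] - 1, 0)
    return action


@dataclass
class Stage:
    """One match-action stage: exact match on one field, then run an action."""
    match_field: str
    table: Dict[int, Action] = field(default_factory=dict)

    def apply(self, headers: Headers) -> None:
        key = headers.get(self.match_field)
        action = self.table.get(key) if key is not None else None
        if action is not None:
            action(headers)


@dataclass
class Pipeline:
    """Feed-forward pipeline: parser, then each stage in order, then deparser."""
    parser: Callable[[bytes], Headers]
    stages: List[Stage]
    deparser: Callable[[Headers], bytes]

    def process(self, packet: bytes) -> Tuple[Optional[int], bytes]:
        headers = self.parser(packet)
        for stage in self.stages:
            stage.apply(headers)
        return headers.get("egress_port"), self.deparser(headers)


# "Programming" the pipeline means supplying the parser, tables, and deparser.
pipeline = Pipeline(parse, [Stage("dst", {7: forward(3)})], deparse)

if __name__ == "__main__":
    print(pipeline.process(bytes([7, 64])))  # destination with a table entry
    print(pipeline.process(bytes([9, 64])))  # no table entry: packet unchanged
```

In this toy model, supporting a new destination or a new header field means changing the parser, the tables, or the deparser, while the `Pipeline` class itself (standing in for the silicon) stays untouched.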

The four superpowers that are driving unprecedented semiconductor demand across the industry

Transformation in network and edge platform

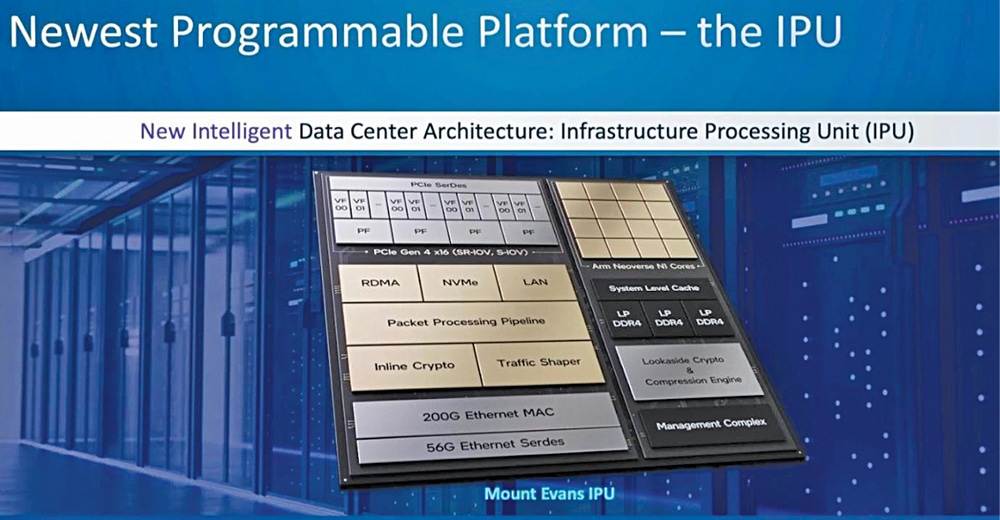

The newest programmable platform to replace fixed-function solutions for network and edge workloads is the infrastructure processing unit, or IPU. It is aimed at those who operate the world’s largest cloud data centres, the cloud service providers.

In a cloud data centre, CPUs are used for two different purposes. One set of CPUs runs the software infrastructure that keeps the data centre running, and a second, larger set runs the tenants’ workloads. It is extremely important that the tenant workloads, over which the cloud providers have no control, don’t disrupt either the infrastructure itself or the other tenants. At present, it is hard to protect one from the other if they both run on the same CPU cores.

The job of the IPU is to run the infrastructure workloads on a separate, secure, and isolated set of CPU cores. The IPU is a versatile, programmable platform in its own right. Not only does it have an array of CPU cores, but it also has a packet processing pipeline that, like Tofino, is programmable using the P4 language, as well as a variety of accelerators, such as encryption and compression, which developers can also program for themselves.

It is important for this new class of computing devices to be open and programmable, as this helps companies keep improving and evolving their data centres without being locked into a single solution. In fact, by making the system open, the IPDK open development environment helps grow the ecosystem for the entire industry. This programming framework lets you write a program that describes the functionality and characteristics of a pipeline and compile it to whatever target is appropriate for the application.

For example, if you’re stringing together a number of workloads, you can target a host CPU via DPDK. This flexibility and uniformity are made possible by compiler-friendly programmable silicon: chips designed with the programmer in mind, which allow developers to write their own P4 programs to determine the forwarding behaviour that they need. This comes together under a common and open software framework that exposes open interfaces and attracts developers to invest in delivering platform solutions for a variety of verticals.

This can be deployed on whichever silicon platform is best suited for the particular vertical and use cases, whether it’s in the data centre, the network, or the edge. Across vertical markets, customers are demanding the platforms, the frameworks, and the developer tools that combine compute, storage, and networking to empower them to consolidate workloads, bring data together with applications, and perform real-time analytics.
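The idea of describing a pipeline once and compiling it to whichever target suits the deployment can be sketched in a few lines of Python. Everything here is an assumption made for illustration: `PipelineSpec`, the two backend functions, and the target names `host_cpu` and `switch_asic` are hypothetical and do not correspond to the IPDK, DPDK, or P4 compiler interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class PipelineSpec:
    """Target-independent description: an ordered list of table names."""
    name: str
    tables: Tuple[str, ...]


def compile_for_host_cpu(spec: PipelineSpec) -> List[str]:
    """Hypothetical software backend: one lookup call per table."""
    return [f"call lookup({table})" for table in spec.tables]


def compile_for_switch_asic(spec: PipelineSpec) -> List[str]:
    """Hypothetical hardware backend: pin each table to a numbered stage."""
    return [f"stage {i}: {table}" for i, table in enumerate(spec.tables)]


# Registry of available targets; adding a target does not change the spec.
BACKENDS: Dict[str, Callable[[PipelineSpec], List[str]]] = {
    "host_cpu": compile_for_host_cpu,
    "switch_asic": compile_for_switch_asic,
}


def compile_spec(spec: PipelineSpec, target: str) -> List[str]:
    """Compile the same description for the chosen target."""
    if target not in BACKENDS:
        raise ValueError(f"unknown target: {target!r}")
    return BACKENDS[target](spec)


spec = PipelineSpec("edge_router", ("l2_forward", "acl"))

if __name__ == "__main__":
    for target in BACKENDS:
        print(target, compile_spec(spec, target))
```

The design point is that the description (`spec`) carries no target-specific detail, so the choice of silicon becomes a deployment decision rather than a rewrite.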

The interesting opportunity is to deliver programmable networks that span from the cloud out to the edge, where the programmable elements work together to improve network performance, latency, and reliability from end to end. This requires a deep understanding of the workloads that our customers are seeking to deploy and optimise, and an ability to distil that implementation down to its essence, so it can be used for a variety of different workloads.

The more the silicon designers understand the workloads and the use cases, the more they can implement the design with the software developer in mind. The more they design the silicon as a compiler target, the more likely they are to deliver an optimised implementation that captures that essence and can really unlock the shift from fixed-function systems to software-defined systems.

Necessity of data-centre platform for specific 5G implementations

From 5G base stations and data centre switches to factory control systems and the systems in retail outlets, equipment built from fixed-function hardware is being replaced by systems that developers can program. The various functions and workloads are being lifted up into software, giving software developers the liberty to define them. In this way, software developers are able to continuously evolve, improve, and tailor systems to gain more control, flexibility, and security in their networks and edge systems.

The industry is racing to analyse, process, and store data at the edge, where these new services are being delivered. This could be edge IoT applications running on a customer’s premises or in a colo (co-location) facility, or networking workloads running in a telecom data centre or an internet exchange point.

5G delivers flexibility via network functions running in the cloud, and it offers multiple deployment models that range from public networks to private 5G networks for enterprises. Enterprises can explore these deployment options to access connectivity and deliver use cases with the level of control and investment that they choose for themselves.

For example, public and private networks can work together to deliver connectivity directly to end users. Consider a smart sports stadium. The owners of the stadium can operate an on-premises network for dedicated connectivity and to control the operations of the stadium. At the same time, public networks provide connectivity to the fans inside the stadium.

Enterprises can own a high-capacity mobile network of their own, one that is reliable, has lower latency, and can support time-sensitive applications. Enterprises across many different industries are looking to take advantage of new technologies like AI/machine learning, advanced robotics, augmented reality, and virtual reality. 5G private networks will allow enterprises to develop and deploy new applications, including real-time control and time-sensitive low-latency applications.

The market opportunity has two main components. One is the legacy fixed-function network market, which we expect to hold steady as we shift the market to run on server-based solutions. The other is the network and edge infrastructure, where we expect to see enormous growth across cloud data centres, core networks, and edge computing segments.

Transition from fixed-function to fully-programmable

Programmable GPUs, built as sophisticated SoCs, have replaced the huge fixed-function graphics cards that were once used in workstations. These new software-based implementations enable developers to develop and deploy new algorithms in the field and to deliver a variety of new capabilities in software.

Platforms are being transformed and horizontalised, and software is being disaggregated at the system level. Big cloud providers began building their own switches based on merchant silicon. For example, a merchant switch ASIC was paired with Linux, and the resulting switch replaced proprietary boxes as the applications required.

Today, all of the top 20 cloud service providers build their own networking equipment and write the software themselves. This reduces cost and gives them much more control, and they have an army of software engineers to do it. More than half of all the core network workloads have already moved from proprietary fixed-function boxes into virtual workloads running on standard servers.

The same shift has steadily begun in the radio access network (RAN), with the transition from ASIC-based RAN boxes to virtual RAN, or vRAN, running in software. Equipment vendors such as Ericsson and Nokia are moving from fixed silicon to CPUs running workloads in software. All early service provider vRAN deployments are running on Xeon and FlexRAN.

The uptake of vRAN is expected to grow from a relatively small percentage in 2021 to nearly a quarter of all deployments by 2025. At the same time, a lot of network software is becoming cloud native. Many people questioned whether you could really build a 5G network at the very highest performance from a software infrastructure. They felt it would run slower and consume more power, but it turns out that they were wrong.

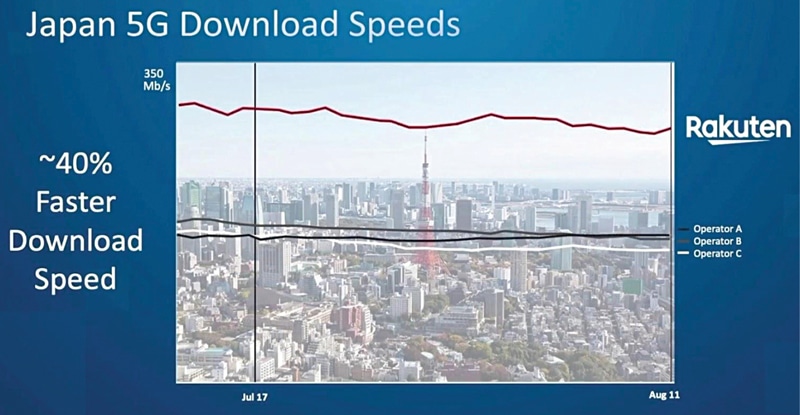

Rakuten’s recent study measured how quickly users could download data on different 5G networks. In Japan, right in downtown Tokyo at the busiest crosswalk in the world, Shibuya Crossing, the study found that Rakuten’s vRAN network had download speeds 40% faster than any other major network. This was achieved with a virtualised network running in software. No one would have predicted that the biggest mobile networks in the world would become software-defined.

The last piece of the network, the RAN, is moving towards software, driven by the need for flexibility, programmability, and versatility, and by the need to make these systems more reliable and more secure. Only those who operate networks at a huge scale understand how to achieve this, and only those who build and operate that infrastructure can quickly introduce new capabilities and differentiate themselves from their competitors.

The shift from fixed-function hardware to software doesn’t stop at the network. It is also under way in factory automation systems, where a plethora of small embedded microcontrollers is being replaced by sensors and actuators that are centrally controlled by an open computing platform, with inference applications running on top of Kubernetes. Similarly, in warehouses, in retail stores, and even in cars, computing is being centralised, which in the last case creates software-defined cars.

The four key takeaways from this article

5G is another interesting example, as it is intertwined across this entire landscape. 5G, and 6G in the future, promise to unlock a wave of new applications: smart cities, new factory automation, and pretty much autonomous everything. Because of its high bandwidth, low latency, deterministic behaviour, and strong security model, 5G is an ideal platform for landing these new applications.

Increasing relevance of silicon with demand for software

All software runs on hardware. The silicon actually defines the performance, the power, and the ability to write programs and then compile them down to a target. Hence, contrary to the assumption that silicon is becoming less relevant, the fact remains that, with increased performance and scale that’s being driven by technologies like 5G and edge inference, silicon will become more relevant over time.

We need silicon that’s more performant than ever. Various acceleration technologies for security, storage, and inference are going to be critical, and this is where we are going to continue to invest.

The author, Supriya Mangalpalli, is an Editorial Consultant at EFY