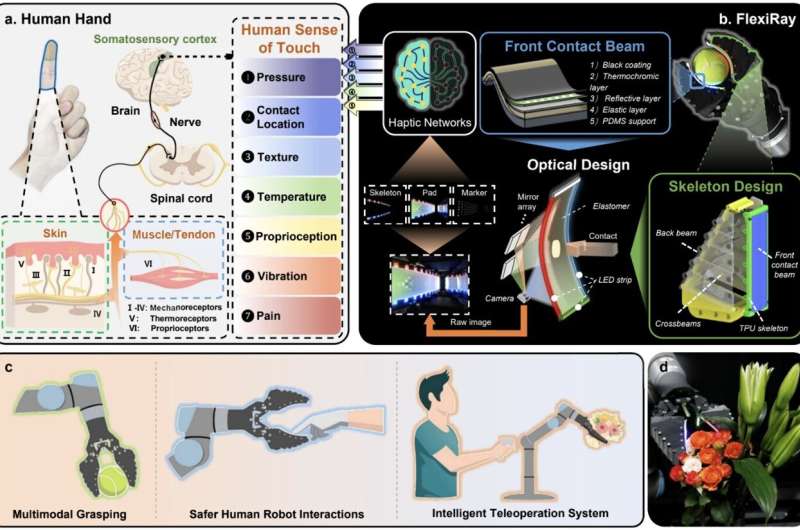

Zhejiang University researchers build a flexible robotic hand that literally “sees around corners,” combining deep learning and optics to deliver rich multimodal perception and human-like manipulation.

A new soft robotic hand prototype, dubbed FlexiRay, has demonstrated human-like tactile and visual perception by converting structural deformation into meaningful sensory data, addressing a core limitation in compliant robotics. The work could accelerate integration of soft robots into electronics assembly, delicate object handling, and human-robot interaction tasks.

Robots traditionally rely on rigid visual-tactile sensors and camera systems embedded in stiff bodies to detect contact and object properties. Although effective, these sensors constrain flexibility, making it difficult for robots to grasp fragile or irregularly shaped items without damage. FlexiRay flips this paradigm by using soft materials and tailored optics to “look around corners” created by bending and deformation, preserving perception even during significant finger flexing.

At the heart of FlexiRay is a bio-inspired structure based on the Fin Ray Effect, a design that passively wraps around objects much like a fish fin. Inside each soft finger, a multi-mirror optical system paired with a single camera dynamically redirects its field of view in response to deformation, ensuring continuous sensing without obstruction. This innovation turns passive bending, a traditional sensory blind spot, into a mechanism for enhanced perception.
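To make the mirror idea concrete, here is a minimal 2D sketch of the general optical principle: when a flat mirror tilts by some angle, the reflected ray swings by twice that angle. This is not FlexiRay's actual optical design. The mirror angles, the single-mirror setup, and the helper names (`reflect`, `mirror_normal`, `ray_angle_deg`) are assumptions chosen purely for illustration.

```python
import math


def reflect(direction, normal):
    """Reflect a 2D ray direction off a flat mirror: r = d - 2 (d . n) n."""
    nx, ny = normal
    length = math.hypot(nx, ny)
    nx, ny = nx / length, ny / length  # normalise the mirror normal
    dx, dy = direction
    dot = dx * nx + dy * ny
    return (dx - 2 * dot * nx, dy - 2 * dot * ny)


def mirror_normal(tilt_deg):
    """Unit normal of a mirror whose surface is tilted tilt_deg from the x-axis."""
    t = math.radians(tilt_deg)
    return (-math.sin(t), math.cos(t))


def ray_angle_deg(direction):
    """Heading of a ray in degrees, measured from the x-axis."""
    return math.degrees(math.atan2(direction[1], direction[0]))


# Hypothetical setup: a camera ray travels along +x and hits one mirror.
camera_ray = (1.0, 0.0)

# Compare the mirror at rest (45 degrees) with the mirror after the finger
# bends and tilts it by a further 5 degrees (illustrative values only).
for tilt in (45.0, 50.0):
    outgoing = reflect(camera_ray, mirror_normal(tilt))
    print(f"mirror tilt {tilt:.0f} deg -> view direction {ray_angle_deg(outgoing):.1f} deg")
```

In this toy setup, a 5 degree change in mirror tilt moves the viewing direction by 10 degrees, which gives a sense of how mirrors attached to a bending structure can steer a fixed camera's view as the finger deforms.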

The prototype integrates machine vision and deep learning to translate deformation patterns into rich tactile feedback. As a result, the robotic hand can detect force, contact location, texture, and even temperature with high coverage: over 90% effective sensing during large deformations in early tests. The approach captures multimodal cues that were previously difficult to achieve in flexible robot bodies without sacrificing safety or adaptability.

FlexiRay’s flexible “skin” combines thermochromic and reflective layers, enhancing data capture across modalities. Researchers believe this could make soft robots safer and more capable in environments involving delicate objects, from consumer electronics components to food products.

Looking ahead, the team plans to extend FlexiRay into multi-fingered hands for more complex tasks and to merge the hardware with imitation learning frameworks, enabling robots to learn dexterity directly from human demonstrations using the rich sensory data the system provides. By leveraging deformation rather than avoiding it, FlexiRay underscores a shift in robotics design in which compliance and perception co-evolve, bringing soft robots closer to real-world utility in nuanced manipulation tasks.