Some time ago there were some very interesting product releases from the likes of Google and Microsoft. While Google announced a new assistant in Google Allo, which is quite friendly, Microsoft announced a puck-like device for artists working on Surface Pro devices. Google’s chat assistant on my phone read my personal messages and gave suggestions in reply.

Is this just a matter of word association, or an invasion of privacy? However, my intrigue began when a friend sent an image of his presence at a club. Allo showed some automatic reply options including, “Have a good time!” To me, it seemed like a fun and convenient way to reduce the hassle of typing out a full message.

Analysing images is a serious task

Photography is one area that employs image analytics heavily. Dr Joseph Reger, chief technology officer, Fujitsu EMEIA, explains, “Modern cameras identify faces to make focusing easier, or these can identify smiling faces or faces of pre-selected people.” Image analysis starts by first identifying edges and moves on to full shapes. However, making changes in certain key areas in the images has successfully fooled such systems. More on that later, first let us look at how a computer recognises and processes images.

Image analysis ranges from finding basic shapes, detecting edges, removing noise and counting objects, to finding anomalies in the routine. Security and surveillance are incomplete without an intelligent system that can detect pedestrians and vehicles, and make a difference. So how does image analytics work? How was Google’s new assistant able to analyse images and suggest possible replies as soon as I received the image?

Uncanny Vision, a startup focused on image analysis, helps clarify how it happens. Their system identifies objects in images using an analytical system powered by deep learning and smart algorithms. The deep learning framework, Caffe, powers this system. The framework managed by Berkeley Vision and Learning Center (BVLC) is capable of processing more than 60 million images in a single day using just one NVIDIA K40 GPU. On an average, this amounts to about 695 images per second, whereas we only need 17 to make a series of images into a video, with HD video going up to 60. Basically, this system can analyse about 11 HD videos at once.

Deep learning is affecting image analytics.

Deep learning is a branch of machine learning based on a set of algorithms that attempt to model high-level abstractions in data by using a deep graph with multiple processing layers, linear and non-linear. It is a representational learning approach ideally suited for image analysis challenges ranging from security and daily-purpose applications to medical problems.

Object detection is considered to be the most basic application of computer vision. Enhancements on top of object detection result in other developments including anomaly mapping and error detection in real-time imaging. However, deep learning is still an expanding topic. Dr Reger adds, “It is still unclear what the optimal depth of a deep learning net should be, or what the ideal component functions in the targeted approximation might be.”

Upgrading neural networks

It is no doubt that neural networks can identify and recognise patterns and do a lot of other interesting stuff. However, when we talk about real-time image analysis from multiple angles and lack of content in the frame, going beyond the capabilities of neural networks is required. One of the most stated advancement in this regard has been convolutional neural networks (CNNs). Self-driving cars, auto-tagging of friends in pictures, facial-security features, gesture recognition and automatic number plate recognition are some of the areas that benefit from CNNs.

Broadly, a CNN consists of three steps that can be divided into more steps. Starting with convolution, we go back to our engineering days, when we convolved a filter with input function to get some output. Padding was then added to make this result similar in size to the original image. You know the basics.

Pooling is done to reduce the size of data, and this makes it easier to sift through. Then, the networks use fully-connected layers where each pixel is considered as a separate neuron similar to a regular neural network.

Batch normalisation was another step in the process, which has become outdated with use. Better results over CNNs result in similar implementation as well.

Where we have seen CNN before.

Video analysis, image recognition and drug discovery are some of the common uses of CNNs around us. However, the one that brought CNNs to light would be AlphaGo programme by Google DeepMind. The Chinese game of Go is a complex board game requiring intuition, creative and strategic thinking. It has been a major subject in artificial intelligence (AI) research. In March 2016, Google’s AlphaGo beat Lee Sedol, a South Korean professional Go player of ‘9 dan’ rank by 4-1. And here we thought recognising and following a person in a crowd was amazing for a neural network.

Lesser-known image analytics

Image analysis can also be done using many other techniques. These can be as simple as a binary tree with a simple true-false decision, or as complex as structured prediction.

Decision tree, for example, is used by Microsoft Structured Query Language (SQL) server. Never thought we would be using analytics in SQL, did we? But when you think about how data is fetched in SQL, it makes a lot more sense.

Decision tree is a predictive modelling approach used in statistics, data mining and machine learning. An analogy for easier understanding would be the binary tree. Some of the popular implementations are IBM SPSS Modeler, RapidMiner, Microsoft SQL Server, MATLAB and the programming language R, among others.

In R, decision tree uses a complexity parameter to measure trade-offs between model complexity and accuracy on training set. A smaller complexity parameter leads to a bigger tree, and vice versa. If you have a smaller tree, it means that the model did not capture underlying trends properly, and the tree needs to be re-examined.

Divide and conquer to analyse.

Cluster analysis, on the other hand, categorises objects in test data into different groups or clusters. Test data can be grouped into clusters based on any number of parameters, resulting in multiple algorithms in cluster analysis. Clusters are formed based on your algorithm, making it a multi-objective optimisation problem.

Formation of clusters, however, is not successful with the current data analysis needs. With the introduction of the Internet of Things (IoT), data that needs to be analysed has reached much higher volume. Many methods fail due to the curse of dimensionality, resulting in many parameters being left out while optimising the algorithm.

If you can remove some parameters in clustering, removing it altogether is not a stretch. Dimension reduction is the process of reducing the number of random variables being considered. The set of principal variables is then processed through feature selection and extraction. Data is classified by filters based on the features to be added or removed while building the model based on prediction errors.

Feature extraction then transforms this high-dimensional data into fewer dimensions through principal component analysis, among other techniques. Application areas include neuroscience and searching on live video streams, among others, as removal of multi-collinearity improves the learning system.

Odd man out algorithms.

Used mostly in data mining, anomaly detection is another interesting algorithm. Anomalies, also referred to as outliers, novelties, noise, deviations and exceptions, can be a fancier version of the odd man out. Typical anomalies include bank frauds, structural defects, medical problems or errors in text. Image analysis by Uncanny Vision is an example. The system analyses the images through a CCTV and points out anomalies, with basics going down to a person falling.

Proper hardware support is also necessary

Neural networks have existed since the early 1990s, but initially success was very limited due to the restricted availability of hardware and inadequate learning methods. “Last decade has been a welcome change in this regard,” says Dr Reger. He adds, “Advanced learning algorithms and massive 16-bit FP performance in modern hardware have turned neural networks into an effective technology for image analysis.”

Movidius Myriad 2, Eyeriss and Microsoft HoloLens are some vision microprocessors being used in today’s vision-processing systems. These may include direct interfaces to take data from cameras with a greater emphasis on on-chip dataflow. These are different than regular video-processing units as these are suited more to running machine vision algorithms such as CNNs and scale-invariant feature transform (SIFT). Some of the application areas for vision processing include robotics, the IoT, digital cameras for virtual reality and augmented reality, and smartcameras.

Fooling an AI

Reports released last year suggested that changing some pixels in a photo of an elephant could fool a neural net into thinking it is a car. AI systems have committed some disturbing tasks as well. April Taylor from iTech Post has explained in an article how her dogs were categorised as horses.

Twitter user, jackyalcine, has also reported some funny business with Google AI. This has lead to Google’s chief social architect, Yonatan Zunger, releasing an apology and attempts to rectify the error, resulting in the removal of the tag altogether. The explanation behind the error came to light once it was released for public.

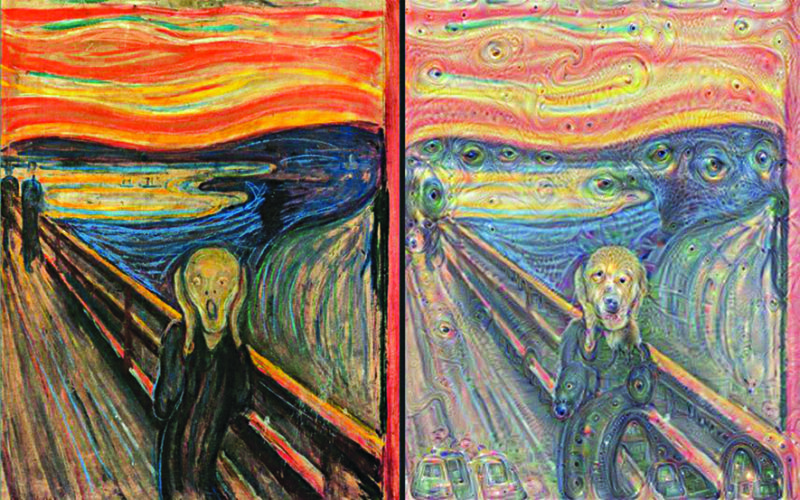

The problem with Google AI was training using animal images. Training an AI is a process to determine a large set of parameters that calibrates a function that maps image data to content. Results may vary based on the images used in training. Some sample images tested by enthusiasts gave some very creepy results. Even a relatively simple neural network over-interpreted an image, resulting in trippy images.

Engineers at Google’s research lab decided to check on patterns the system recognised and set up a system that tweaks the patterns to exaggerate the result. An image passed through enough times resulted in the image changing radically, and basically tripping some classy paintings.

How it looks

“After logic programming with languages like Prolog and expert systems, the present phase of advanced neural networks constitutes the third wave of AI,” says Dr Reger. But we are still decades away from a system that can get anywhere close to a realistic one. This brilliance of our human body is the reason why researchers have been trying to break the enigma of computer vision by analysing the visual mechanics of human beings or other animals.

Background clutter, hidden parts of images, difference in illumination and variations in viewpoint are some areas that require focus in development before we can move towards developing Jarvis. According to Dr Reger, “Neural networks in general and image analysis in particular will remain some of the most interesting and important topics in cutting-edge information technology in years to come.”