What is neuromorphic computing? What are its applications? And what is happening in India on this front? We were fortunate to connect with Dr Shubham Sahay, Assistant Professor at IIT Kanpur, who specialises in neuromorphic computing and hardware security. Here are some extracts from his interaction with Aaryaa Padhyegurjar from EFY.

Q. In layman’s terms, how do you describe neuromorphic computing?

A. Let me give you a brief example. The most advanced machines that you see now are CPUs and GPUs in a server room. In these computing systems, memory and processing are two separate entities. Every time you process any data, you must send it back and forth between these two units. That transmission takes a lot of energy and creates a lot of delay. In the human brain, by contrast, processing and memory are co-located; both happen at the same time in the same place. For instance, as you’re listening to a conversation, you’re able to process it and, at the same time, some part of it is going into your memory, so you’re able to recall something from the conversation later. You encounter different types of smells in your surroundings, but you’re able to identify which is coming from which place. That is because you have such an efficient processing engine: your brain. In neuromorphic computing, you take inspiration from the principles of the brain and try to mimic them in hardware, utilising knowledge from nanoelectronics and VLSI.

In semiconductor electronics, information is carried by electrons, which are fundamental particles that travel very fast. But in your brain, it is the calcium ions that carry the information from one neuron to another via synaptic clefts. For ions to flow, you must create a concentration difference, which makes it a very slow process. Yet even with such a slow process, the brain performs everything efficiently, in real time, and with a very small amount of energy. This is possible only because of the massive parallelism that our brain offers; computations are performed in parallel.

Even with its lower operating speed, neuromorphic computing is superior to what we’re currently using in our systems, and that’s only because of its inherent architecture.

Q. So how do we accomplish this?

A. We need to realise something like the processing elements of the brain in hardware, and memristors are among the potential candidates (it would not be an exaggeration to call them the most promising candidates) for realising processing entities such as neurons and synapses. Memristors can mimic synaptic elements. Apart from memristors, there are also memtransistors, which are three-terminal devices such as ferroelectric FETs, flash memories, etc. Nowadays, all sorts of exotic materials are also coming into the picture: various devices showing neuron-like or synapse-like properties are being made on organic substrates and even flexible substrates. But the most promising candidate, considering the present technical know-how, is the memristor, because it can be integrated with present technology as well.

In theory, memristors have existed since the early 1970s, when a professor at UC Berkeley (Prof. Leon O. Chua) postulated this missing element: the fourth fundamental circuit element, apart from resistors, inductors, and capacitors. Many people then proposed theoretical models for a memristor. My postdoc advisor Prof. Dmitri Strukov was among the people who invented memristors. He fabricated a memristor and showed that it exhibits the properties (such as plasticity, the STDP learning rule, etc) that could mimic processing elements such as synapses and neurons in the human brain and could be harnessed for neuromorphic computing.
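To make the STDP (spike-timing-dependent plasticity) rule mentioned above a little more concrete, here is a minimal sketch of the textbook pair-based form: a synapse is strengthened when the pre-synaptic spike arrives shortly before the post-synaptic one, and weakened in the opposite case, with the effect fading exponentially as the spikes move apart in time. This is a generic illustration, not the device model from the interview; the function names and all constants (`A_PLUS`, `A_MINUS`, `TAU_PLUS`, `TAU_MINUS`) are assumptions chosen for readability.

```python
import math

# Illustrative constants only (assumptions, not measured device parameters).
A_PLUS = 0.05     # largest possible weight increase
A_MINUS = 0.055   # largest possible weight decrease
TAU_PLUS = 20.0   # decay time constant for potentiation, in ms
TAU_MINUS = 20.0  # decay time constant for depression, in ms


def stdp_delta_w(t_pre, t_post):
    """Weight change for one pre/post spike pair (spike times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: strengthen the synapse.
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:
        # Post fired before pre: weaken the synapse.
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0


def update_weight(w, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the STDP change and keep the weight within [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta_w(t_pre, t_post)))
```

In a memristive synapse, the weight would roughly correspond to the device conductance, and the clipping in `update_weight` stands in for the finite conductance range of a physical device.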

Q. You worked on a neuromorphic chip at UCSB just two years ago. Could you elaborate on your experience there and what your team worked on?

A. The whole world is going through an artificial intelligence revolution, and neural networks are the driving force behind it. Neural networks exist in different flavours (artificial neural networks, SNNs, DNNs, CNNs, etc), each efficient for different applications. However, the fundamental operation in all neural networks is vector-by-matrix multiplication, which is built from multiply-accumulate operations. If we can accelerate vector-by-matrix multiplication and execute it in an energy-efficient way, then we can realise ultra-low-power hardware implementations of all neural networks. This is something that our group at UCSB realised, and we developed different circuits and systems exploiting emerging non-volatile memories to perform vector-by-matrix multiplication efficiently.
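As a rough illustration of why memory arrays suit this operation, consider an idealised crossbar in which each memory cell stores a conductance. Applying input voltages to the rows produces, on each column, a current equal to the sum of voltage times conductance (Ohm's law for each cell, Kirchhoff's current law for each column), which is exactly a vector-by-matrix multiplication. The sketch below models only this ideal behaviour in software; the function name `crossbar_vmm` and the numeric values are hypothetical, and it does not represent the actual UCSB circuits, which the interview does not describe.

```python
def crossbar_vmm(voltages, conductances):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G_ij.

    voltages:     one input voltage per row (volts)
    conductances: matrix with one row per input and one column per output (siemens)
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # One multiply-accumulate step per memory cell.
            currents[j] += v * conductances[i][j]
    return currents


# Hypothetical example: 3 inputs, 2 outputs.
voltages = [0.1, 0.2, 0.0]
conductances = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
currents = crossbar_vmm(voltages, conductances)
```

In software, the nested loop performs the multiply-accumulate steps one after another. In an analogue crossbar, all cells contribute their currents at the same time, and the result is read where the weights are stored, which is the source of the energy and latency advantage discussed here.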

We utilised two kinds of non-volatile memories that were technologically advanced. One of them was flash memory. Flash memory is ubiquitous nowadays, in everything from memory cards and USB sticks to cloud storage. In almost all devices in the internet of things (IoT) ecosystem, you’ll find flash memory. It is already technologically mature. So, if these devices could be used for neuromorphic computing, if they could process information themselves, it would be one of the milestones in realising an ultra-energy-efficient green society. Currently, flash memories can store data, but no conventional circuit or architecture exists with which you can make them process it.

Another advantage of flash devices is that they are very dense. You can just imagine the density by looking at this scenario: In 2010, when I was purchasing my first phone, the internal memory was of the order of a few MB. And now, if you go for any smartphone, the internal memory (which is again a flash memory) is at least 64 or 128GB. The density has increased by more than a thousand times. So, if we can introduce processing power to flash, we can enable all smart devices to compute in-situ.

I also worked with oxide-based resistive RAMs, or memristors. We found that they were even more promising with respect to energy efficiency. They will probably be able to achieve the efficiency levels of the human brain for certain applications soon. We were collaborating with Applied Materials and Philips, who agreed to fabricate those chips for us.

Q. You teach a class on neuromorphic computing at IIT Kanpur, and you also have a research group called NeuroCHaSe. How has the response of students in your class been?

A. I introduced this course at IIT Kanpur immediately after I joined (one year ago). Although it was a PG-level course, I saw great participation from the UGs. In general, in PG courses, there are around 15-20 students. But in this course, there were 55 students, out of which around 20 students were from B.Tech. That was a very encouraging response and throughout the course they were very interactive. The huge B.Tech participation in my course bears testimony to the fact that undergrads are really interested in this topic. I believe that this could be introduced as one of the core courses in the UG curriculum in the future.

During the course, I introduced some principles of neurobiology—how information is processed in the brain for a specific application like vision. Then I discussed how it can be mimicked on hardware utilising emerging non-volatile memories. We discussed all the other candidates that could be used as neural processing elements, apart from memristors—flash memories, magnetic memory devices, SRAMs, DRAMs, phase change memories, etc. I also talked about all memory technologies that exist right now and evaluated their potential for neuromorphic computing. Moreover, based on the students’ feedback for this course, I got a commendation letter from the director for excellence in teaching.

Our NeuroCHaSe research group is also actively working on the development of neuromorphic hardware platforms and on ensuring their security against adversarial attacks. We are also going to tape out (fabricate) a 3D neuromorphic computing IC soon. Stay tuned for the news!

Q. Apart from IIT Kanpur, what opportunities exist in India? What does the future look like for Indians?

A. As of now, knowledge dissemination and expertise in this area are limited to eminent institutes such as the IITs and IISc, but with government initiatives such as the India Semiconductor Mission (ISM) and NITI Aayog’s national strategy for artificial intelligence (#AIforall), this field should get a major boost. As for opportunities in the industry, several MNCs, such as Micron and SanDisk (Western Digital), have memory divisions in India that develop the memory elements which will be used for neuromorphic computing.

There are also many startups working on the development of neuromorphic ICs. One of them is Ceremorphic India Pvt Ltd, which is working on SRAM-based neuromorphic computing systems. With the establishment of ISM, I expect more companies to eventually start their neuromorphic teams in India.

Q. How will it affect normal people and the entire B2B marketplace? Or do we still have time for neuromorphic chips to come to the market?

A. A lot of companies are already fabricating these chips. Almost all companies have a dedicated team working on neuromorphic computing. These chips are already present in some products as well. If you look at Apple’s latest iPhone, they have a chip called M1, which is an AI processor. This is not something that is far off into the future.

In terms of its impact on common people, just consider the field of healthcare. You need to get a lot of data from the patients for the development of personalised medicine, genome sequencing, etc. While the current sensor technology is efficient in extracting such data, processing it in real time is the bottleneck. Neuromorphic computing would enable processing capabilities within the sensors themselves. Therefore, in the future, we may expect significant developments in critical fields like personalised medicine, genome sequencing, etc, fuelled by the massive computing power of neuromorphic hardware.

Not just the healthcare sector, even for drones or warfare systems, the major problem is their processing capability. Their camera captures good quality images but extracting information from the images in real time is the bottleneck. Drones are connected to Wi-Fi and send images to the base station. The base station processes the data and sends the signals back. This is a power-hungry process and can be intercepted too. If the drone itself has the capacity to process the data, it can take immediate action. Just imagine how it can transform the world.

Also, we use all sorts of applications like Alexa, Siri, etc, but they do not work without Wi-Fi. The main problem with these systems is that their processing power is limited. Whenever we give them any input, they just transfer it to the server, where the processing happens. The output comes back from the server to the device, and the device simply relays it to us. If you can give such processing power to all the energy-constrained smart devices around you, communication between the server and the device is not needed. In such a scenario, we not only make these devices compute independently but also reduce the cloud dependence, which is a major source of security vulnerabilities and adversarial attacks.

Even in space, rovers capture images and send them back to the earth station for processing. If an energy-efficient processing system is installed there, we’ll have Mars rovers and Moon rovers driving on their own. Autonomous driving is not fiction anymore. It just requires an energy-efficient processor to do that, and that is what neuromorphic computing is all about.

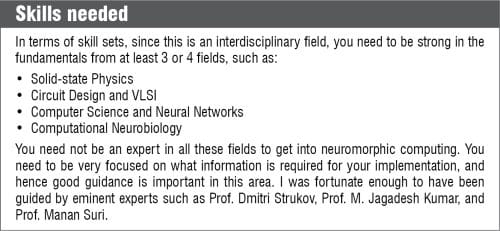

Dr Shubham Sahay with a few members of NeuroCHaSe Research Group on the IIT Kanpur campus