A new AI-driven light-field technique breaks long-standing limits in autostereoscopic displays, bringing wide-angle, glasses-free 3D closer to desktop deployment.

A research team in Shanghai has unveiled an autostereoscopic display system that delivers full-parallax, glasses-free 3D on a desktop-scale screen, an achievement long considered out of reach due to the constraints of optical physics. The prototype, detailed in Nature, introduces an AI-optimized architecture that sidesteps the traditional Space-Bandwidth Product (SBP) trade-off, the rule that has historically forced developers to choose between a large image size and a wide viewing zone.

SBP is the primary bottleneck for consumer-grade naked-eye 3D. It limits how many light rays a display can direct across space while maintaining high fidelity as a viewer shifts position. Earlier attempts to enlarge both field of view and image size simultaneously have consistently hit this physical ceiling. The new system, dubbed EyeReal in the study, breaks with previous approaches by reallocating, rather than expanding, the available optical information.
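To get a feel for the trade-off, here is a rough one-dimensional illustration borrowed from diffractive (holographic-style) displays. It is not the model used in the study: the grating relation sin(θ) = λ / (2p), the pixel count of 3,840, and the 532 nm wavelength are all assumptions chosen for the example. With a fixed number of pixels, a wider screen means a coarser pixel pitch and therefore a narrower viewing angle.

```python
import math

def full_viewing_angle_deg(num_pixels, width_m, wavelength_m=532e-9):
    """Full viewing angle of an idealised 1D pixelated modulator.

    Assumption: the maximum deflection half-angle obeys the grating
    relation sin(theta) = wavelength / (2 * pitch). All parameter
    names and default values are illustrative.
    """
    pitch = width_m / num_pixels            # pixel pitch in metres
    s = min(wavelength_m / (2 * pitch), 1.0)  # clamp to a valid sine
    return 2 * math.degrees(math.asin(s))

# Same pixel budget, increasingly wide screens: the angle shrinks.
for width in (0.01, 0.1, 0.5):
    angle = full_viewing_angle_deg(3840, width)
    print(f"width {width:>5} m -> viewing angle {angle:.3f} degrees")
```

In this toy model the product of screen width and the sine of the half-angle stays fixed at (pixel count × wavelength) / 2, which is one way of stating the space-bandwidth limit: size and angle can be traded against each other, but not both increased.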

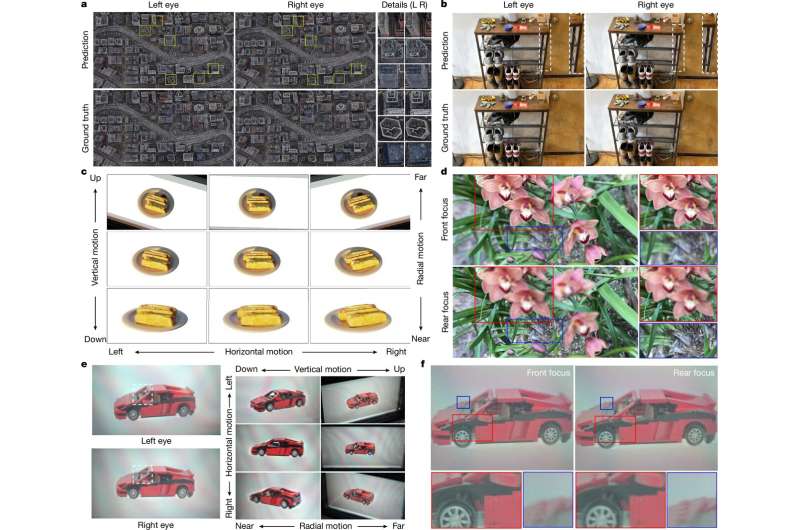

Instead of broadcasting a full light-field across the entire viewing region, the display uses a deep-learning pipeline to compute only the light rays necessary for the viewer’s exact eye position. A head-and-eye-tracking sensor continuously estimates gaze direction, while the AI engine generates the corresponding light-field pattern in real time. This targeted rendering means the display no longer “wastes” rays on unused angles, effectively stretching the functional bandwidth without violating the underlying physics.
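The geometric idea behind eye-specific rendering can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the study's pipeline: the function name `ray_layer_hits`, the coordinate convention (front layer at z = 0, viewer at z > 0), and the layer spacing are all hypothetical. For a tracked eye position, only the rays that run from that eye through the screen need to be computed, and each such ray crosses the stacked layers at slightly different points.

```python
def ray_layer_hits(eye, pixel_xy, layer_depths):
    """Return the (x, y) points where the eye-to-pixel ray crosses each layer.

    eye: (x, y, z) eye position in metres, with z > 0 in front of the screen.
    pixel_xy: (x, y) of a pixel on the front layer, which sits at z = 0.
    layer_depths: z of each layer plane (0 for the front, negative behind it).
    """
    ex, ey, ez = eye
    px, py = pixel_xy
    hits = []
    for z in layer_depths:
        # Ray parameter: t = 0 at the eye, t = 1 at the front layer.
        t = (ez - z) / ez
        hits.append((ex + t * (px - ex), ey + t * (py - ey)))
    return hits

# Hypothetical setup: eyes 6 cm apart, 50 cm from the screen,
# three layers spaced 1 cm apart.
depths = [0.0, -0.01, -0.02]
left = ray_layer_hits((-0.03, 0.0, 0.5), (0.0, 0.0), depths)
right = ray_layer_hits((0.03, 0.0, 0.5), (0.0, 0.0), depths)
print(left)
print(right)
```

Both rays pass through the same front-layer pixel but land on different rear-layer positions, which is what lets a layered display show each eye a different image. Restricting the computation to the two tracked eyes is what keeps the ray count small.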

The hardware stack is deliberately pragmatic: three standard LCD layers for light modulation and a compact tracking module feed inputs to the neural network, which handles reconstruction. No lenticular lenses, diffractive optics, or holographic modules are required, positioning the system closer to manufacturable pathways than many earlier holographic or multilayer light-field concepts.
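How a stack of flat panels can produce view-dependent images is easiest to see with a toy model. The sketch below assumes a simple multiplicative attenuation model, in which each layer passes a fraction of the light along a ray. The article does not say how EyeReal's layers combine light, so this is an illustrative assumption, and `ray_intensity` and the sample values are hypothetical.

```python
def ray_intensity(layers, indices, backlight=1.0):
    """Intensity of one ray through a stack of attenuating layers.

    layers: one list of transmittances (0.0 to 1.0) per layer, here 1D.
    indices: the pixel index the ray crosses on each layer.
    Assumption: the layers attenuate multiplicatively.
    """
    value = backlight
    for layer, idx in zip(layers, indices):
        value *= layer[idx]
    return value

# Three tiny 1D layers with two pixels each (made-up values).
layers = [
    [1.0, 0.5],  # front layer
    [0.2, 0.8],  # middle layer
    [1.0, 0.5],  # rear layer
]

# Two rays through the same front pixel that diverge behind it.
print(ray_intensity(layers, (0, 0, 0)))
print(ray_intensity(layers, (0, 1, 1)))
```

Because the two rays cross different pixels on the middle and rear layers, they leave the stack with different intensities even though they share a front pixel. Choosing the layer patterns so that the rays reaching each eye form the right image is the reconstruction task that, in the system described here, falls to the neural network.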

In lab tests, the researchers validated a viewing angle exceeding 100 degrees and demonstrated continuous, natural motion parallax across both synthetic and photographic scenes. Depth cues remained stable as users moved their heads or refocused within the scene, delivering the kind of adaptive 3D response typically associated with active-glasses systems.

The team is now working on scaling the AI pipeline to support simultaneous multi-viewer experiences, a critical step toward broader applications in entertainment, industrial visualization, and human–machine interfaces.