A new Universität Klagenfurt algorithm lets robots instantly recognize, model, and integrate any sensor—eliminating manual calibration and speeding up deployment in drones, autonomous vehicles, and industrial systems.

Robotic systems, whether flying drones, self-driving cars, or industrial machines, rely on multiple sensors to understand and navigate the world. Traditionally, adding a new sensor, such as a GPS receiver, magnetometer, or accelerometer, meant tedious manual calibration and expert-driven integration. Now, a research team from Universität Klagenfurt has developed a breakthrough method that automates this entire process.

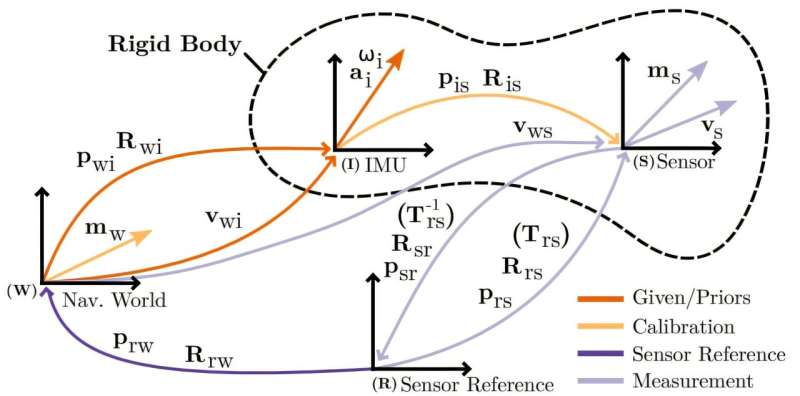

The new algorithm, created by Christian Brommer, Alessandro Fornasier, Jan Steinbrener, and Stephan Weiss, allows a robot to instantly recognize the type of sensor connected to it, determine its position and orientation, and integrate it seamlessly into the navigation system, all without requiring prior knowledge of the sensor. Whether the sensor measures a magnetic field vector, a velocity, or a GPS position, the system identifies the sensor’s mathematical model on its own.

This approach eliminates the bottleneck of manual setup. Developers no longer need to dig into datasheets or spend hours fine-tuning calibration parameters. The algorithm simply receives the raw data stream, observes how it changes as the platform moves, and automatically infers the correct model. In practice, only minimal movement is required for accurate detection: moving a hand-held device, a quick quadcopter flight, or a short car drive is enough. Once identified, the sensor can immediately contribute to real-time localization and control.
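The article does not describe the algorithm's internals, so the following is only a toy illustration of the general idea of choosing a sensor model from motion data. It is not the Klagenfurt team's published method. It assumes that the robot's own pose (position, velocity, orientation) is already known from its existing navigation system, that the new sensor's axes are aligned with the robot's body frame, and that the unknown sensor matches one of three hypothetical candidate models: a world-fixed vector seen in the body frame (magnetometer-like), a position with an unknown mounting offset (GPS-like), or a body-frame velocity. Each candidate is fitted by least squares, and the one with the smallest RMS residual is selected. All function names, trajectories, and numbers are invented for illustration.

```python
import math
import random
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Pose = Tuple[Vec3, Vec3, Mat3]  # (world position p, world velocity v, body-to-world rotation R)

def rot_z(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def rot_x(a: float) -> Mat3:
    c, s = math.cos(a), math.sin(a)
    return ((1.0, 0.0, 0.0), (0.0, c, -s), (0.0, s, c))

def matmul(A: Mat3, B: Mat3) -> Mat3:
    return tuple(tuple(sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)) for i in range(3))

def transpose(A: Mat3) -> Mat3:
    return tuple(tuple(A[j][i] for j in range(3)) for i in range(3))

def apply(A: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(A[i][k] * v[k] for k in range(3)) for i in range(3))

def add(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x + y for x, y in zip(a, b))

def sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))

def mean(vs: List[Vec3]) -> Vec3:
    return tuple(sum(v[i] for v in vs) / len(vs) for i in range(3))

# Hypothetical candidate models: each fits its free parameters by least squares
# and returns predicted readings. Rotations preserve length, so the fits are plain means.
def fit_world_vector(poses, zs):
    # Magnetometer-like: z_k = R_k^T m for an unknown world-fixed vector m.
    m = mean([apply(R, z) for (_, _, R), z in zip(poses, zs)])
    return [apply(transpose(R), m) for _, _, R in poses]

def fit_position(poses, zs):
    # GPS-like: z_k = p_k + R_k t for an unknown lever arm t in the body frame.
    t = mean([apply(transpose(R), sub(z, p)) for (p, _, R), z in zip(poses, zs)])
    return [add(p, apply(R, t)) for p, _, R in poses]

def fit_body_velocity(poses, zs):
    # Velocity-sensor-like: z_k = R_k^T v_k, no free parameters.
    return [apply(transpose(R), v) for _, v, R in poses]

CANDIDATES = {"world_vector": fit_world_vector, "position": fit_position,
              "body_velocity": fit_body_velocity}

def identify(poses, zs) -> Tuple[str, Dict[str, float]]:
    """Return the candidate with the lowest RMS residual, plus all scores."""
    scores = {}
    for name, fit in CANDIDATES.items():
        preds = fit(poses, zs)
        sq = sum(sum(d * d for d in sub(z, zp)) for z, zp in zip(zs, preds))
        scores[name] = math.sqrt(sq / len(zs))
    return min(scores, key=scores.get), scores

def make_trajectory(n: int = 40) -> List[Pose]:
    # A short helical motion with changing heading and a gentle roll wobble.
    poses = []
    for k in range(n):
        t = 0.3 * k
        p = (math.cos(t), math.sin(t), 0.2 * t)
        v = (-math.sin(t), math.cos(t), 0.2)
        R = matmul(rot_z(0.7 * t), rot_x(0.3 * math.sin(0.5 * t)))
        poses.append((p, v, R))
    return poses

def simulate(kind: str, poses: List[Pose], noise: float = 0.01, seed: int = 0) -> List[Vec3]:
    rng = random.Random(seed)
    m = (0.2, 0.0, -0.4)        # hypothetical world-fixed field vector
    lever = (0.1, 0.05, -0.02)  # hypothetical antenna offset in the body frame
    readings = []
    for p, v, R in poses:
        if kind == "world_vector":
            z = apply(transpose(R), m)
        elif kind == "position":
            z = add(p, apply(R, lever))
        else:
            z = apply(transpose(R), v)
        readings.append(tuple(x + rng.gauss(0.0, noise) for x in z))
    return readings

if __name__ == "__main__":
    poses = make_trajectory()
    for kind in CANDIDATES:
        best, scores = identify(poses, simulate(kind, poses))
        print(kind, "->", best, {k: round(s, 3) for k, s in scores.items()})
```

The motion matters here: with a varying heading and position, only the true model can explain the readings, which loosely mirrors the article's point that a short hand-held movement or drive is enough. A practical system would also need to estimate the sensor's orientation relative to the body and penalize candidates with more free parameters, so that a flexible model does not win merely by fitting noise.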

The impact could be significant. On GitHub alone, more than 14,000 developer requests mention “sensor model integration,” underscoring the demand for faster, more reliable methods. The Klagenfurt team’s solution promises to speed up prototyping, reduce engineering costs, and make robotics systems more adaptable to hardware changes—critical in fields where hardware configurations often evolve rapidly.

By publishing their work in IEEE Transactions on Robotics, the researchers have opened the door for broader adoption in both academic and commercial projects. As Brommer notes, the goal is simple but powerful: “Make sensor integration into localization solutions easier, faster, and more robust.” From simplifying drone upgrades to streamlining autonomous vehicle sensor swaps, this self-learning algorithm could reshape how robots perceive and adapt to their environment—no human calibration wizardry required.