What if AI models no longer needed the cloud? A new chip brings compute to laptops and edge devices, shifting where intelligence runs.

Running large AI models has largely been confined to cloud data centers, because laptops and edge systems could not meet the compute and power demands. Depending on the cloud, in turn, brings added cost, latency, and data-security concerns.

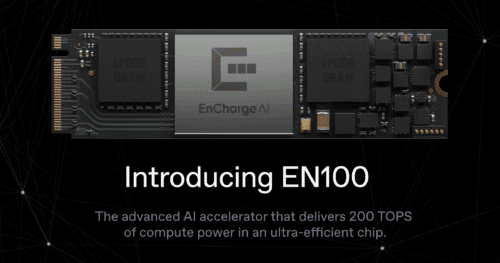

EnCharge AI has introduced the EN100 accelerator to run AI inference directly on local devices. Built on analog in-memory computing, it delivers more than 200 TOPS of compute within the power and size constraints of laptops, workstations, and edge platforms. Developers can use it to build applications that do not depend on cloud infrastructure.

According to EnCharge AI, the chip offers up to 20x better performance per watt than competing solutions. It can run generative models and real-time computer vision workloads, and it comes with 128 GB of LPDDR memory and 272 GB/s of memory bandwidth.

On the software side, EN100 supports PyTorch, TensorFlow, and other frameworks, backed by compilers and optimization tools. Its architecture is programmable, so it can target both current AI models and future ones.

“EN100 represents a fundamental shift in AI computing architecture, rooted in hardware and software innovations that have been de-risked through fundamental research spanning multiple generations of silicon development,” said Naveen Verma, CEO at EnCharge AI. “These innovations are now being made available as products for the industry to use, as scalable, programmable AI inference solutions that break through the energy efficiency limits of today’s digital solutions. This means advanced, secure, and personalized AI can run locally, without relying on cloud infrastructure. We hope this will radically expand what you can do with AI.”

“The real magic of EN100 is that it makes transformative efficiency for AI inference easily accessible to our partners, which can be used to help them achieve their ambitious AI roadmaps,” said Ram Rangarajan, Senior Vice President of Product and Strategy at EnCharge AI. “For client platforms, EN100 can bring sophisticated AI capabilities on device, enabling a new generation of intelligent applications that are not only faster and more responsive but also more secure and personalized.”