Running large AI models is hard. Microsoft's Maia 200 chip is designed to run them faster, scale further, and cut latency in production cloud applications.

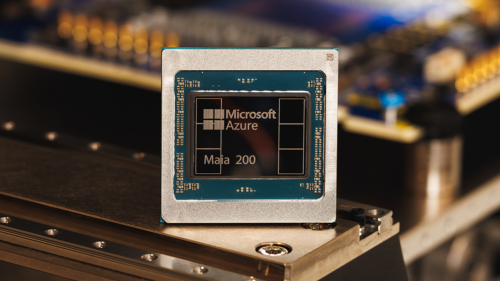

Running large AI models in production is getting harder: inference workloads demand high compute throughput, fast memory access, and large-scale networking, and many cloud users run into limits on performance, power consumption, and scaling. Microsoft designed Maia 200 to address these problems for teams deploying AI models in real applications. The accelerator targets inference and combines high compute performance, a redesigned memory system, and a scalable networking architecture. The chips integrate directly into Microsoft Azure and prepare Microsoft’s global cloud infrastructure for the next generation of AI workloads.

Built on a 3-nanometer process, Maia 200 delivers over 10 petaFLOPS at 4-bit precision and more than 5 petaFLOPS at 8-bit precision. Microsoft says this lets a single accelerator run today’s largest AI models while leaving headroom for larger ones. Its networking design scales over standard Ethernet to clusters of up to 6,144 accelerators, which is intended to simplify building and managing large inference systems.
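To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It only multiplies the quoted per-chip peak numbers by the maximum cluster size and estimates how much memory a model's weights need at 4-bit versus 8-bit precision. The function names and the 100-billion-parameter model are hypothetical and used purely for illustration. Real throughput depends on memory bandwidth, networking, and utilization, none of which are modeled here.

```python
# Rough arithmetic on the figures quoted in the article.
# The per-chip numbers are lower bounds ("over 10" and "more than 5" petaFLOPS).
PFLOPS_4BIT_PER_CHIP = 10   # peak petaFLOPS at 4-bit precision
PFLOPS_8BIT_PER_CHIP = 5    # peak petaFLOPS at 8-bit precision
MAX_CLUSTER_SIZE = 6144     # maximum accelerators per cluster


def cluster_peak_exaflops(pflops_per_chip, num_chips=MAX_CLUSTER_SIZE):
    """Theoretical aggregate peak in exaFLOPS (1 exaFLOPS = 1000 petaFLOPS).

    Assumes perfect scaling, which real clusters do not achieve.
    """
    return pflops_per_chip * num_chips / 1000


def weight_footprint_gb(params_billions, bits_per_weight):
    """Memory needed for model weights alone, in gigabytes.

    Ignores activations, KV cache, and quantization metadata.
    1 billion parameters at 8 bits each is 1 GB.
    """
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    print(f"4-bit cluster peak: {cluster_peak_exaflops(PFLOPS_4BIT_PER_CHIP):.2f} exaFLOPS")
    print(f"8-bit cluster peak: {cluster_peak_exaflops(PFLOPS_8BIT_PER_CHIP):.2f} exaFLOPS")

    # Hypothetical 100-billion-parameter model, for illustration only.
    for bits in (4, 8):
        print(f"{bits}-bit weights: {weight_footprint_gb(100, bits):.0f} GB")
```

Under these assumptions, a full 6,144-chip cluster has a theoretical peak above 61 exaFLOPS at 4-bit precision. Halving the bits per weight halves the weight footprint, which is one reason low-precision formats matter for inference.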

The first deployments will be in U.S. regions of the Microsoft Azure cloud, where Maia 200 will support AI models from the Microsoft Superintelligence team. It will accelerate platforms such as Azure AI Foundry, Microsoft’s integrated and interoperable AI platform for developing AI applications and agents, and will also support Microsoft 365 Copilot. This helps application teams deploy and run AI services faster and at larger scale.

Microsoft’s integrated model, which combines chips, AI models, and applications, is meant to close system-level performance and efficiency gaps. Because it operates some of the world’s most demanding AI workloads, Microsoft can tightly align chip design, model development, and application-level optimization.

Alongside Maia 200, Microsoft is previewing the Maia Software Development Kit (SDK). The SDK supports common AI frameworks and helps developers optimize their models for deployment on Maia systems. It includes a Triton compiler, PyTorch support, low-level programming in NPL, a simulator, and a cost calculator, giving developers tools to adapt models and manage deployment cost.