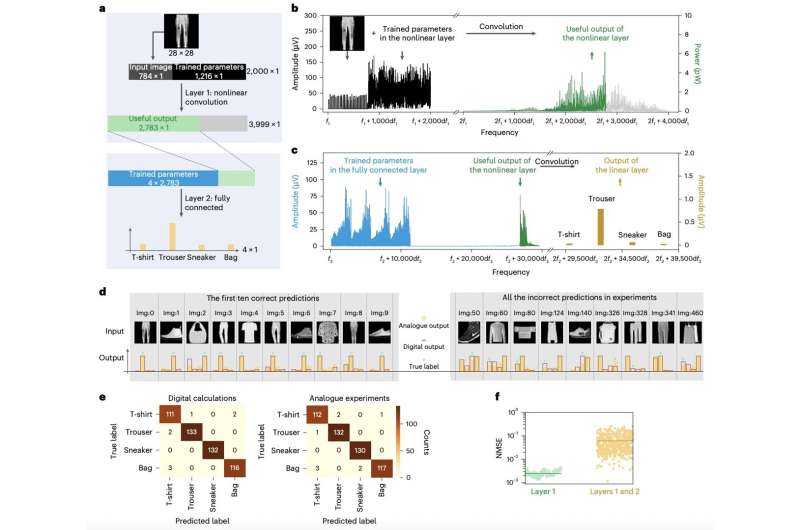

A new approach encodes data across multiple frequencies in a single analog device, enabling energy-efficient, high-accuracy AI hardware without adding more physical components.

Researchers have unveiled an approach to scaling analog computing systems using a synthetic frequency domain, a technique that could enable compact, energy-efficient AI hardware without adding more physical components. The method was developed by teams at Virginia Tech, Oak Ridge National Laboratory, and the University of Texas at Dallas.

Analog computers encode information as continuous quantities—like voltage, vibrations, or frequency—rather than digital 0s and 1s. While inherently more energy-efficient than digital systems, analog platforms face challenges when scaled: components can behave inconsistently across larger arrays, leading to errors. The new synthetic-domain strategy circumvents this by encoding data across different frequencies within a single device, minimizing device-to-device variation.

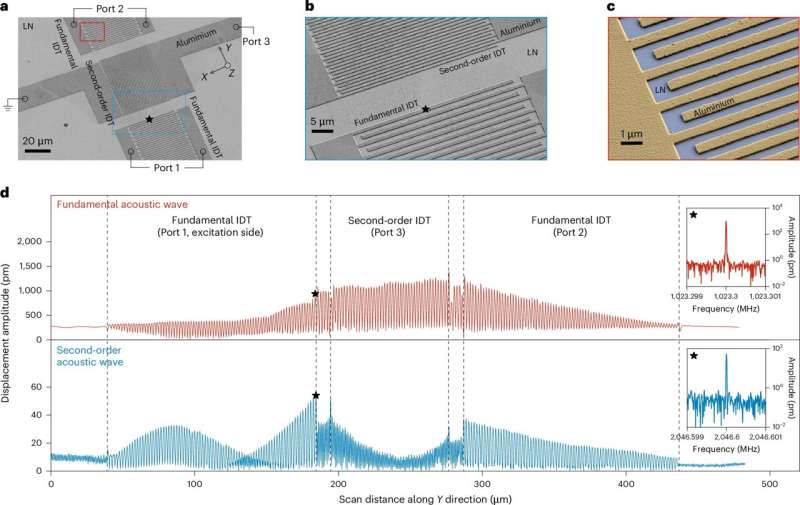

At the core of the approach is a lithium niobate integrated nonlinear phononic device, which leverages acoustic-wave physics to perform operations such as matrix multiplication. By representing a 16×16 data matrix on one device, the system avoids the multiple components that scaling up a conventional analog computer typically requires.
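To make the idea of frequency-encoded matrix multiplication concrete, here is a toy numerical sketch. It is not a model of the lithium niobate device or of the researchers' method: it only illustrates how 16 values can share one waveform by riding on 16 distinct tones, and how a 16×16 matrix can then act on those frequency channels. The channel count matches the article; the sample count, tone spacing, weights, and all function names are assumptions chosen for illustration.

```python
import math

N_CHANNELS = 16   # hypothetical: one frequency channel per matrix row/column
N_SAMPLES = 64    # samples per window; tones sit on DFT bins 1..16, below Nyquist


def tone(k, n):
    """Sample n of the cosine tone assigned to channel k (DFT bin k + 1)."""
    return math.cos(2 * math.pi * (k + 1) * n / N_SAMPLES)


def encode(values):
    """Superpose one tone per value; tone k carries values[k] as its amplitude."""
    return [sum(v * tone(k, n) for k, v in enumerate(values))
            for n in range(N_SAMPLES)]


def decode(signal):
    """Recover each tone's amplitude by projecting the signal onto that tone."""
    return [2 / N_SAMPLES * sum(s * tone(k, n) for n, s in enumerate(signal))
            for k in range(N_CHANNELS)]


def matvec(weights, x):
    """Plain matrix-vector product, used as the channel mixer and as reference."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def frequency_domain_matvec(weights, x):
    """Send x through one waveform, mix channels with weights, emit one waveform."""
    amplitudes = decode(encode(x))
    return encode(matvec(weights, amplitudes))


# Arbitrary deterministic test data (not taken from the study).
W = [[((3 * j + 5 * k) % 7 - 3) / 10 for k in range(N_CHANNELS)]
     for j in range(N_CHANNELS)]
x = [0.1 * (k + 1) for k in range(N_CHANNELS)]

y_from_waveform = decode(frequency_domain_matvec(W, x))
y_direct = matvec(W, x)
max_error = max(abs(a - b) for a, b in zip(y_from_waveform, y_direct))
print(f"largest difference from direct product: {max_error:.2e}")
```

In this sketch the input and output vectors each travel as a single waveform, so the matrix dimensions are set by how many tones are used and not by how many physical signal paths exist. In the real device the mixing between channels comes from acoustic-wave physics; here it is ordinary arithmetic.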

Initial demonstrations showed the platform successfully classifying data into four categories using one device or only a few, highlighting the potential for early-stage R&D applications where hardware availability is limited. The method could extend to emerging devices across different computing modalities, offering a pathway to reliable analog AI accelerators.

Beyond classification tasks, the researchers are exploring scaling the platform to handle larger neural networks and more complex computations. The combination of energy efficiency, compact form factor, and high accuracy positions synthetic-domain analog computing as a promising route for next-generation AI hardware, particularly in applications where conventional digital systems face energy or size constraints.

With this approach, analog computing could finally bridge the gap between lab-scale prototypes and scalable, practical systems, opening doors for AI-specific processors that leverage physics-based computation rather than purely electronic logic.

“The synthetic domain approach allows us to implement physical neural networks (PNNs) using only a few acoustic-wave devices. Co-designing the neural network with the hardware significantly boosts accuracy, in our case achieving 98.2% on a classification task,” said Linbo Shao, the study’s senior author.