A recurring user interface (UI) design problem is that it takes too many steps to get something done. Engineers have been tackling this by moving from physical keys, switches and knobs to resistive touchscreens, and later to capacitive screens that accept multi-touch input, swipes and other gestures. We are also seeing wearable controllers and vision-based systems that let you control electronics without laying a finger on a physical device.

It is now six years since Microsoft disrupted gaming with optical sensors in its Kinect, and gesture technology looks ready to take a leap in 2016.

Swiping your way through screens

It all starts with recognising touch, swipes and gestures. GestureTek has been working on 3D video gesture control since the early 2000s. The company has kept up with display technology, too, and now offers multi-touch-enabled 4K displays. Its multi-touch tables support up to 32 touch points on 213cm displays.

GestureTek also offers GestTrack3D, a patented 3D gesture-control system for developers, original equipment manufacturers and public-display providers. Its software development kit includes a library of one-handed and two-handed gestures.
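Recognising a swipe, the starting point described above, can be reduced to a few checks on a stroke’s start and end points. The sketch below is a minimal illustration, not GestureTek’s SDK: the `TouchPoint` type, the 50-pixel minimum distance and the 0.5-second time limit are hypothetical choices.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TouchPoint:
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels (grows downwards on most screens)
    t: float  # timestamp in seconds


def classify_swipe(points: List[TouchPoint],
                   min_distance: float = 50.0,
                   max_duration: float = 0.5) -> Optional[str]:
    """Return 'left', 'right', 'up' or 'down' for a swipe, or None otherwise."""
    if len(points) < 2:
        return None
    start, end = points[0], points[-1]
    dx = end.x - start.x
    dy = end.y - start.y
    duration = end.t - start.t
    # A stroke that is too slow or too short is treated as a drag or a tap
    if duration > max_duration or max(abs(dx), abs(dy)) < min_distance:
        return None
    # The dominant axis of motion decides the direction
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


# Usage: a quick 120-pixel stroke to the right
stroke = [TouchPoint(100, 300, 0.00), TouchPoint(160, 305, 0.08), TouchPoint(220, 310, 0.15)]
print(classify_swipe(stroke))  # right
```

Gesture libraries such as the one-handed and two-handed sets mentioned above build on the same idea, but track many points over time rather than just the endpoints.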

Of course, sometimes you do not have the budget to replace existing displays. In such cases, DISPLAX Skin Multitouch, a patent-pending technology, lets users turn non-conductive flat surfaces into multi-touch surfaces that are resistant to liquids and ultraviolet (UV) rays.

DISPLAX features a proprietary noise-cancelling technology called XTR-Shield, which helps reduce the gap between the sensor and the display and so also helps remove parallax. It uses projected capacitive technology and can handle up to 20 simultaneous touches with a relative accuracy of one millimetre.
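Projected capacitive controllers generally scan a grid of electrodes and look for cells whose capacitance change stands out from a no-touch baseline. The sketch below shows one simple way to pick out multiple touches as local maxima on such a grid; it is not DISPLAX’s algorithm, and the grid values, the threshold and the 20-touch cap (borrowed from the figure above) are illustrative assumptions.

```python
from typing import List, Tuple


def find_touches(delta: List[List[float]], threshold: float,
                 max_touches: int = 20) -> List[Tuple[int, int]]:
    """Return (row, col) grid cells that look like finger contacts.

    delta holds the capacitance change at each electrode crossing relative to a
    no-touch baseline. A cell counts as a touch if it exceeds the threshold and
    no neighbouring cell is larger (a local maximum).
    """
    rows, cols = len(delta), len(delta[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            value = delta[r][c]
            if value < threshold:
                continue
            neighbours = (
                delta[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            )
            if all(n <= value for n in neighbours):
                peaks.append((value, r, c))
    # Keep the strongest contacts, up to the controller's touch limit
    peaks.sort(reverse=True)
    return [(r, c) for _, r, c in peaks[:max_touches]]


grid = [[0.0] * 5 for _ in range(5)]
grid[1][1], grid[1][2] = 10.0, 4.0  # one finger, spilling into the next cell
grid[3][3] = 8.0                    # a second finger
print(find_touches(grid, threshold=5.0))  # [(1, 1), (3, 3)]
```

Two equal neighbouring cells would both be reported here; a production controller would also merge such plateaus and interpolate a sub-cell position to reach millimetre-level accuracy.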

The PQ Labs G4 overlay serves a similar purpose: it can be fitted in front of a television or monitor to turn it into a multi-touch display.

The latest entrant is probably Bixi, a portable gesture device from France. It combines Gorilla Glass, Bluetooth Low Energy (BLE) and optical sensing, and can detect eight gestures.

Wearables and gesture recognition

Gesture control through gloves, seen by many as the easiest way to break the stranglehold of keyboards and mice, is a promising area. Apotact Labs recently brought out a glove called Gest, which uses skeletal models and motion-processed data to sense gestures.

Gest comes with a software development kit (SDK) that allows engineers to build new applications for the platform. The glove packs 15 sensors, including accelerometers, gyroscopes and magnetometers. The vendor claims its software is smart enough to learn how you use your hands and to build a personalised model from that.
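Apotact Labs does not say how Gest fuses its sensors. A complementary filter is one common, simple way to combine gyroscope and accelerometer readings into a stable orientation estimate, and the sketch below applies it to a single tilt (pitch) angle. The sample format, the sign convention and `alpha = 0.98` are assumptions; the magnetometer, which would be needed for heading, is left out.

```python
import math
from typing import Iterable, Tuple

# One IMU sample: (gyro rate about the pitch axis in deg/s, ax, ay, az in g)
Sample = Tuple[float, float, float, float]


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the direction of gravity (reliable only when the hand is fairly still)."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_pitch(samples: Iterable[Sample], dt: float, alpha: float = 0.98) -> float:
    """Fuse gyroscope and accelerometer readings into a pitch estimate in degrees."""
    pitch = None
    for gyro_rate, ax, ay, az in samples:
        measured = accel_pitch_deg(ax, ay, az)
        if pitch is None:
            pitch = measured  # start from the accelerometer's absolute reading
        else:
            # Gyro integration is smooth but drifts; the accelerometer is noisy but drift-free
            pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * measured
    if pitch is None:
        raise ValueError("no samples")
    return pitch


# A level hand read by a gyroscope with a constant 10 deg/s bias, sampled at 100 Hz
biased = [(10.0, 0.0, 0.0, 1.0)] * 1000
print(round(complementary_pitch(biased, dt=0.01), 3))  # 4.9
```

Pure integration of that biased gyroscope would drift by 10 degrees every second; the filter instead settles at a bounded offset of alpha × bias × dt / (1 − alpha), which is 4.9 degrees here.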

Alternatively, as a more portable solution, Thalmic Labs brought out the Myo armband, which measures electrical activity in the forearm muscles to make sense of gestures. It can be used to control drones or paired with Oculus Rift. However, its distinguishing feature is also its weakness: many reports suggest the product is not yet ready for prime time.
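Myo’s gesture classifier is proprietary, but a common first step in processing muscle (EMG) signals is to turn the raw, rapidly alternating voltage into a root-mean-square (RMS) envelope that rises when a muscle contracts. The single-channel sketch below does that and flags windows above a threshold; the window size, threshold and sample values are hypothetical.

```python
import math
from typing import List, Sequence


def rms_envelope(emg: Sequence[float], window: int) -> List[float]:
    """Root-mean-square of each consecutive, non-overlapping window of raw EMG samples."""
    envelope = []
    for start in range(0, len(emg) - window + 1, window):
        chunk = emg[start:start + window]
        envelope.append(math.sqrt(sum(s * s for s in chunk) / window))
    return envelope


def detect_activation(emg: Sequence[float], window: int, threshold: float) -> List[bool]:
    """Flag windows where muscle activity exceeds a threshold, e.g. during a fist clench."""
    return [value > threshold for value in rms_envelope(emg, window)]


# Relaxed forearm (small signal) followed by a clench (large signal)
emg = [0.01, -0.01] * 50 + [0.5, -0.5] * 50
print(detect_activation(emg, window=50, threshold=0.1))  # [False, False, True, True]
```

Telling individual gestures apart requires several such channels around the forearm and a classifier on top of their envelopes, which is where the real difficulty lies.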

Aria, from Deus Ex Technology, is a watch-like wearable that lets you interact with electronics through simple finger gestures.

Funded by Menlo Ventures and Sequoia Capital, Nod probably has the smallest form factor of any wearable gesture controller. It is a BLE-enabled gesture-control ring with highly sensitive sensors built into the hardware, giving developers and engineers up to 12,600 dots per centimetre (32,000dpi) of sensitivity.
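The two sensitivity figures quoted for Nod are the same value in different units, since one inch is exactly 2.54cm. The conversion, and the displacement that a single count represents, work out as:

$$
\frac{32\,000\ \text{dots}}{1\ \text{inch}} \times \frac{1\ \text{inch}}{2.54\ \text{cm}} \approx 12\,598\ \text{dots/cm} \approx 12\,600\ \text{dots/cm},
\qquad
\frac{25.4\ \text{mm}}{32\,000} \approx 0.79\ \mu\text{m per dot}
$$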

Gesture-control rings have been in the works for a long time, including some that never really took off. The latest is Ring Zero from Logbar, which first appeared on Kickstarter and is now available to everyone. Developers can access the Ring SDK for iOS, but the Android version is still a work in progress. The drawback is that the user has to wear the sensing instrument.

Just smile and wave

3Gear Systems is a start-up built on the idea that gesture control should not require touching any electronics in the first place. It is working towards this through camera-based gesture recognition powered by a tiny device.

Leap Motion also works in this space, but offers a gesture-recognition solution in a box. Its device can be used with laptops and even with Oculus Rift. It combines two cameras with three infrared light-emitting diodes (LEDs). The cameras track light from the LEDs at a wavelength of 850 nanometres, which is outside the visible spectrum, so users cannot see it at all. Once the hardware has captured this data, it passes it to Leap Motion’s tracking software, which does all the data crunching.
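Leap Motion’s tracking software is proprietary, but with two cameras the textbook way to recover depth is stereo triangulation: a feature that appears at slightly different horizontal positions in the two images (the disparity) lies at depth Z = f × B / d. The sketch below assumes rectified pinhole cameras; the 400-pixel focal length, 4cm baseline and image centre are hypothetical values, not Leap Motion specifications.

```python
from typing import Tuple


def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres for rectified stereo cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(x_left: float, x_right: float, y: float,
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> Tuple[float, float, float]:
    """3D position (metres, left-camera frame) of a feature matched in both images."""
    z = stereo_depth(focal_px, baseline_m, x_left - x_right)
    # Back-project the left-image pixel through the pinhole camera model
    return (x_left - cx) * z / focal_px, (y - cy) * z / focal_px, z


# A fingertip seen at x=360 in the left image and x=280 in the right image
x, y, z = triangulate(360, 280, 240, focal_px=400, baseline_m=0.04, cx=320, cy=240)
print(round(x, 3), round(y, 3), round(z, 3))  # 0.02 0.0 0.2
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more accuracy for a distant hand than for a nearby one, so stereo depth accuracy falls off quickly with range.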

ArcSoft’s technology enables gesture recognition on devices with stereoscopic or even single-lens cameras. This lets users interact with devices through natural hand gestures as well as face, eye and finger movements. ArcSoft claims its hand-gesture recognition is highly accurate, detecting clear gestures at a distance of five metres even in low-light or backlit conditions.

Another example is Seoul-based VTOUCH, which lets users control devices by tracking their eyes and fingers using a 3D camera.

AMD, too, is interested in this area, probably to strengthen the market for its accelerated processing units (APUs). Its AMD Gesture Control software is freely available and lets users turn cameras, such as their devices’ built-in cameras, into gesture-recognition tools. The company claims it delivers good accuracy in low-light conditions.

TedCas specialises in building touch-free, natural-UI-based medical environments. It focuses on using optoelectronic devices to deliver intuitive interactions as a way to prevent contact infections. One of its products, TedCube, is a small device that connects to any gesture-control sensor and translates gestures into generic keyboard and mouse commands, so it can be connected to any system or equipment to control it.
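TedCube’s internals are not described, but the idea of translating gestures into generic keyboard and mouse commands can be pictured as a lookup table plus a little safety logic. The sketch below is hypothetical throughout: the gesture names, the command tuples and the 0.5-second cooldown (which stops one lingering motion from firing the same command repeatedly) are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

Command = Tuple[str, str]  # (device, action), e.g. ("key", "PageDown")

# Hypothetical gesture names; a real sensor SDK defines its own vocabulary
DEFAULT_MAPPING: Dict[str, Command] = {
    "swipe_left": ("key", "Left"),
    "swipe_right": ("key", "Right"),
    "push": ("mouse", "left_click"),
    "circle_clockwise": ("key", "PageDown"),
}


class GestureTranslator:
    """Map recognised gestures to generic keyboard/mouse commands, with a per-gesture cooldown."""

    def __init__(self, mapping: Dict[str, Command], cooldown_s: float = 0.5):
        self.mapping = mapping
        self.cooldown_s = cooldown_s
        self._last_fired: Dict[str, float] = {}

    def translate(self, gesture: str, timestamp: float) -> Optional[Command]:
        command = self.mapping.get(gesture)
        if command is None:
            return None  # unknown gesture: ignore it rather than guess
        last = self._last_fired.get(gesture)
        if last is not None and timestamp - last < self.cooldown_s:
            return None  # suppress repeats from a single, lingering motion
        self._last_fired[gesture] = timestamp
        return command


translator = GestureTranslator(DEFAULT_MAPPING)
print(translator.translate("swipe_left", 0.0))  # ('key', 'Left')
print(translator.translate("swipe_left", 0.2))  # None
```

Keeping the mapping as data rather than code means the same translator could drive different equipment simply by swapping the table.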

Gesture-development platforms

Soft Kinetic’s iisu is a platform for developing and deploying natural gesture applications. It enables full-body skeleton tracking as well as precise hand and finger tracking. It also supports a range of cameras, including legacy ones such as the original Kinect, Asus Xtion, Mesa SR 4000 and Panasonic D-Imager and, of course, Soft Kinetic’s own DepthSense 311 and DepthSense 325 (aka Creative Senz3D), and works with Flash, Unity, C# and C++ environments.

Soft Kinetic is also making its DS536A module available to select customers for prototyping and development. The DS536A combines a time-of-flight sensor, diffused laser illumination and a three-axis accelerometer. It has a lower resolution and a narrower field of view, and has been available since September 2015.

Texas Instruments’ OPT8140 is a time-of-flight sensor. It can be found in the DepthSense 325 camera, also sold as the Creative Senz3D.
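Time-of-flight sensors estimate distance from how long emitted light takes to bounce back. In continuous-wave designs, this delay is measured as a phase shift φ of amplitude-modulated light, giving d = c × φ / (4π f). The sketch below assumes such a continuous-wave scheme; the 50MHz modulation frequency is an illustrative value, not an OPT8140 specification.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance for a continuous-wave ToF pixel: d = c * phi / (4 * pi * f)."""
    phase = phase_rad % (2 * math.pi)  # the sensor can only observe phase modulo 2*pi
    return SPEED_OF_LIGHT * phase / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond c / (2f) the phase wraps around, so distant objects alias to nearer depths."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


f_mod = 50e6  # hypothetical 50 MHz modulation
print(round(unambiguous_range(f_mod), 3))          # 2.998
print(round(depth_from_phase(math.pi, f_mod), 3))  # 1.499
```

A 50MHz modulation gives an unambiguous range of roughly 3m, which suits hand and body tracking at desk or living-room distances; raising the frequency improves depth precision but shortens that range.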

The MLX75023 sensor, which features Soft Kinetic technology and is manufactured in Melexis’ automotive-grade complementary metal-oxide-semiconductor (CMOS) mixed-signal process, is claimed to be the highest-resolution 3D sensor available for the automotive safety and infotainment markets.

OnTheGo Platforms built a gesture-recognition system that works on any mobile device with a standard camera.

Atheer has a similar system that relies on infrared light instead. Interestingly, Atheer acquired OnTheGo so that the two companies’ combined technologies could yield a much more capable product.

Sony has made SmartEyeGlass available to developers, with the aim of tapping the augmented reality segment.

Eyefluence recently came out of stealth with ₹950 million in funding. It has created a unique eye-tracking system that can interpret eye gestures in real time.

What is up next

An Apple insider claims that the company has a new patent for 3D gesture control. The technology lets a computer identify hand motions made by a user. More interestingly, it can learn gestures well enough to recognise them even when part of the gesture is hidden from the camera.

This could make gesture control far more consumer-friendly, since gestures made in non-ideal conditions would still be detected and acted upon. If implemented well, it could be as significant as the responsive touch experience the original iPhone delivered at a time when touch-enabled devices were frustrating to use.

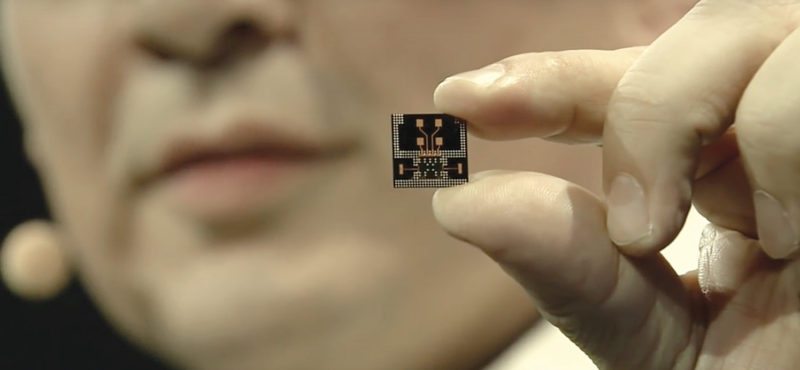

Sensing through materials is something cameras and computer vision cannot manage on their own. Google seems to have taken the lead here with Project Soli, from its Advanced Technology and Projects (ATAP) group, which looks like the first realistic solution to this problem.

Soli is a radar that operates at 60 gigahertz and captures between 3000 and 10,000 frames per second. A device built on this technology can measure finger and hand motion in free space so accurately that even rubbing fingers together is detected precisely.
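A back-of-the-envelope calculation suggests why a 60GHz radar suits such fine motion. Using the frequency and frame rates quoted above, the wavelength is about 5mm, and the round-trip phase of a reflection shifts by 4πΔr/λ when a target moves Δr towards or away from the sensor, so even a 1mm finger movement produces a large phase change. This is a generic radar relation, not a description of Soli’s actual processing pipeline.

$$
\lambda = \frac{c}{f} = \frac{3\times10^{8}\ \text{m/s}}{60\times10^{9}\ \text{Hz}} = 5\ \text{mm}
$$

$$
\Delta\varphi = \frac{4\pi\,\Delta r}{\lambda}
\quad\Rightarrow\quad
\Delta r = 1\ \text{mm}:\ \Delta\varphi = \frac{4\pi \times 1\ \text{mm}}{5\ \text{mm}} \approx 2.5\ \text{rad} \approx 144^\circ
$$

$$
T_{\text{frame}} = \frac{1}{3000\ \text{s}^{-1}} \approx 333\ \mu\text{s}
\quad\text{to}\quad
\frac{1}{10\,000\ \text{s}^{-1}} = 100\ \mu\text{s}
$$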

Dilin Anand is a senior assistant editor at EFY. He holds a B.Tech from the University of Calicut and is currently pursuing an MBA at Christ University, Bengaluru.