With a tsunami of data flowing from every application, highly efficient storage drives are set to become the de facto choice for all future devices, from mobiles, laptops, and mini/micro servers to high-performance computing systems.

A solid-state drive (SSD) based on Non-Volatile Memory Express (NVMe) is a feature-rich device. It connects over the Peripheral Component Interconnect Express (PCIe) bus for maximum throughput and low latency, and can be exploited to meet future scaling needs. The ongoing push to cut latency further in hyperconverged servers for high-performance computing (HPC) systems strengthens its case as the ubiquitous storage choice of the future.

Solid-State Drive storage and its inherent advantages

Before examining the factors that make SSDs the most probable storage candidate of the future, let us first look at why SSDs matter as storage devices.

An SSD’s faster boot times and order-of-magnitude gains in read/write speed save time and improve productivity for individuals and enterprises alike. Newer applications such as 4K/8K video streaming and 5G/6G communications will find SSDs a better match for removing bottlenecks in storage, an essential link in the compute-network-storage chain. A data-centre-ready, cloud-ready infrastructure can transform the economics of maintaining such converged infrastructure. The following are three major components revolutionising the server farms of the future:

Computing server farms

Over the years, we have witnessed an explosion of data that technologies such as the Internet of Things (IoT), artificial intelligence (AI), blockchain, autonomous driving, 4K/8K video streaming, and 5G networks are likely to fuel further. This has driven significant advances in computing. To reduce latency further within clusters of computing cores, designers are using PCIe controllers, networks-on-chip (NoC), and new standards such as Compute Express Link (CXL). Meanwhile, data-intensive applications such as AI and high-definition (HD) camera video keep increasing the load on the Internet.

Of late, edge computing has become a prominent accelerator for AI and ML processing because it reduces the amount of data transferred over the network. This is made possible by in-memory computing, or by analysing data with built-in analysers attached to storage devices, as in computational storage drives (CSDs).
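The data-reduction argument behind computational storage can be illustrated with a toy model. The sketch below is hypothetical: the fixed 64-byte record size, the threshold query, and the function names are assumptions chosen for illustration, not any CSD vendor's interface. It compares the bytes that cross the bus when the host filters the data against the bytes that cross when the drive filters first.

```python
from dataclasses import dataclass

# Hypothetical fixed record size in bytes; real record layouts vary.
RECORD_SIZE = 64


@dataclass
class TransferReport:
    records_sent: int
    bytes_sent: int


def host_side_filter(records, threshold):
    """Conventional path: every record crosses the bus, then the host filters."""
    sent = len(records)
    matches = [r for r in records if r > threshold]
    return matches, TransferReport(sent, sent * RECORD_SIZE)


def near_storage_filter(records, threshold):
    """Computational-storage path: the drive filters, so only matches cross the bus."""
    matches = [r for r in records if r > threshold]
    return matches, TransferReport(len(matches), len(matches) * RECORD_SIZE)


if __name__ == "__main__":
    readings = list(range(1000))  # toy sensor readings 0..999
    host_matches, host_report = host_side_filter(readings, 989)
    csd_matches, csd_report = near_storage_filter(readings, 989)
    assert host_matches == csd_matches  # same answer on either path
    print(host_report.bytes_sent, csd_report.bytes_sent)  # 64000 640
```

Both paths return the same ten matching records, but in this toy case the near-storage path moves 100 times fewer bytes. The real saving depends entirely on how selective the query is.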

Networking

Demand for network bandwidth continues to grow exponentially with emerging applications such as AI, ML, high-bandwidth 5G use cases, IoT, and edge computing. This demand for high throughput at low latency is being met with various physical-media architectures for SerDes (serialiser/deserialiser), the cornerstone building block of high-speed serial links.

Data can be carried over optical or electrical cables, or over short PCB tracks. Various standards bodies are developing SerDes specifications to meet the ever-increasing demand for higher bandwidth and lower latency in data centres, cloud and edge computing, 5G, AI, and ML applications.

Storage

Over the past seven decades, storage devices have undergone continuous miniaturisation, from wardrobe-sized drives holding a few megabytes of data to single-chip devices holding a few terabytes. Technological advances have taken us from magnetic tapes to hard disk drives to NVMe-based SSDs. The storage elements themselves have moved from magnetic and ferroelectric devices to semiconductor elements, the latest being flash memory with multi-level cells.

Advantages of NVMe

NVMe is the prominent interface for high-speed storage, and the PCIe interface that carries NVMe has become the default choice for building SSD storage. A module consisting of a PCIe bus, an NVMe controller, and flash memory has distinct advantages over many other storage systems.

Latency is being optimised at every level of HPC systems (on-chip, chip-to-chip, board-to-board, and rack-to-rack, within and across data centres) through emerging standards such as CXL.

For raising throughput, PCIe is the most prominent interface across many applications. Each new specification raises the data rate per lane, and the latest upcoming specification targets 64 Gbps per lane. This will increase throughput on the host side, as well as between the host and chips that have a native on-chip controller. Because PCIe acts as the bridge between the CPU and flash memory through the NVMe controller, it has been the natural choice for building future converged systems with integrated storage.
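A quick calculation shows what a higher per-lane rate means for a drive. The sketch below uses the per-lane signalling rates of recent PCIe generations (8, 16, 32, and 64 GT/s for PCIe 3.0 to 6.0). It computes only the *raw* one-direction bandwidth. Usable throughput is lower once 128b/130b encoding (PCIe 3.0 to 5.0) or FLIT and FEC overhead (PCIe 6.0) and protocol headers are accounted for. The function name is illustrative.

```python
# Per-lane signalling rates in GT/s for recent PCIe generations.
# Counting one bit per transfer per lane, GT/s equals raw Gb/s per lane.
PCIE_GT_PER_LANE = {
    "3.0": 8,
    "4.0": 16,
    "5.0": 32,
    "6.0": 64,  # the 64 Gbps-per-lane target mentioned in the text
}


def raw_bandwidth_gbytes(gen: str, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s, ignoring encoding and protocol overhead."""
    if lanes <= 0:
        raise ValueError("lane count must be positive")
    return PCIE_GT_PER_LANE[gen] * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in PCIE_GT_PER_LANE:
        # x4 is the link width typically used by M.2 and U.2 NVMe SSDs
        print(f"PCIe {gen} x4: {raw_bandwidth_gbytes(gen, 4):.0f} GB/s per direction")
```

For an x4 link, the script prints 4, 8, 16, and 32 GB/s per direction for PCIe 3.0 through 6.0. Each doubling of the per-lane rate doubles host-to-drive headroom without adding lanes or connector pins.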

With these main features and emerging storage solutions in mind, we can now address the demands of large storage systems built from SSDs.

Emerging systems and complex applications call for specific features in SSD-based storage. These include further reducing latency in applications such as ML, addressing security at the hardware and software levels, reducing throughput requirements between the CPU and storage, distributing storage over the fabric, and semi-processing data near storage.

In other words, processing data near where it is stored, instead of moving it all to the main processors, saves bandwidth and power, offloads metadata processing, improves security, and reduces latency.

A camera interface brings further gains. Interfacing camera controllers with the storage device, and transferring and analysing the data over a PCIe bus that connects seamlessly to graphics/image accelerators, improves system performance. It reduces the workload on the main processors and eases data transfer between storage and compute.

In addition, the recent emergence of the U.2, M.2, and EDSFF form factors further broadens the potential use of NVMe-based SSDs across all applications.

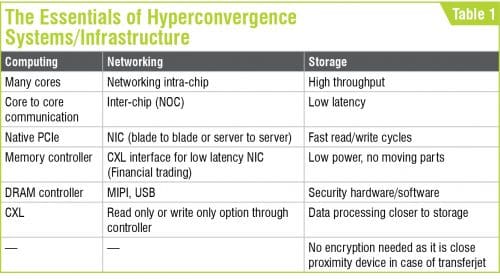

The table above gives the different elements and options that can form the enterprise computing systems of the future.

A careful look at the constituents of future HPC systems shows that the thin lines between compute and networking, networking and storage, and compute and storage are fading.

NVMe-based flash storage plays a critical role in reducing latency across the components of hyperconverged systems, and it is emerging as the choice for all storage solutions. Because of its inherent advantages, the PCIe interface has become the de facto choice in hyperconverged systems.

Moreover, the NVMe controller can adapt the SSD architecture to the latest interfaces, memory controllers, advances in flash cells, and native controllers in CPUs. Above all, the controller is the key to customising the drive for the countless applications that can be envisaged.

With a tsunami of data flowing from every application, highly efficient storage drives are set to become the de facto choice for future devices and technologies such as mobiles, laptops, HPC systems, edge computing, AI, ML, single-board computers, car infotainment, and more.

Dr Sankar Reddy has served as a faculty member at the Indian Institute of Science for fifteen years. He has built teams from scratch and nurtured them into world-class delivery teams in multi-gigabit data-rate design. He founded Terminus Circuits ten years ago.