AI’s heavy dependence on Nvidia GPUs is becoming economically, environmentally, and geopolitically unsustainable. As power consumption soars and access to high-end GPUs tightens, the industry is exploring alternatives. Hyperscalers are building custom AI chips, startups are rethinking AI hardware from scratch, and a growing movement is proving that AI inference can run efficiently on standard CPUs. Together, these shifts point to a more decentralised, affordable, and sustainable future for AI computing.

To the novice, it might seem as if artificial intelligence (AI) is a magical genie that grants everything you ask for with the snap of a finger, but techies know that a lot of muscle power (read: graphics processing units, or GPUs) goes into making it all work.

The tech infrastructure required for training and deploying AI systems is so vast that it may not even be sustainable in the future.

Environmentalists are already very concerned about the widespread use of GPUs for AI, as it guzzles power, increases carbon dioxide emissions, and uses alarming amounts of water to cool data centres.

Meanwhile, today’s volatile geopolitical landscape is putting GPUs out of reach for many countries, concentrating tech power in the hands of a few.

Today, when we refer to GPUs in the AI context, we almost invariably mean Nvidia’s GPUs, such as the A100, H100, and GB200. Tensor Cores that accelerate matrix operations, together with CUDA (Compute Unified Device Architecture), an effective parallel computing platform and programming model, have made Nvidia GPUs the prime choice for training large-scale AI models.

Among the latest from Nvidia’s stable are the GB200 superchips, which combine Blackwell GPUs and a Grace CPU into a single superchip, enabling massive generative AI (GenAI) workloads such as training and real-time inference of trillion-parameter large language models (LLMs). Market studies estimate that Nvidia holds more than 90% of the discrete GPU market today.


Over-dependence on Nvidia GPUs

Although Nvidia’s GPUs are technically superior, industry and academia are working hard to reduce the dependence of AI models on them. One obvious reason is the export restrictions imposed by the US government: many countries have limited or no access to the H100, A100, and GB200, and most others must make do with less capable cousins like the H20, or the GTX and RTX series GPUs.

There is also the cost aspect. These are expensive GPUs, and not everyone can afford them! From OpenAI and Google to xAI and Anthropic, all the AI majors are using thousands of Nvidia GPUs and waiting in line for more, so why would the price ever come down? Then, there is the question of supply vis-à-vis the burgeoning demand.

Finally, there is the huge issue of sustainability. Large data centres populated with powerful GPUs are a major environmental concern—the power consumed, the emissions, the e-waste generated, and of course the resources used in their manufacture.

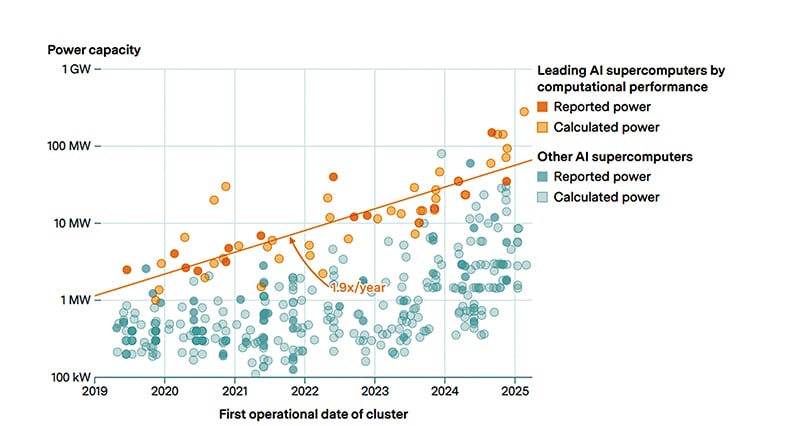

Picture this. According to an X post by Elon Musk in July this year, xAI’s flagship supercomputer Colossus 1 is now live, training the company’s Grok AI models. It runs on 230,000 GPUs, including 30,000 GB200s. Coming very soon is Colossus 2, with 550,000 GPUs, mainly GB200s and GB300s. And the roadmap goes up to 1 million GPUs, which implies an investment running into many billions of dollars! According to a report by Epoch AI, Colossus consumes around 280 megawatts of power, which, some sustainability reports claim, is enough to power more than a quarter of a million households! Epoch AI’s chart also shows that the power requirements of leading AI supercomputers are doubling every 13 months.
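To put these Colossus figures in perspective, here is a minimal back-of-envelope sketch. It assumes the "quarter million households" means exactly 250,000, and it applies Epoch AI’s 13-month doubling trend (reported for leading AI supercomputers in general) to a single 280 MW machine purely for illustration. The function names are hypothetical helpers, not from any report.

```python
# Back-of-envelope arithmetic on the Colossus figures quoted in the article.
# Assumptions (not from the source): exactly 250,000 households, and the
# industry-wide 13-month doubling trend applied to this one machine.

COLOSSUS_POWER_MW = 280      # reported draw (Epoch AI)
HOUSEHOLDS = 250_000         # assumption: "a quarter million" taken literally
DOUBLING_MONTHS = 13         # reported doubling period for leading AI supercomputers


def kw_per_household(power_mw: float, households: int) -> float:
    """Average continuous power per household, in kilowatts."""
    return power_mw * 1_000 / households


def projected_power_mw(current_mw: float, months: float,
                       doubling_months: float = DOUBLING_MONTHS) -> float:
    """Extrapolate power demand assuming steady exponential doubling."""
    return current_mw * 2 ** (months / doubling_months)


if __name__ == "__main__":
    per_home_kw = kw_per_household(COLOSSUS_POWER_MW, HOUSEHOLDS)
    print(f"{per_home_kw:.2f} kW per household")  # 1.12 kW
    # Convert the continuous draw into yearly energy per household (MWh).
    print(f"{per_home_kw * 24 * 365 / 1_000:.1f} MWh per household per year")  # 9.8
    # Project forward by one, two and four doubling periods.
    for months in (13, 26, 52):
        mw = projected_power_mw(COLOSSUS_POWER_MW, months)
        print(f"after {months} months: {mw:,.0f} MW")  # 560, 1,120, 4,480 MW
```

Roughly 1.1 kW of continuous draw per home (about 9.8 MWh a year) is a plausible household figure, so the claim is at least internally consistent. The projection is only a naive exponential: it shows how quickly a doubling trend compounds, not what any particular data centre will actually consume.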

Let us take a quick look at OpenAI’s ChatGPT, lest they feel left out. According to published estimates, training GPT-3 took approximately 1,287 megawatt-hours of electricity. The daily operation of ChatGPT is estimated to consume around 39.98 million kilowatt-hours. Research indicates that an average interaction with GPT-3, such as a short conversation with the user or drafting a 100-word email, may use around 500 ml of water to cool the data centre.
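The two energy estimates above are easier to compare once they share a unit. The following is a minimal sketch that takes the quoted figures at face value. The helper names are hypothetical, and the comparison assumes the daily figure is a steady average over 24 hours.

```python
# Rough comparison of the ChatGPT energy estimates quoted in the article.
# Both inputs are the reported estimates, taken at face value.

GPT3_TRAINING_MWH = 1_287          # reported one-off training energy
CHATGPT_DAILY_KWH = 39_980_000     # reported daily operating energy (estimate)


def average_power_mw(daily_kwh: float) -> float:
    """Convert a daily energy figure into an average continuous draw in MW,
    assuming consumption is spread evenly over 24 hours."""
    return daily_kwh / 24 / 1_000


def training_runs_per_day(daily_kwh: float, training_mwh: float) -> float:
    """How many GPT-3-sized training runs one day of operation equals."""
    return (daily_kwh / 1_000) / training_mwh


if __name__ == "__main__":
    print(f"average draw: {average_power_mw(CHATGPT_DAILY_KWH):,.0f} MW")
    runs = training_runs_per_day(CHATGPT_DAILY_KWH, GPT3_TRAINING_MWH)
    print(f"one day of operation = {runs:.1f} GPT-3 training runs")
```

On these numbers, a single day of operation would use roughly 31 times the energy of the entire GPT-3 training run, an average draw of about 1.67 GW. This illustrates why inference, not just training, dominates the energy debate over a model’s lifetime. The comparison is only as good as the underlying estimates, which vary widely between sources.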

We are, arguably, heading towards an apocalypse, thanks to AI technology!

Taking the bull by its two horns

Now, there is a two-pronged problem that the AI industry worldwide is trying to solve. The first challenge is to reduce the dependency on Nvidia. Let us face reality: many do not have access to its GPUs, some cannot afford them, and others do not want to wait in line! In response, some companies are developing customised AI chips for their specific needs, and some countries, including India, are encouraging the development of indigenous GPUs.

The second challenge is to reduce the computing power that AI models require, either by doing away with GPUs altogether, as Kompact AI does, or by using fewer of them, as DeepSeek has done. This will have a positive economic and environmental impact.

Now, let us take a high-level look at a few ongoing efforts from around the world that attempt to address one or both of these challenges.

Tech majors developing in-house AI chips