A new AI model enables autonomous robots to plan paths from just one image, with implications for robot perception and for the electronics that power these systems.

Researchers at the Skolkovo Institute of Science and Technology have developed a breakthrough generative AI model that lets robots determine safe navigation paths using only a single image, eliminating reliance on heavy mapping sensors and complex traditional algorithms. The new system, called SwarmDiffusion, applies a lightweight diffusion-based model to rapidly infer, from one 2D snapshot, where a robot should move and how to avoid obstacles. This marks a significant shift from conventional robotic systems, which typically require detailed 3D maps built from LIDAR, radar or multiple cameras, followed by computationally heavy path planning.

According to the researchers, the conventional approach works but is slow and resource-intensive, often needing terabytes of map data and high-power processors. In contrast, SwarmDiffusion builds an internal representation of traversable and risky areas directly from the input image and generates motion trajectories in milliseconds.

SwarmDiffusion combines two core components: a traversability predictor that uses a frozen visual encoder paired with features distilled from large vision-language models (VLMs), and a diffusion-based trajectory generation module that gradually refines a probable path toward the goal while avoiding hazards. The method produces feasible routes without detailed environmental reconstructions, a first for many robotic platforms.
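The article does not publish code, so the following is only a toy sketch of the general idea behind the second component: start from a noisy candidate path and refine it step by step toward a smooth route that avoids risky areas. It is not SwarmDiffusion's implementation. It assumes a hand-written risk field (`risk`, a single Gaussian obstacle) in place of the learned traversability predictor, and it swaps the learned diffusion denoiser for a fixed update rule: neighbour smoothing plus a step down the risk gradient, with injected noise that shrinks to zero over the iterations. All names (`risk`, `risk_grad`, `refine_path`, `mean_risk`) and parameter values are hypothetical.

```python
import math
import random

# Hypothetical stand-in for a traversability predictor's output: a smooth
# "risk" field with one obstacle. A real system would infer this from the
# input image with a learned model; here it is hand-written.
OBSTACLE = (5.0, 5.0)  # assumed obstacle centre
SPREAD = 1.0           # assumed obstacle size scale


def risk(x, y):
    """Risk in (0, 1]: 1.0 at the obstacle centre, near 0 far away."""
    dx, dy = x - OBSTACLE[0], y - OBSTACLE[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * SPREAD ** 2))


def risk_grad(x, y):
    """Analytic gradient of risk(); it points towards the obstacle centre."""
    r = risk(x, y)
    return (-r * (x - OBSTACLE[0]) / SPREAD ** 2,
            -r * (y - OBSTACLE[1]) / SPREAD ** 2)


def refine_path(start, goal, n=21, steps=60, alpha=0.5, beta=1.5,
                sigma0=1.0, seed=0, bounds=(0.0, 10.0)):
    """Toy annealed refinement (not a learned diffusion model): begin with a
    noisy path, then repeatedly smooth it, step away from high risk and add
    noise whose scale decays linearly to zero. Endpoints stay fixed."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Initial sample: straight line plus heavy Gaussian noise on interior points.
    path = []
    for i in range(n):
        t = i / (n - 1)
        x = start[0] + t * (goal[0] - start[0])
        y = start[1] + t * (goal[1] - start[1])
        if 0 < i < n - 1:
            x += rng.gauss(0.0, sigma0)
            y += rng.gauss(0.0, sigma0)
        path.append((x, y))

    for k in range(steps):
        sigma = sigma0 * (1 - (k + 1) / steps)  # reaches exactly 0 on the last step
        new_path = [path[0]]
        for i in range(1, n - 1):
            (x0, y0), (x, y), (x1, y1) = path[i - 1], path[i], path[i + 1]
            gx, gy = risk_grad(x, y)
            # Pull towards neighbours' midpoint, move down the risk gradient, add noise.
            x += alpha * ((x0 + x1) / 2 - x) - beta * gx + rng.gauss(0.0, sigma)
            y += alpha * ((y0 + y1) / 2 - y) - beta * gy + rng.gauss(0.0, sigma)
            # Keep waypoints inside the (assumed) map bounds.
            new_path.append((min(max(x, lo), hi), min(max(y, lo), hi)))
        new_path.append(path[-1])
        path = new_path
    return path


def mean_risk(path):
    """Average risk over the waypoints of a path."""
    return sum(risk(x, y) for x, y in path) / len(path)


if __name__ == "__main__":
    straight = [(0.5 * i, 5.0) for i in range(21)]  # cuts through the obstacle
    planned = refine_path((0.0, 5.0), (10.0, 5.0))
    print("straight:", round(mean_risk(straight), 3))
    print("refined: ", round(mean_risk(planned), 3))
```

Early iterations use large noise so waypoints can explore either side of the obstacle; as the noise anneals, smoothing and the risk gradient settle them into a clean detour. A diffusion-based planner learns that refinement step from data rather than hard-coding it as done here.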

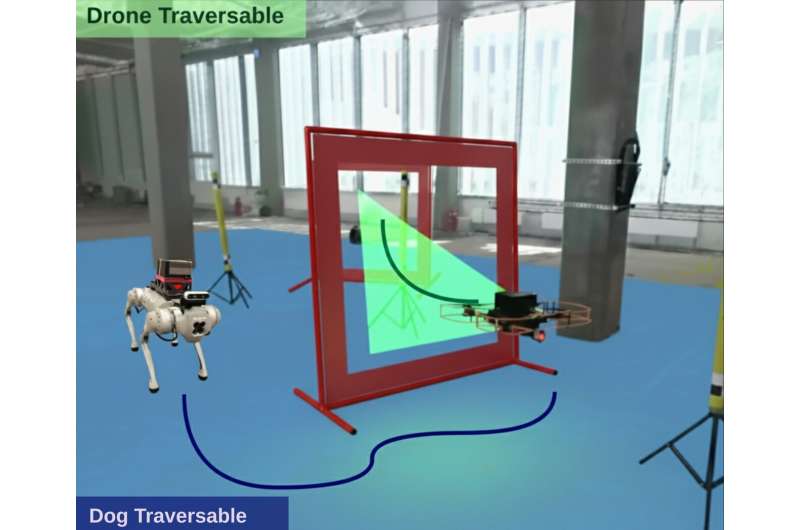

Tests show the model works across varied platforms, including legged robots and aerial drones, suggesting broad applicability in real-world scenarios where robots must operate in dynamic or unfamiliar settings. In trials, SwarmDiffusion reliably computed safe movement plans in about 90 milliseconds, offering speed and simplicity far beyond those of standard robotics stacks.

By removing the need for detailed maps and extensive onboard sensors, this approach could slash the electronics and compute requirements of autonomous machines while expanding their usefulness in environments such as warehouses, industrial facilities, agriculture, and search-and-rescue missions. The team envisions future upgrades in which a single model could guide groups of heterogeneous robots simultaneously, enabling collaborative missions and further reducing hardware complexity.