This article aims to guide and inspire beginners and professionals alike to build sustainable global careers in AI, IoT, and embedded systems by understanding how technology evolves, mastering the fundamentals, and committing to continuous learning in an ethical, practical way. Abhijeet Desai of HCLTech America laid out this roadmap in an engaging session at the EFY Expo in Pune.

Artificial intelligence (AI) has evolved significantly from early concepts to today’s generative tools. By 2035, over 75 billion devices are projected to be connected globally, underscoring the explosive growth of the Internet of Things (IoT). In parallel, the AI market is expected to reach $2 trillion by 2030.

These estimates draw on respected firms such as McKinsey, which base their findings on extensive interviews with global business leaders, including CTOs, CIOs, CFOs, and CEOs, across a range of industries.

To grasp AI’s trajectory, it helps to consider its impact over three timeframes: short, medium, and long term. In the short term, AI will drive productivity gains, much as automation, computing, and software did in previous decades. In the medium term, the focus will shift to full automation and sharper efficiency. In the long term, AI will introduce greater complexity and fuel demand for increasingly intelligent systems. Tools like ChatGPT may seem sophisticated today, but they will likely grow far more advanced.

Despite the concerns, however, AI is not poised to replace all jobs. Instead, it is prompting companies such as Microsoft, IBM, and Google to restructure and invest in AI infrastructure and talent, which is part of the natural business cycle.

Smart devices are becoming ubiquitous, from intelligent shoes to smart showers. Hardware intelligence and embedded systems are evolving rapidly, supported by technologies such as augmented reality (AR), chip design, and advanced manufacturing. In China, one hospital reportedly operates entirely with robots, without the need for human intervention. In the US, banks such as Bank of America are reducing their number of physical branches as a result of AI-driven innovations.

Real-time systems and edge computing are also transforming development. Processing data at the edge, rather than sending all of it to the cloud, reduces latency and saves bandwidth and cost. Business logic and raw data remain local, and only critical, aggregated insights are transmitted to the cloud.
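To make the edge pattern concrete, here is a minimal Python sketch, assuming a device that buffers a window of sensor readings, reduces it locally to a compact summary, and forwards that summary only when it contains something worth reporting. The `Summary` fields, the functions `summarize_window` and `should_upload`, and the alert threshold are hypothetical illustrations rather than part of any specific IoT platform, and the actual cloud upload is deliberately left out.

```python
from dataclasses import dataclass, asdict
from statistics import mean


@dataclass
class Summary:
    """Compact, aggregated view of one window of readings (hypothetical schema)."""
    sensor_id: str
    count: int
    mean: float
    minimum: float
    maximum: float
    alerts: int  # number of readings above the alert threshold


def summarize_window(sensor_id, readings, alert_threshold):
    """Reduce raw readings to a summary on the edge device; raw data stays local."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return Summary(
        sensor_id=sensor_id,
        count=len(readings),
        mean=round(mean(readings), 2),
        minimum=min(readings),
        maximum=max(readings),
        alerts=sum(1 for r in readings if r > alert_threshold),
    )


def should_upload(summary):
    """Hypothetical policy: forward only windows that contain at least one alert."""
    return summary.alerts > 0


if __name__ == "__main__":
    raw = [21.5, 22.0, 21.8, 35.2, 22.1]  # e.g. temperature samples in °C
    summary = summarize_window("temp-01", raw, alert_threshold=30.0)
    if should_upload(summary):
        payload = asdict(summary)  # a small dict instead of the full raw stream
        print(payload)
```

Five raw samples shrink to one small record here; on a real device the window would be much larger, which is where the bandwidth savings come from. The upload policy is the piece most likely to differ in practice.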

The demand for quick, cost-effective innovation has made edge computing and smart IoT essential to Industry 4.0. As roles evolve, continuous learning remains key: professionals must stay curious and adapt to a changing technological landscape.

The journey of 75 years…

The timeline of AI begins in 1950. Although the pioneers of that era could not have imagined today’s advances, their work laid the foundation for what exists in 2025, including tools like ChatGPT. Where AI goes in the next 75 years remains to be seen, but one thing is certain: evolution is constant, and change is inevitable.

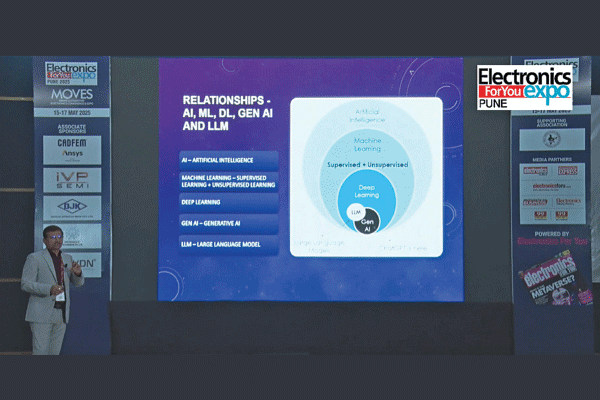

Many readers will have seen the familiar AI timeline diagram on various platforms. At its core, AI is software designed to mimic human intelligence. Machine learning is a subset of AI, and deep learning is in turn a subset of machine learning. Within deep learning lies generative AI, where large language models (LLMs) represent the most significant current development.

Over the last decade, generative models have advanced remarkably. Generative adversarial networks (GANs), introduced in 2014, were an early milestone in generative AI that helped pave the way for later models such as LLMs. Progress has been rapid since the early 2010s, driven by breakthroughs in deep learning.

Three key concepts are worth remembering as the field progresses: generative adversarial networks, large language models, and transformers. A well-known 2017 research paper from Google, titled “Attention Is All You Need”, introduced the transformer architecture, which continues to shape the future of AI.
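The core operation that the transformer paper introduced is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: each query is compared with every key, the scores are normalized into weights, and the output is a weighted mix of the values. The plain-Python sketch below shows only that single-head operation, with no masking, learned projections, or multi-head machinery, and the function names are illustrative rather than taken from any library.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Single-head attention on lists of lists.

    Q: n x d_k queries, K: m x d_k keys, V: m x d_v values.
    Returns an n x d_v list: one weighted mix of the values per query.
    """
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors, column by column
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


if __name__ == "__main__":
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[1.0, 0.0], [0.0, 1.0]]
    print(scaled_dot_product_attention(Q, K, V))
```

Because the query matches the first key more closely, the output leans toward the first value vector. Production transformers run the same computation as batched matrix multiplications across many heads and layers.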

What is Generative AI?