New memristor-based artificial neurons emit light to enable denser, more efficient 3D AI chips, pointing to a future beyond power-hungry GPUs.

Researchers have demonstrated a new class of light-emitting artificial neurons that could reshape how AI hardware is built and deployed, addressing the energy and scaling limitations that plague today’s deep learning systems. The innovation, detailed in Nature Electronics, comes from a collaboration between the Hong Kong University of Science and Technology, ETH Zurich and Université de Bourgogne Europe.

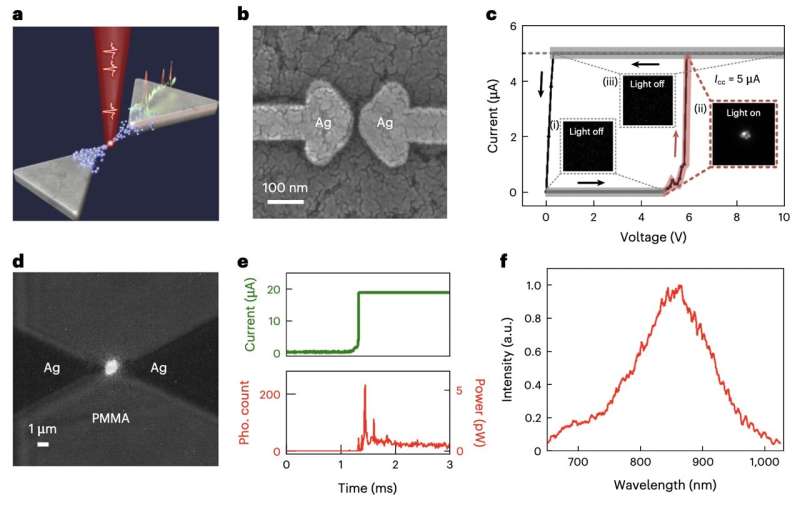

At the core of this advance are memristive blinking neurons: nanoscale devices built from silver and an insulating polymer that both integrate electrical signals and emit light pulses when they fire. Whereas conventional AI chips rely on dense wiring and bulky circuitry to shuttle data between components, these neurons communicate with light. This removes many wiring constraints and makes three-dimensional, wire-free neural structures possible.
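The integrate-then-flash behavior described above resembles the textbook leaky integrate-and-fire (LIF) neuron used in spiking neural networks. The sketch below is a generic LIF model, not the device physics reported in the paper. The function name `lif_spike_times`, the leak factor and the threshold are hypothetical choices made for illustration, and each spike stands in for one emitted light pulse.

```python
def lif_spike_times(inputs, threshold=1.0, leak=0.9):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Hypothetical illustration: each spike stands in for one emitted light
    pulse. The threshold and leak values are not taken from the reported devices.
    """
    potential = 0.0
    spikes = []
    for t, current in enumerate(inputs):
        # Let part of the stored potential leak away, then integrate the new input.
        potential = potential * leak + current
        if potential >= threshold:
            # Fire (the "light pulse" in this analogy) and reset the potential.
            spikes.append(t)
            potential = 0.0
    return spikes


# A steady sub-threshold input accumulates until it crosses the threshold.
print(lif_spike_times([0.3] * 10))  # → [3, 7]
```

A strong input fires the neuron immediately, while weak inputs must accumulate over several steps first. In the hardware described here, the output of each firing event would be a light pulse rather than a voltage sent down a wire.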

This shift to photonically linked networks matters because light can travel between neurons without electrical connections. That cuts both the energy lost in interconnects and the space they occupy. The result is a path toward compact, high-density AI hardware that scales in volume rather than in footprint, in contrast to today's planar complementary metal–oxide–semiconductor (CMOS) designs.

Early tests of networks built from these light-emitting neurons are promising. One three-dimensional spiking neural network reached roughly 91.5% accuracy on a speech-classification task drawn from a public Google dataset. Another dense array achieved about 92.3% accuracy on the MNIST handwritten-digit recognition benchmark. Together, these results suggest the approach is practically viable.

The implications extend across electronics and AI. By enabling energy-efficient computation, reducing reliance on power-hungry GPUs, and allowing true 3D neural stacking, this hardware could underpin next-generation edge AI and neuromorphic systems. Challenges remain, including integrating these devices with existing manufacturing processes and broadening the range of tasks they can handle. Even so, the work is an intriguing step toward brain-inspired computing that is both power-lean and spatially compact.