A new white paper explores how high-voltage DC architecture and wide bandgap semiconductors are transforming the efficiency, scalability, and sustainability of next-generation AI data centers.

The semiconductor industry is undergoing a major transformation as data centers scale up to meet the power demands of AI infrastructure. A new white paper by ROHM details the emergence of the 800V DC architecture—a high-efficiency, gigawatt-scale power delivery system designed to replace conventional 48V and 12V setups now hitting their operational limits.

At the heart of this shift is a rethinking of power distribution. In traditional systems, AC-DC conversion occurs within each server rack. The new 800V model moves this conversion to centralized power racks, which feed the IT racks over higher-voltage DC lines. Because delivering the same power at a higher voltage requires proportionally less current, and resistive loss scales with the square of the current, this approach sharply reduces transmission losses. It also improves energy density and boosts system reliability, all critical factors for AI factories running vast GPU clusters.
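
To make the transmission-loss argument concrete, the following is a minimal sketch of the resistive (I²R) loss in a DC feed at 48V versus 800V. The 100 kW rack load and 1 mΩ feed resistance are hypothetical illustration values, not figures from the white paper, and the model ignores conversion losses and assumes the same conductor at both voltages.

```python
def conduction_loss_w(power_w: float, bus_voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in a DC feed delivering power_w at bus_voltage_v."""
    current_a = power_w / bus_voltage_v  # I = P / V
    return current_a ** 2 * resistance_ohm  # P_loss = I^2 * R


# Hypothetical illustration values (assumptions, not taken from the white paper).
RACK_POWER_W = 100_000.0      # assumed 100 kW rack load
FEED_RESISTANCE_OHM = 0.001   # assumed 1 milliohm feed resistance

loss_48v = conduction_loss_w(RACK_POWER_W, 48.0, FEED_RESISTANCE_OHM)
loss_800v = conduction_loss_w(RACK_POWER_W, 800.0, FEED_RESISTANCE_OHM)
loss_ratio = loss_48v / loss_800v  # equals (800 / 48) ** 2 for a fixed load and resistance

print(f"48V feed loss:  {loss_48v:.1f} W")
print(f"800V feed loss: {loss_800v:.1f} W")
print(f"Loss ratio (48V / 800V): {loss_ratio:.1f}x")
```

Under these assumptions the ratio depends only on the two voltages, so the same conductor carrying the same power dissipates roughly 278 times less heat at 800V than at 48V. Real installations would size conductors differently at each voltage, so the practical benefit is typically split between lower losses and less copper.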

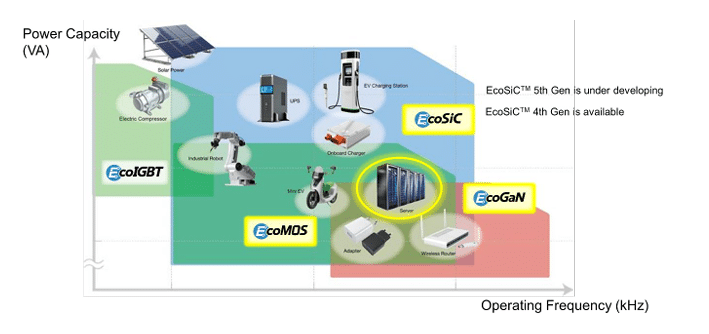

Wide bandgap semiconductors, particularly silicon carbide (SiC) and gallium nitride (GaN), play a pivotal role in realizing these benefits. Their superior efficiency and high-frequency performance enable smaller, cooler-running systems. The paper highlights how SiC-based EcoSiC™ devices and GaN-based EcoGaN™ solutions achieve ultra-fast switching and high power density. Complementary analog ICs with Nano Pulse Control™ technology further enhance gate drive stability and switching precision.

As AI workloads push data centers toward multi-gigawatt footprints, power systems must evolve to remain sustainable and scalable. The 800V architecture offers a compelling path forward, delivering better performance per watt while reducing operational costs and component count.

The 800V roadmap reflects broader industry collaboration among major chipmakers, power system designers, and cloud operators. Ongoing partnerships with technology leaders, including joint efforts initiated in 2022, are accelerating adoption of SiC and GaN power solutions for next-generation computing.

The white paper’s simulations and case studies illustrate how optimized conversion topologies and thermal designs can push data center efficiency beyond today’s benchmarks, setting a new standard for AI infrastructure design in the era of electrified intelligence.