Learn how large language models can run locally with older PCs, using CPU- and NPU-based inference on a pocket-sized device while preserving data privacy and offline operation.

As large language models continue to grow in size, their deployment has become tightly coupled with cloud infrastructure, high-end GPUs and constant internet connectivity. This reliance limits accessibility, increases cost and raises concerns around energy use and data privacy. Running large-scale AI locally, especially on older hardware, has long been considered impractical.

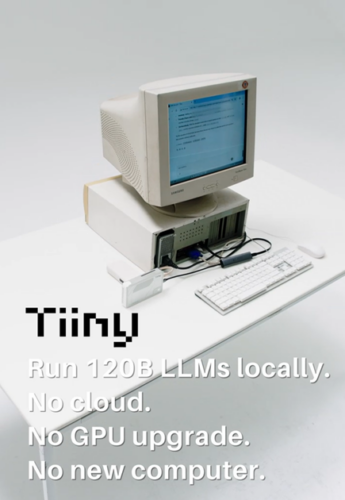

Addressing this challenge, Tiiny AI has demonstrated a 120-billion-parameter large language model running fully offline on a 14-year-old consumer PC. The model operates without internet access, cloud servers or GPUs, marking what is described as the first known instance of a model at this scale executing locally on legacy hardware. The setup pairs a 2011 PC, powered by an Intel Core i3-530 processor with 2GB of DDR3 memory, with a pocket-sized personal AI supercomputer, Tiiny AI Pocket Lab, through which the model runs.
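To put the 2GB host figure in context, a rough calculation shows why the model's weights cannot sit in the old PC's memory. The article does not state the numeric precision used, so this sketch simply compares common quantization widths; `weight_footprint_gb` is a hypothetical helper, and the figures cover raw weights only, ignoring the KV cache and activations.

```python
# Rough weight-storage estimate for a 120-billion-parameter model.
# Assumption: the precision is not stated in the article, so several common
# quantization widths are compared. KV cache and activations are ignored.

PARAMS = 120e9      # 120 billion parameters (from the article)
HOST_RAM_GB = 2.0   # 2GB DDR3 in the 2011 host PC (from the article)

def weight_footprint_gb(params: float, bits_per_param: int) -> float:
    """Return the raw weight size in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9

for bits in (16, 8, 4):
    size = weight_footprint_gb(PARAMS, bits)
    print(f"{bits:>2}-bit weights: {size:6.1f} GB "
          f"({size / HOST_RAM_GB:.0f}x the host's RAM)")
```

Even at 4 bits per parameter the weights alone come to about 60 GB, roughly 30 times the host's RAM, which is consistent with the pocket-sized device, rather than the 2011 PC, holding and running the model.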

The demonstration was recorded in a single uninterrupted take and shows the model performing reasoning and analytical tasks locally at close to 20 tokens per second, with network connectivity disabled throughout. This is made possible by proprietary techniques, including neuron-level sparse activation and a heterogeneous inference engine that distributes workloads across CPU and NPU resources, which together allow large models to run at significantly lower power than traditional GPU-based systems.
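Tiiny AI has not published how its sparse activation or heterogeneous engine works, so the following is only a generic sketch of the idea, in the spirit of publicly described approaches such as PowerInfer, and not the company's implementation. In a ReLU feed-forward layer, neurons whose pre-activation is not positive contribute nothing to the output, so skipping them leaves the result unchanged while saving compute. `oracle_active` is a hypothetical stand-in that computes the exact active set (a real system would use a cheap predictor instead), and `split_hot_cold` illustrates one assumed way to send frequently active neurons to a faster unit such as an NPU and the rest to the CPU.

```python
# Illustrative sketch of neuron-level sparse activation in a ReLU feed-forward
# layer, plus a frequency-based hot/cold split of neurons across two units.
# Assumptions: the toy ReLU FFN, the oracle predictor and the split policy are
# hypothetical; they are not Tiiny AI's proprietary method.
import random
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]

def relu(v: float) -> float:
    return v if v > 0.0 else 0.0

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def dense_ffn(x: Sequence[float], w_up: Matrix, w_down: Matrix) -> List[float]:
    """Baseline: evaluate every hidden neuron."""
    hidden = [relu(dot(row, x)) for row in w_up]
    return [dot(row, hidden) for row in w_down]

def sparse_ffn(x: Sequence[float], w_up: Matrix, w_down: Matrix,
               active: Sequence[int]) -> List[float]:
    """Evaluate only the neurons in `active`; all others are treated as zero."""
    hidden = {j: relu(dot(w_up[j], x)) for j in active}
    return [sum(row[j] * h for j, h in hidden.items()) for row in w_down]

def oracle_active(x: Sequence[float], w_up: Matrix) -> List[int]:
    """Hypothetical predictor: returns exactly the neurons that fire.
    It costs a full up-projection, so it only serves to check correctness."""
    return [j for j, row in enumerate(w_up) if dot(row, x) > 0.0]

def split_hot_cold(freq: Dict[int, int], fast_slots: int) -> Tuple[List[int], List[int]]:
    """Rank neurons by how often they fired; the top `fast_slots` ("hot") go to
    the faster unit (e.g. an NPU), the remaining ("cold") ones to the CPU."""
    ranked = sorted(freq, key=lambda j: freq[j], reverse=True)
    return ranked[:fast_slots], ranked[fast_slots:]

# Usage on a toy layer with random weights.
random.seed(0)
D_MODEL, D_FF = 8, 32
w_up = [[random.gauss(0.0, 1.0) for _ in range(D_MODEL)] for _ in range(D_FF)]
w_down = [[random.gauss(0.0, 1.0) for _ in range(D_FF)] for _ in range(D_MODEL)]

freq = {j: 0 for j in range(D_FF)}
computed = 0
for _ in range(100):
    x = [random.gauss(0.0, 1.0) for _ in range(D_MODEL)]
    active = oracle_active(x, w_up)
    dense = dense_ffn(x, w_up, w_down)
    sparse = sparse_ffn(x, w_up, w_down, active)
    # Skipping inactive neurons must not change the layer's output.
    assert all(abs(a - b) < 1e-9 for a, b in zip(dense, sparse))
    computed += len(active)
    for j in active:
        freq[j] += 1

hot, cold = split_hot_cold(freq, fast_slots=8)
print(f"average neurons computed per input: {computed / 100:.1f} of {D_FF}")
print(f"hot (fast unit): {len(hot)}, cold (CPU): {len(cold)}")
```

With random weights roughly half the neurons fire, so the savings in this toy are modest; in practice the benefit depends on how sparse a trained model's activations actually are and how accurate the predictor is.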

Key highlights of the demonstration include:

- 120B-parameter LLM running fully offline

- No internet, cloud infrastructure or GPU required

- Legacy host system with Intel Core i3-530 and 2GB RAM

- Sustained generation speeds near 20 tokens per second

- Power-efficient operation within a 65W system envelope

- Support for on-device inference with full data privacy
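The power and speed figures above allow a quick energy-per-token estimate. This sketch treats the full 65W envelope as the continuous draw and 20 tokens per second as the rate; both are approximations, and the article does not say whether the envelope includes the host PC, so the result is only an order-of-magnitude figure. The helper names are hypothetical.

```python
# Back-of-the-envelope energy per generated token.
# Assumptions: the whole 65 W envelope is drawn continuously and throughput is
# exactly 20 tokens/s; neither is a measured figure from the article.

SYSTEM_POWER_W = 65.0     # system envelope quoted in the article
TOKENS_PER_SECOND = 20.0  # "near 20 tokens per second"

def joules_per_token(power_w: float, tokens_per_s: float) -> float:
    """Energy per token = power (J/s) divided by throughput (tokens/s)."""
    if tokens_per_s <= 0:
        raise ValueError("throughput must be positive")
    return power_w / tokens_per_s

def tokens_per_kwh(power_w: float, tokens_per_s: float) -> float:
    """Tokens generated per kilowatt-hour (1 kWh = 3.6e6 J) at this rate."""
    return 3.6e6 / joules_per_token(power_w, tokens_per_s)

print(f"{joules_per_token(SYSTEM_POWER_W, TOKENS_PER_SECOND):.2f} J/token")
print(f"{tokens_per_kwh(SYSTEM_POWER_W, TOKENS_PER_SECOND):,.0f} tokens/kWh")
```

Run as-is, this prints 3.25 J/token and about 1.1 million tokens per kWh. Since the actual speed is described as approaching rather than exceeding 20 tokens per second, the real energy per token could be somewhat higher if the full envelope is in use.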

Samar Bhoj, Director of GTM (go-to-market) at Tiiny AI, says, “We are showing that large-model intelligence no longer needs massive GPU clusters or cloud infrastructure. With Tiiny AI Pocket Lab, advanced AI can run privately, offline and on everyday hardware, even a 14-year-old PC. The future of AI belongs to people, not data centres.”