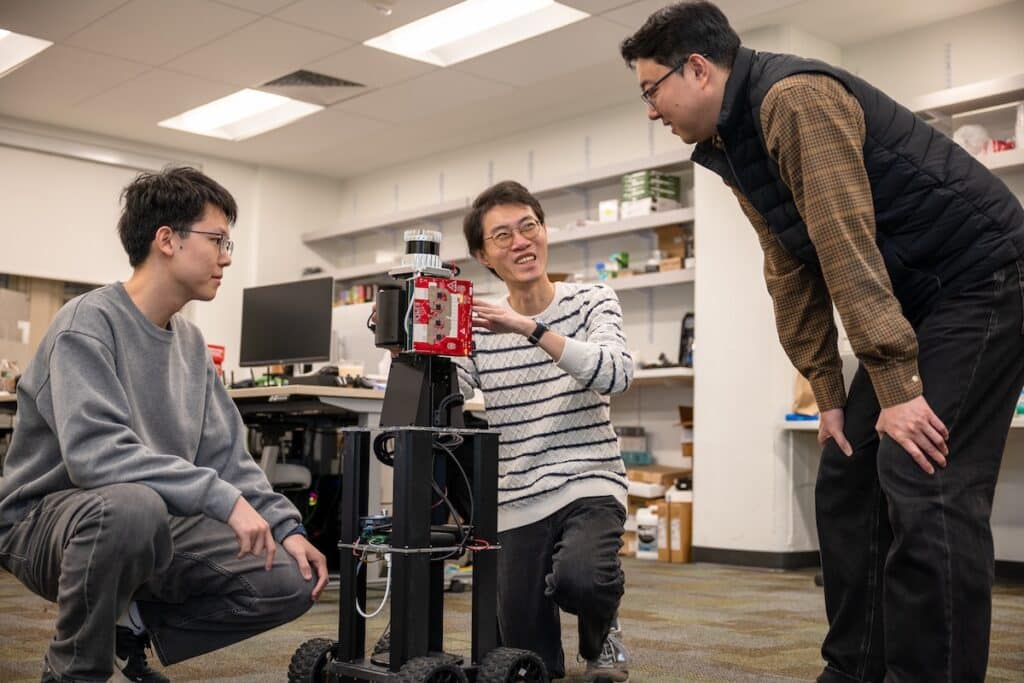

Designed for real-world deployment, HoloRadar complements LiDAR by providing holographic radio sensing beyond the conventional line of sight.

Researchers at the University of Pennsylvania have developed HoloRadar, a radio-based sensing system that enables robots to reconstruct three-dimensional scenes beyond their direct line of sight. By processing reflected radio waves with artificial intelligence, the system allows machines to detect hidden objects, such as pedestrians or obstacles positioned around corners.

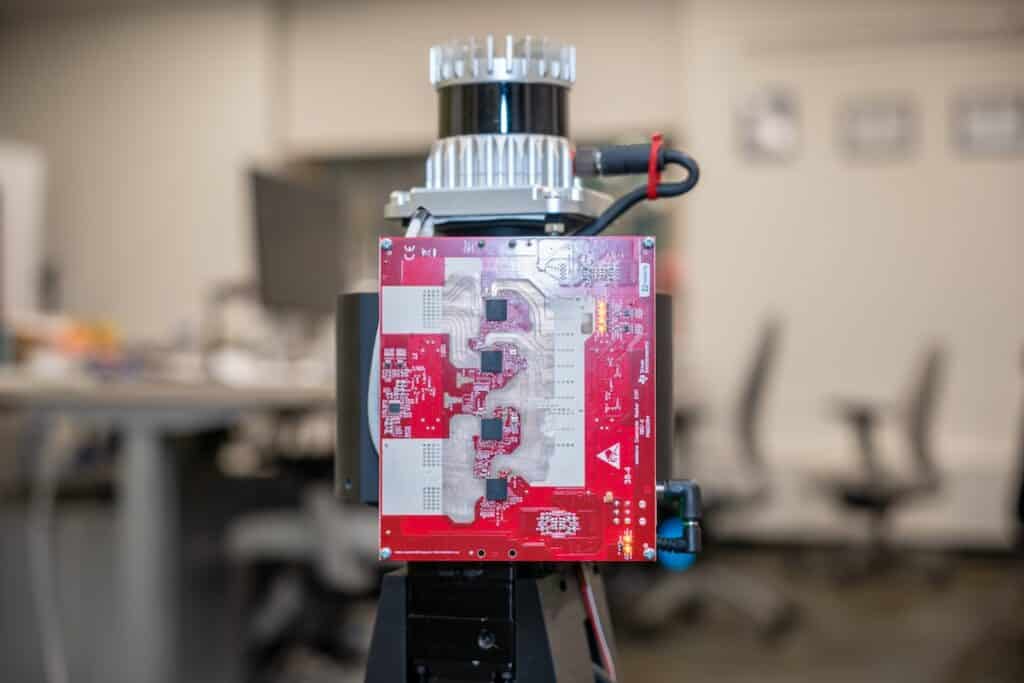

Unlike non-line-of-sight techniques that depend on visible light, the technology functions in darkness and under changing lighting conditions. It relies on radio waves, which have much longer wavelengths than visible light. Although longer wavelengths are typically seen as limiting image resolution, the researchers turned this property into an advantage. Because radio wavelengths are far larger than the microscopic surface variations on walls, floors and ceilings, those flat surfaces reflect signals in predictable ways, effectively acting as mirrors.
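The mirror effect can be illustrated with the Rayleigh roughness criterion, a standard rule of thumb that treats a surface as smooth when its height variation is below the wavelength divided by 8 cos θ, where θ is the angle of incidence. The sketch below is only an illustration of that rule, not the researchers' method. The 77 GHz radar frequency and the 50-micrometre wall roughness are assumed values chosen for the example; the article specifies neither.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def wavelength_m(frequency_hz):
    """Free-space wavelength for a given frequency."""
    return SPEED_OF_LIGHT / frequency_hz


def rayleigh_max_roughness_m(wavelength, incidence_deg=0.0):
    """Largest height variation still considered smooth (Rayleigh criterion)."""
    return wavelength / (8.0 * math.cos(math.radians(incidence_deg)))


def is_mirror_like(roughness_m, wavelength, incidence_deg=0.0):
    """True when the surface is smooth enough to reflect specularly."""
    return roughness_m < rayleigh_max_roughness_m(wavelength, incidence_deg)


# Assumed values for illustration only; neither is given in the article.
ASSUMED_RADAR_HZ = 77e9            # hypothetical millimetre-wave radar band
ASSUMED_WALL_ROUGHNESS_M = 50e-6   # hypothetical painted-wall roughness
GREEN_LIGHT_M = 550e-9             # approximate wavelength of green light

radar_wavelength = wavelength_m(ASSUMED_RADAR_HZ)

for label, lam in [("radar", radar_wavelength), ("visible light", GREEN_LIGHT_M)]:
    verdict = "mirror-like" if is_mirror_like(ASSUMED_WALL_ROUGHNESS_M, lam) else "diffuse"
    print(f"{label}: wavelength {lam:.3e} m -> wall looks {verdict}")
```

Under these assumptions the same wall scatters visible light diffusely but behaves like a mirror for the radar signal, which is the property the system exploits.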

By capturing and analyzing these reflections, the system reconstructs environments outside a robot’s direct field of view. The idea is comparable to how drivers use mirrors at blind intersections, except here the surrounding environment itself becomes a reflective network.

Designed for real-world deployment, the platform runs in real time on a mobile robot and does not require controlled lighting. Earlier radio-based systems often depended on bulky, slow scanning equipment, which restricted their practical use. This approach is compact and mobile, and it is intended to complement existing sensors rather than replace them. For instance, autonomous vehicles commonly use LiDAR to detect objects directly ahead; this system extends perception beyond that visible range, giving machines more time to react.

Interpreting the reflections presents a technical challenge, as a single radio pulse can bounce multiple times before returning to the sensor. To solve this, the team developed a two-stage AI framework that combines machine learning with physics-based modeling. The first stage enhances raw radio signals and separates overlapping returns. The second stage traces those reflections backward, reversing mirror-like effects to reconstruct the true 3D scene.
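The geometric idea behind "reversing mirror-like effects" can be sketched in a few lines. An object seen via a flat wall appears as a ghost behind that wall, and reflecting the ghost back across the wall plane recovers the true position. The code below is a minimal illustration under strong assumptions: the wall planes are already known and the bounce order is given. It is not the team's AI framework, and the function names `reflect_across_plane` and `unfold_ghost` are hypothetical.

```python
import math


def reflect_across_plane(point, plane_point, plane_normal):
    """Mirror a 3D point across the plane through plane_point with the given normal."""
    length = math.sqrt(sum(c * c for c in plane_normal))
    unit = [c / length for c in plane_normal]
    # Signed distance from the point to the plane along the unit normal.
    distance = sum((p - q) * n for p, q, n in zip(point, plane_point, unit))
    return tuple(p - 2.0 * distance * n for p, n in zip(point, unit))


def unfold_ghost(ghost, mirrors):
    """Undo a chain of mirror bounces.

    `mirrors` lists (plane_point, plane_normal) pairs in the order the
    outgoing signal hit them after leaving the sensor.
    """
    position = ghost
    for plane_point, plane_normal in mirrors:
        position = reflect_across_plane(position, plane_point, plane_normal)
    return position


# Hypothetical scene: wall A is the plane x = 2, wall B is the plane y = 3.
wall_a = ((2.0, 0.0, 0.0), (1.0, 0.0, 0.0))
wall_b = ((0.0, 3.0, 0.0), (0.0, 1.0, 0.0))

single_bounce = unfold_ghost((3.0, 1.0, 0.0), [wall_a])
double_bounce = unfold_ghost((3.0, 4.0, 0.0), [wall_a, wall_b])
print("single bounce:", single_bounce)
print("double bounce:", double_bounce)
```

In practice the hard part is what this sketch takes for granted: working out which returns are ghosts, which surfaces produced them, and in what order, which is the role the article assigns to the two-stage framework.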

“This is an important step toward giving robots a more complete understanding of their surroundings,” said Mingmin Zhao, Assistant Professor in Computer and Information Science and senior author of the study. “Our long-term goal is to enable machines to operate safely and intelligently in the dynamic and complex environments humans navigate every day.”