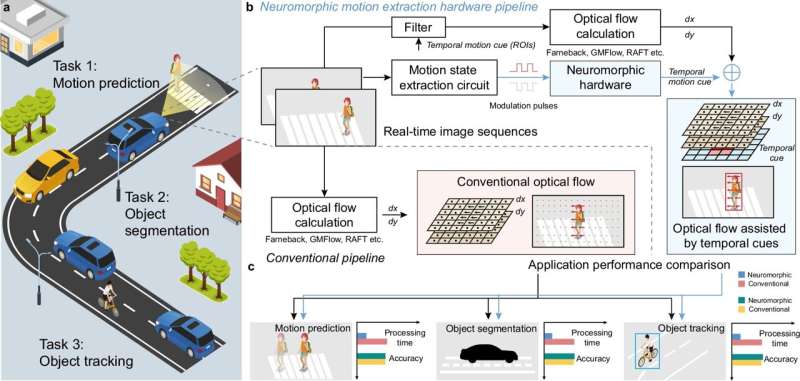

New neuromorphic motion-detection hardware slashes processing delays in robots and autonomous vehicles, promising faster reactions and safer navigation.

Robots and autonomous systems may soon understand and react to motion far more quickly. Researchers have developed a bio-inspired neuromorphic chip that mimics human visual attention to speed up motion perception, potentially improving response times for robots, self-driving cars, drones and other autonomous platforms.

At the core of the innovation is a hardware-level filter that emulates how human vision prioritises changes in brightness and movement rather than processing whole visual scenes. By integrating a 4×4 array of specialised transistors, the chip identifies areas of interest in a scene and sends only those to the main processor. This reduces the data pipeline and accelerates visual processing substantially.
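The filtering idea can be illustrated in software. The following is a minimal sketch, not the chip's actual design: it assumes the scene is split into a 4×4 grid and that a cell counts as "of interest" when its average brightness changes by more than a threshold between two frames. The function names, the threshold value and the frame size are all hypothetical, and the real device does this in hardware rather than in code.

```python
# Software sketch of change-driven region-of-interest selection.
# All names and values are illustrative assumptions; the chip described
# in the article performs its filtering in hardware.

GRID = 4  # the article describes a 4x4 array of transistors


def cell_means(frame, grid=GRID):
    """Average brightness of each cell when the frame is split into grid x grid tiles."""
    rows, cols = len(frame), len(frame[0])
    ch, cw = rows // grid, cols // grid  # cell height/width; assumes even division
    means = []
    for gy in range(grid):
        row = []
        for gx in range(grid):
            total = sum(
                frame[y][x]
                for y in range(gy * ch, (gy + 1) * ch)
                for x in range(gx * cw, (gx + 1) * cw)
            )
            row.append(total / (ch * cw))
        means.append(row)
    return means


def regions_of_interest(prev_frame, curr_frame, threshold=10.0, grid=GRID):
    """Return (row, col) indices of cells whose mean brightness changed by more than threshold."""
    prev = cell_means(prev_frame, grid)
    curr = cell_means(curr_frame, grid)
    return [
        (gy, gx)
        for gy in range(grid)
        for gx in range(grid)
        if abs(curr[gy][gx] - prev[gy][gx]) > threshold
    ]


# Usage: an 8x8 static scene in which one 2x2 patch brightens between frames.
prev_frame = [[50] * 8 for _ in range(8)]
curr_frame = [row[:] for row in prev_frame]
for y in range(2, 4):
    for x in range(4, 6):
        curr_frame[y][x] = 200

roi = regions_of_interest(prev_frame, curr_frame)
print(roi)  # [(1, 2)]
```

Only the one changed cell out of sixteen would be passed on for further processing in this toy case, which is the sense in which filtering early shrinks the data pipeline.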

In controlled tests, the chip cut visual processing times to about 150 milliseconds, roughly matching human perceptual speed and four times faster than leading conventional algorithms. That improvement is significant: even a half-second delay in existing systems can mean a car travels many metres before it reacts, or a robot responds too slowly in a dynamic environment.
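To put those latencies in terms of distance, here is a rough back-of-the-envelope calculation. The 80 km/h speed is an assumption chosen for illustration and does not come from the tests; the figures cover only the distance travelled during visual processing, not braking or actuation.

```python
def distance_during_latency(speed_kmh, latency_s):
    """Metres covered at constant speed while the vision system is still processing."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then multiply by seconds


SPEED_KMH = 80  # assumed vehicle speed, not a figure from the article

for label, latency in [("half-second delay", 0.5), ("150 ms processing", 0.15)]:
    print(f"{label}: {distance_during_latency(SPEED_KMH, latency):.1f} m")
# half-second delay: 11.1 m
# 150 ms processing: 3.3 m
```

Under that assumption, the difference is roughly eight metres of travel before the system has even finished interpreting the scene.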

Beyond speed, the system also demonstrated marked performance gains in real-world tasks. In vehicle trials, perception and motion analyses improved by more than 200 %, while drone navigation decisions became notably faster. A robotic arm equipped with the chip improved its success rate in grasping fast-moving objects by more than 700 %.

Unlike many AI vision approaches that depend heavily on software running on general-purpose processors, this approach emphasises dedicated neuromorphic hardware. By doing so, it avoids the computational complexity that slows down traditional computer vision stacks, making it especially attractive for time-sensitive applications such as collision avoidance and dynamic obstacle tracking.

The research team’s next step is scaling the technology from prototype to production-grade chips that could be integrated directly into autonomous cars and industrial robots. If successful, this could help close the gap between humanlike perceptual speeds and machines’ real-world reaction times, making autonomous systems safer and more responsive.