MIT researchers have created a speech-to-reality system that lets users speak an object into existence, turning natural language into 3D designs and robotic assembly that fabricates stools, shelves, and more in minutes.

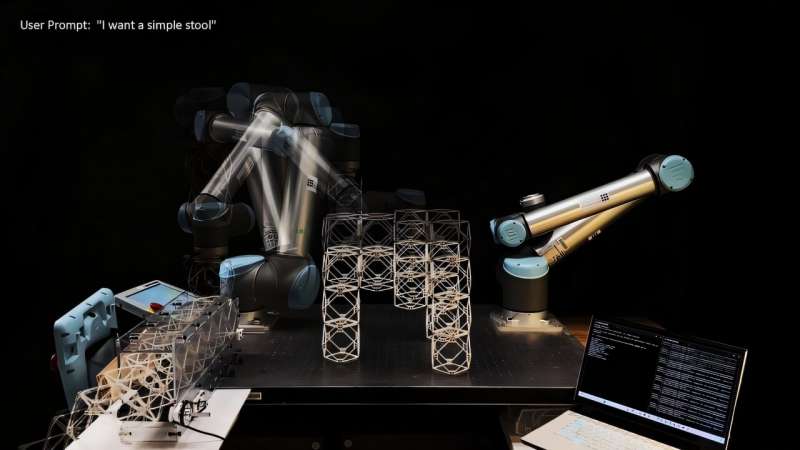

A new MIT system is pushing fabrication toward a future where objects materialize on demand with no CAD skills, no robot programming, and no long 3D-printing waits. In tests, researchers demonstrated a speech-to-reality workflow where a user can simply say, “I want a simple stool,” and a robotic arm assembles one in minutes from modular components.

Developed at MIT’s Center for Bits and Atoms, the platform fuses natural-language processing, 3D generative AI, and automated robotic assembly into a single loop. The goal: turn everyday speech into manufacturable geometry that a robot can immediately build. Early demonstrations include stools, shelves, side tables, chairs, and even playful forms like a blocky dog figure.

The system starts by converting spoken input into text and parsing intent through a large language model. A 3D generative model then outputs a mesh representation of the described object. Next, a voxelization engine decomposes the mesh into modular units that can be physically assembled. A geometric-reasoning layer refines the design, enforcing real-world constraints such as load paths, overhang limits, module count, and connectivity. Finally, a planning algorithm determines a collision-free assembly sequence and generates motion paths for a tabletop robotic arm.
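To make the last two stages more concrete, here is a minimal Python sketch of a connectivity check and a bottom-up assembly ordering over a voxel grid. It is an illustration only, not the MIT team's implementation. The grid representation, the function names `is_connected` and `assembly_order`, and the rule that a module may be placed once it rests on the ground or touches an already-placed module are all assumptions made for this example. Load paths, overhang limits, and collision-free arm motion are not modeled.

```python
from collections import deque

# Hypothetical representation: each module is one grid cell (x, y, z), z = height.
Voxel = tuple[int, int, int]

# The six face-adjacent directions in which two modules can connect.
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def is_connected(voxels: set[Voxel]) -> bool:
    """Return True if every module is reachable from every other via shared faces."""
    if not voxels:
        return True
    start = next(iter(voxels))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over face-adjacent modules
        x, y, z = queue.popleft()
        for dx, dy, dz in FACE_NEIGHBORS:
            neighbor = (x + dx, y + dy, z + dz)
            if neighbor in voxels and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(voxels)


def assembly_order(voxels: set[Voxel]) -> list[Voxel]:
    """Order modules so each one sits on the ground (z == 0) or touches a placed module."""
    remaining = sorted(voxels, key=lambda v: (v[2], v[0], v[1]))  # lowest layer first
    placed: set[Voxel] = set()
    order: list[Voxel] = []
    while remaining:
        for v in remaining:
            touches_placed = any(
                (v[0] + dx, v[1] + dy, v[2] + dz) in placed
                for dx, dy, dz in FACE_NEIGHBORS
            )
            if v[2] == 0 or touches_placed:
                placed.add(v)
                order.append(v)
                remaining.remove(v)
                break  # restart the scan from the lowest remaining module
        else:
            raise ValueError("some modules cannot be reached from the ground")
    return order


# A toy stool: four two-module legs at the corners of a 3x3 seat slab.
legs = {(x, y, z) for x in (0, 2) for y in (0, 2) for z in (0, 1)}
seat = {(x, y, 2) for x in range(3) for y in range(3)}
stool = legs | seat

if __name__ == "__main__":
    print("connected:", is_connected(stool))
    for step, module in enumerate(assembly_order(stool), start=1):
        print(step, module)
```

In this toy model the stool is built layer by layer: the four leg bases first, then the upper leg modules, then the seat. A real planner would also have to check that the arm can reach each placement without colliding with modules already in place.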

The entire pipeline, from spoken command to completed object, can take under five minutes, which is dramatically faster than 3D printing an object of similar scale. Researchers say the system is an accessibility breakthrough as well. Instead of learning modeling software or robotic scripting, users rely on natural speech. Because objects are built from reusable modules, the workflow also promises near-zero-waste fabrication: a stool can be disassembled and remade into a shelf or lamp within minutes.

The team plans to reinforce the load capacity of furniture by replacing current magnetic connectors with stronger mechanical joints. They’re also exploring multi-robot assembly pipelines and mobile robots capable of building large-scale structures using the same voxel-based framework. Upcoming versions may combine voice with gesture input and augmented-reality guidance, bringing the interface closer to sci-fi “replicator” interaction models. Ultimately, the researchers envision a future where physical objects are as fluid and reconfigurable as digital files, generated and regenerated on demand.