Humanoid robots struggle with new tasks. A new approach could help them handle objects reliably in homes, factories, and service settings.

Humanoid Robots Learning to Handle Objects

Humanoid robots could handle many tasks done by people. These tasks include household chores like cleaning, organizing, and cooking, as well as moving items or assembling products. To perform these tasks on their own, humanoid robots need to manipulate objects in different situations. Most current machine learning models for robot manipulation work well in environments similar to their training but struggle in new situations.

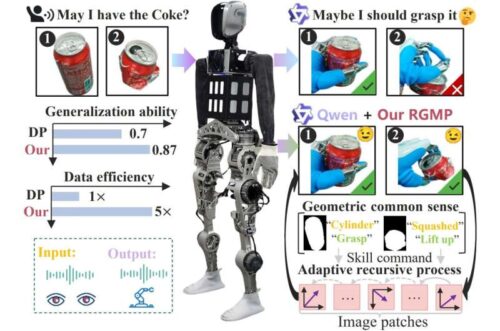

Researchers at Wuhan University created RGMP (recurrent geometric prior multimodal policy), a framework to improve humanoid robots’ object manipulation. RGMP could help robots grasp more objects and complete more tasks reliably.

The machine learning framework combines geometric-semantic reasoning with visuomotor control for humanoid robots. It aims to make robots more adaptable, so that they can draw on contextual information to manipulate objects reliably across settings even when trained on small datasets.

The framework has two main components: a geometric prior skill selector (GSS) and an adaptive recursive Gaussian network (ARGN). The GSS integrates geometric priors into a vision-language model to help the robot select skills based on an object's shape and position. The ARGN supports motion synthesis by recursively modeling spatial relationships between the robot and the objects it interacts with. Together, these components let the robot carry out tasks from only a few training demonstrations.
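To make the two-stage idea concrete, here is a toy sketch in Python. It is not the researchers' implementation: a hand-written rule stands in for the GSS and a simple iterative step toward the object stands in for the ARGN. Every name, threshold, and skill label in it (`ObjectObservation`, `select_skill`, `synthesize_motion`, `reach`, `gain`) is a hypothetical chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ObjectObservation:
    """Hypothetical summary of what the robot sees."""
    shape: str        # e.g. "cylinder", "flat", "box"
    position: tuple   # (x, y, z) in metres, relative to the robot


def select_skill(obs, reach=0.6):
    """Stand-in for skill selection: choose a skill from shape and position.

    Assumption: fixed rules replace the vision-language model
    described in the article.
    """
    distance = math.dist((0.0, 0.0, 0.0), obs.position)
    if distance > reach:
        return "approach"      # object is too far away to grasp
    if obs.shape == "cylinder":
        return "side_grasp"
    if obs.shape == "flat":
        return "pinch"
    return "top_grasp"


def synthesize_motion(gripper, target, steps=3, gain=0.5):
    """Stand-in for motion synthesis: repeatedly re-evaluate the
    gripper-to-object offset and move a fraction of the way along it.

    Assumption: a plain proportional step replaces the learned network.
    """
    path = []
    for _ in range(steps):
        offset = tuple(t - g for g, t in zip(gripper, target))
        gripper = tuple(g + gain * o for g, o in zip(gripper, offset))
        path.append(gripper)
    return path


cup = ObjectObservation(shape="cylinder", position=(0.3, 0.0, 0.2))
skill = select_skill(cup)
path = synthesize_motion((0.0, 0.0, 0.0), cup.position)
print(skill, path[-1])
```

The sketch only shows the division of labor: first decide what to do from the object's geometry, then generate motion from the changing spatial relationship between gripper and object.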

The researchers tested the framework on a humanoid robot and a dual-arm desktop robot. The robots were able to manipulate a variety of objects in most scenarios. The framework also generalized well, achieving high success rates and better data efficiency than state-of-the-art models.

This approach could support automation in tasks where robots need to adapt to new environments without additional training. Potential applications include household chores, service delivery, and manual manufacturing processes. Future work will focus on improving generalization across tasks and enabling robots to infer action trajectories for new objects with minimal human input, reducing the need for teaching in dynamic environments.