High-performance computing gets a power-efficient boost as analog operations inside memory arrays cut data transfer overhead and energy consumption.

Energy efficiency in high-performance computing is increasingly constrained by the need to transfer large volumes of data between memory and processors, which drives up both power consumption and latency. Conventional digital architectures struggle to maintain speed and energy efficiency for large-scale, complex calculations.

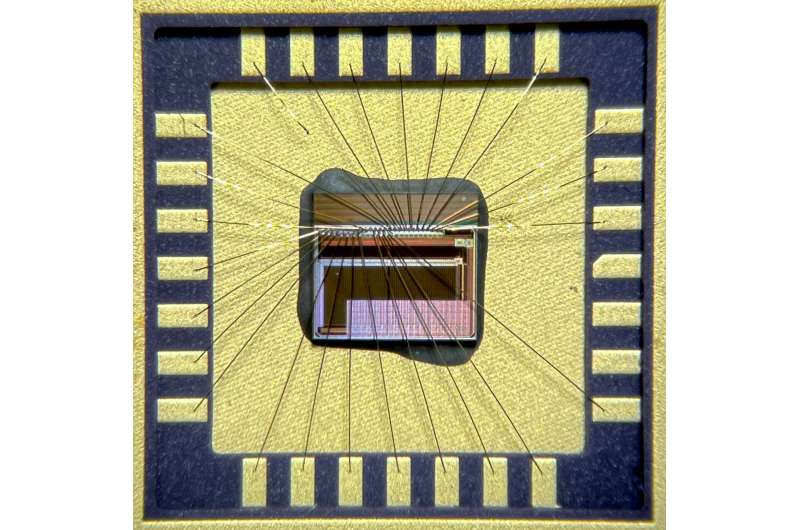

Researchers at the Politecnico di Milano have developed an analog in-memory computing chip that performs computations directly within memory arrays. The device demonstrates high accuracy while significantly reducing energy use and processing time compared with standard digital systems.

The chip integrates two 64×64 arrays of SRAM cells combined with programmable resistors to encode different resistance levels. Analog operations are conducted within the memory structure using operational amplifiers and analog-to-digital converters, eliminating the need to move data to an external processor. This architecture allows rapid solution of linear and non-linear equations while minimizing power consumption and silicon footprint.
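To make the idea of computing inside the array concrete, here is a minimal Python sketch of an idealized resistive array performing a matrix-vector multiplication. It assumes the textbook model in which each cell contributes a current equal to its conductance times the applied voltage (Ohm's law) and the currents on a shared line add up (Kirchhoff's current law). The function names, the five conductance levels, and the 100 µS maximum conductance are illustrative assumptions, not figures from the Politecnico di Milano chip, and the model ignores the operational amplifiers, converters, wire resistance, and noise.

```python
# Idealized model of analog matrix-vector multiplication in a resistive array.
# All names and parameter values are illustrative assumptions, not the
# published design of the chip described in the article.

def quantize(value, levels, max_value):
    """Snap a value in [0, max_value] to the nearest of `levels` evenly spaced steps."""
    step = max_value / (levels - 1)
    clamped = min(max(value, 0.0), max_value)
    return round(clamped / step) * step


def program_array(weights, levels=5, g_max=1e-4):
    """Map weights in [0, 1] to quantized conductances in siemens.

    `levels` stands in for the finite number of resistance levels a
    programmable resistor can hold (assumed value).
    """
    return [[quantize(w * g_max, levels, g_max) for w in row] for row in weights]


def crossbar_mvm(conductances, voltages):
    """Return the summed current per row: I_i = sum_j G_ij * V_j."""
    return [sum(g * v for g, v in zip(row, voltages)) for row in conductances]


# A 2x2 example; the chip in the article uses 64x64 arrays.
weights = [[0.0, 0.5], [1.0, 0.25]]
G = program_array(weights)
currents = crossbar_mvm(G, [0.2, 0.1])  # input voltages in volts
print(currents)  # row currents in amperes
```

In this model the multiply-accumulate happens in a single physical step, because every cell conducts at once, which is why no data needs to leave the array until the result is read out.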

Key features of the chip include:

- Fully integrated analog in-memory computing architecture

- Two 64×64 SRAM-based arrays with programmable resistive memory

- CMOS-compatible fabrication for silicon integration

- Analog processing via operational amplifiers and analog-to-digital converters

- Reduced power consumption and computing latency

- Compact footprint suitable for high-performance applications

Professor Daniele Ielmini, who led the research, says, “The integrated chip demonstrates the feasibility on an industrial scale of a revolutionary concept such as analog computation in memory. We are already working on putting this innovation into use in real-world applications to reduce the energy costs of computation, especially in the field of artificial intelligence.”