The FPGA combines HBM with DDR5 and LPDDR5 support, enabling memory bandwidth and efficiency for AI, networking, video processing, and test equipment applications.

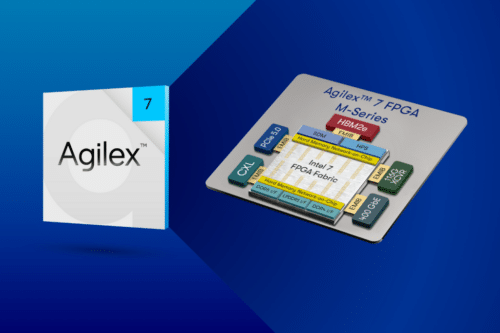

Altera Corporation has announced the production release of its Agilex 7 FPGA M-Series, the industry’s first high-performance FPGA to combine integrated high bandwidth memory (HBM) with support for DDR5 and LPDDR5 technologies. With more than 3.8 million logic elements, the M-Series is designed for applications that demand high performance and memory throughput, such as AI, cloud data centers, next-generation firewalls, 5G infrastructure, and 8K broadcast systems.

As workloads from AI, cloud computing, and video streaming continue to grow, so does the need for greater memory bandwidth, capacity, and energy efficiency. The Agilex 7 FPGA M-Series addresses these needs with a high-density logic fabric, fast memory interfaces, and reduced latency—all helping to eliminate bottlenecks and improve system performance.

A key innovation is its hardened memory Network-on-Chip (NoC) interface, which enables up to 1 TBps of memory bandwidth using in-package HBM2E and integrated DDR5/LPDDR5 memory controllers. Built on the second-generation Hyperflex architecture, the M-Series delivers over 2x the fabric performance-per-watt compared to other 7nm FPGAs.

The FPGA M-Series is used in several performance-focused applications. In data centers, it processes generative AI models using memory bandwidth and FPGA fabric. In networking, it offers memory buffers and data paths for firewalls and network appliances. For broadcasting, it transfers data between image sensors and FPGA logic to support 8K video. In test and measurement, it is used to develop 800 GbE systems with Ethernet testing capabilities.

Thomas Sohmers, CTO & Founder of Positron, said: “Agilex 7 M-series enables Positron to utilize a memory-optimized architecture, achieving over 93% bandwidth utilization—far surpassing the typical 10-30% seen in GPU-based systems. This advancement allows us to deliver 3.5 times better performance per dollar and 3.5 times greater power efficiency compared to leading GPUs when running LLM inference workloads such as the Llama3 family and MOE-based reasoning models.”

For more information, click here.