The AI processor delivers inferences at ~1ms, with latency up to 100x lower than other solutions, making it suitable for robotics and industrial automation

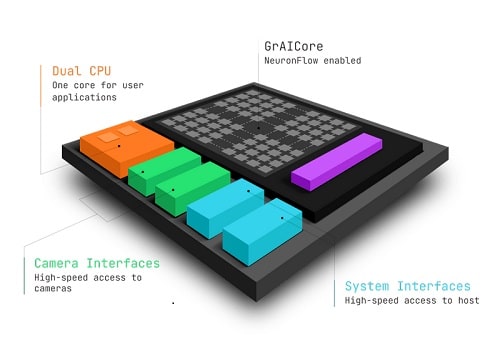

GrAI Matter Labs, a developer of brain-inspired ultra-low latency computing, has introduced the GrAI VIP (Vision Inference Processor), a full-stack AI system-on-chip (SoC) platform, to partners and customers.

The GrAI VIP platform will drive a significant step up in responsiveness for visual inference in robotics, industrial automation, AR/VR and surveillance products and markets.

The NeuronFlow event-based dataflow compute technology in GrAI VIP helps deliver ResNet-50 inferences at ~1ms and enables industry-leading inference latency, up to 100x lower than other solutions.

“GrAI VIP will deliver significant performance improvements to industrial automation and revolutionise systems such as pick and place robots, cobots and warehouse robots,” said Ingolf Held, CEO of GrAI Matter Labs.

“The vision market AI inferencing is the most active segment of the AI chip market,” said Michael Azoff, Chief Analyst, Kisaco Research. “It exploits sparsity (spatial and temporal) in the input data.”

By processing only the relevant information, the SoC optimises energy use and maximises efficiency, saving time, money and vital natural resources.
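To make the idea of temporal sparsity concrete, here is a minimal Python sketch of change-driven processing between two video frames. It is purely illustrative and is not GrAI Matter Labs' NeuronFlow implementation: the function names (`temporal_events`, `skipped_fraction`), the threshold value and the tiny frames are all assumptions made for this example.

```python
from typing import List, Tuple

Frame = List[List[int]]
Event = Tuple[int, int, int]  # (row, column, new pixel value)


def temporal_events(prev: Frame, curr: Frame, threshold: int = 0) -> List[Event]:
    """Emit one event per pixel whose value changed by more than `threshold`.

    Hypothetical helper: unchanged pixels produce no event, so downstream
    work is only needed where the input actually changed.
    """
    events: List[Event] = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            if abs(new - old) > threshold:
                events.append((r, c, new))
    return events


def skipped_fraction(events: List[Event], frame: Frame) -> float:
    """Fraction of pixels that generated no event and could be skipped."""
    total = sum(len(row) for row in frame)
    return 1.0 - len(events) / total


# Two made-up 3x4 frames: one pixel changes a lot, one changes slightly.
frame_a = [[10, 10, 10, 10],
           [10, 10, 10, 10],
           [10, 10, 10, 10]]
frame_b = [[10, 10, 10, 10],
           [10, 50, 10, 10],
           [10, 10, 10, 12]]

events = temporal_events(frame_a, frame_b, threshold=5)
print(events)                             # only the large change is reported
print(skipped_fraction(events, frame_b))  # share of pixels left untouched
```

In this toy case a single pixel out of twelve crosses the threshold, so most of the frame generates no work. Real event-based hardware applies the same principle inside the network rather than only at the input, which this sketch does not attempt to model.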

AI application developers looking for lightning-fast responses for their edge algorithms can now get early access to the GrAI VIP Vision Inference Processor platform and innovate game-changing products in industrial automation, robotics and more.