In disaster zones, every second counts. What if a robot could map hazardous spaces in real time, converting hundreds of images into an accurate 3D map almost instantly?

Robots navigating disaster zones, such as partially collapsed mines, must quickly map their surroundings and pinpoint their own position in order to find survivors safely. Existing machine-learning systems can perform this task using only onboard cameras, but they typically handle only a small number of images at a time. In real emergencies, robots must cover large areas and process thousands of images rapidly.

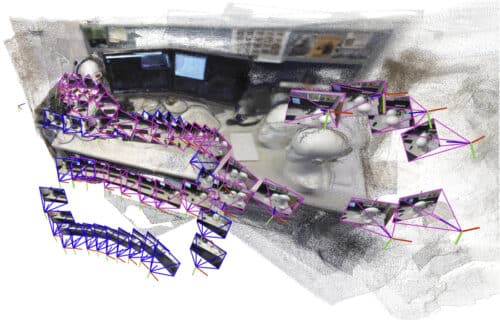

Researchers at MIT created a system to overcome this limitation. It generates small 3D submaps and stitches them together to form a complete map while continuously tracking the robot’s position. Unlike previous methods, it does not require calibrated cameras or expert tuning, which makes it faster, simpler to set up, and easier to scale. Flexible mathematical transformations correct small distortions in each submap so that neighboring submaps align accurately, keeping errors under five centimeters.
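To make the stitching step concrete, here is a minimal sketch of aligning one submap to another with a transformation that can absorb small distortions. It is an illustration under stated assumptions, not the researchers' method: the article does not specify which family of transformations is used, so this example swaps in a plain least-squares 3D affine fit, and the function names `fit_affine_3d` and `apply_affine` are hypothetical. It also assumes the two submaps share a set of matched 3D points.

```python
import numpy as np


def fit_affine_3d(src, dst):
    """Least-squares 3D affine map (A, t) such that dst ~= src @ A.T + t.

    Assumption: src and dst are (N, 3) arrays of matched points seen in
    both submaps, with N >= 4 and the points not all in one plane.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous coordinates: append a column of ones to carry the offset.
    src_h = np.hstack([src, np.ones((src.shape[0], 1))])
    # Solve src_h @ M ~= dst for the 4x3 matrix M in the least-squares sense.
    M, *_ = np.linalg.lstsq(src_h, dst, rcond=None)
    A = M[:3].T  # 3x3 linear part (rotation, scale, shear)
    t = M[3]     # translation
    return A, t


def apply_affine(points, A, t):
    """Map (N, 3) points from the source submap into the target submap."""
    return np.asarray(points, dtype=float) @ A.T + t


# Synthetic check: distort a point cloud with a known affine map, then recover it.
rng = np.random.default_rng(0)
shared_points = rng.normal(size=(50, 3))
A_true = np.eye(3) + 0.05 * rng.normal(size=(3, 3))  # mild, made-up distortion
t_true = np.array([1.0, -2.0, 0.5])
observed = shared_points @ A_true.T + t_true

A_est, t_est = fit_affine_3d(shared_points, observed)
aligned = apply_affine(shared_points, A_est, t_est)
max_residual = np.abs(aligned - observed).max()
```

A rigid transform (rotation plus translation) could not undo the stretch and shear in `A_true`; a more flexible model can, which is the intuition behind correcting per-submap distortions before merging.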

The system produces high-quality 3D reconstructions in near real time, even from short cell phone videos, and enables robots to localize themselves in complex, crowded environments such as office corridors or industrial warehouses. Beyond search-and-rescue, it could also support VR and extended reality applications or help warehouse robots navigate more efficiently.

Traditional robotic navigation relies on simultaneous localization and mapping (SLAM), in which a robot builds a map of its environment while tracking its own position within that map. Conventional SLAM methods often fail in complex scenes or require pre-calibrated cameras. Machine-learning models improve performance but remain limited in the number of images they can handle, restricting their usefulness for large-scale or time-critical tasks.

By breaking the scene into submaps and aligning them with learning-based techniques, the system efficiently processes large numbers of images, generating accurate maps and camera positions in real time. Future work will focus on improving reliability in extremely complex scenes and deploying the system on robots operating in challenging, real-world environments.
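The idea of breaking a long image stream into submaps can be sketched as a simple windowing step. This is a hedged illustration only: the article does not say how large each submap is or whether consecutive submaps share frames, so the `submap_size` and `overlap` parameters and the function name `split_into_submaps` are assumptions made for the example. The overlap is included on the assumption that shared frames give neighboring submaps common content to align on.

```python
def split_into_submaps(num_frames, submap_size, overlap):
    """Group frame indices 0..num_frames-1 into overlapping windows.

    Each window holds at most `submap_size` frames, and consecutive windows
    share `overlap` frames (hypothetical parameters, not from the article).
    """
    if overlap < 0 or submap_size <= overlap:
        raise ValueError("need 0 <= overlap < submap_size")
    step = submap_size - overlap
    windows = []
    start = 0
    while start < num_frames:
        end = min(start + submap_size, num_frames)
        windows.append(list(range(start, end)))
        if end == num_frames:
            break  # the last frame is covered; stop before emitting a redundant window
        start += step
    return windows


# Ten frames, four frames per submap, one shared frame between neighbors.
example = split_into_submaps(10, 4, 1)
```

Because each window is bounded in size, a model that can only handle a small batch of images at once can still be applied to an arbitrarily long sequence, one window at a time.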