Humanoid robots have long been unable to move the way people do. That may be changing. Read on to learn more!

Humanoid robots struggle with one issue: they cannot move as humans do. Most robots today rely on small controllers that handle only one behaviour at a time, like walking, climbing, or crouching. Each of these needs careful tuning and custom reward engineering, making them hard to scale and nearly impossible to generalise. This slows progress for robotics companies, factory automation teams, research labs, and anyone trying to build robots that can adapt to the physical world.

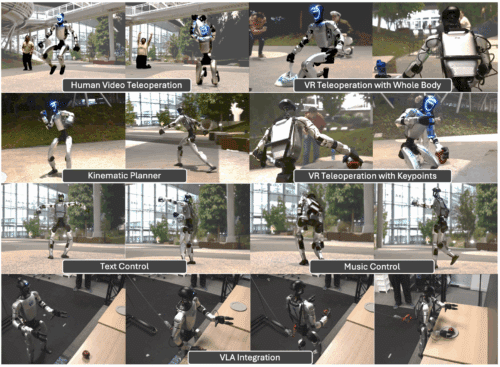

NVIDIA researchers built a system called SONIC to address this. It shows that whole-body control can emerge from scaling model size, training data, and compute. Instead of creating many narrow controllers, SONIC uses motion tracking as a single foundation task. The idea is simple: if a robot can track and mimic large amounts of human motion, it gains a base capability that can be reused for different actions. This matters for teams building general-purpose humanoids, teleoperation systems that need real-time tracking, and developers who want robots to respond to high-level input without hand-building behaviour libraries.
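To make "motion tracking as a task" concrete, here is a minimal Python sketch of a tracking reward of the kind often used in motion-imitation work: the closer the robot's pose is to the reference pose, the closer the reward is to 1. This is an illustration only, not SONIC's actual reward. The function name `tracking_reward`, the `sharpness` parameter, and the joint angles are all hypothetical.

```python
import math


def tracking_reward(robot_pose, reference_pose, sharpness=2.0):
    """Return a reward in (0, 1]; 1.0 means the robot matches the reference exactly.

    Poses are plain lists of joint angles in radians (an assumption made for
    this sketch; a real system would track far richer state).
    """
    if len(robot_pose) != len(reference_pose):
        raise ValueError("poses must have the same number of joints")
    # Sum of squared per-joint differences between robot and reference.
    squared_error = sum((r - t) ** 2 for r, t in zip(robot_pose, reference_pose))
    # Exponential shaping: zero error gives 1.0, larger error decays toward 0.
    return math.exp(-sharpness * squared_error)


# Hypothetical joint angles for one frame of human motion and two robot attempts.
reference = [0.10, -0.40, 0.80]
close_attempt = [0.12, -0.38, 0.79]
poor_attempt = [0.60, 0.10, 0.20]

print(tracking_reward(reference, reference))      # perfect match
print(tracking_reward(close_attempt, reference))  # small error, reward near 1
print(tracking_reward(poor_attempt, reference))   # large error, much lower reward
```

The point of the sketch is that one objective covers every behaviour. Walking, crouching, and crawling differ only in the reference motion, not in the reward, so no per-behaviour reward engineering is needed.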

The researchers trained SONIC on more than 100 million motion-capture frames, about 700 hours of humans running, bending, crawling, turning, and recovering from stumbles. They scaled the model from roughly one million parameters to more than forty million, using around 9,000 GPU-hours of training. A kinematic planner connects the system to real-world use cases, allowing the same controller to follow a VR operator, react to video keypoints, or respond to high-level cues such as text or music.

For robotics engineers, the benefit is a single policy that generalises instead of breaking when the scenario changes. It can handle unseen movements, adapt to different body shapes, and transfer from simulation to a physical humanoid without extra training. This reduces the cost and time required to get a robot operational and expands what one robot can do without redesigning its control stack.

The work does not solve every remaining challenge: robots still depend on perception, physical interaction, and hardware. What it does is shift the control problem from handcrafted rules to scalable, data-driven learning. SONIC’s contribution may be showing that whole-body humanoid control behaves like other AI domains, where scaling is not just helpful but transformative.