Columbia University researchers created a system that captures both visual and touch data. The tactile sensor can be added to robot hands, improving their ability to handle objects.

To help humans with household chores and other daily tasks, robots must be able to handle objects of diverse compositions, shapes, and sizes. In recent years, progress in robotic manipulation has been driven by the development of more advanced cameras and tactile sensors.

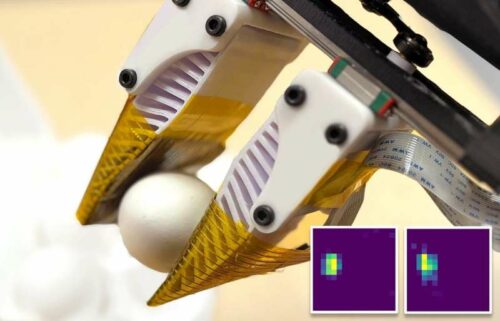

Researchers at Columbia University have introduced a new system that simultaneously captures visual and tactile information. Their innovative tactile sensor could be integrated into robotic grippers and hands, enhancing the manipulation capabilities of robots with different body structures.

As part of their research, the scientists aimed to create a multi-modal sensing system that captures two kinds of data: visual data, used to estimate the position and geometry of objects within the system's view, and tactile information, including contact location, force, and local interaction patterns.
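To make the two data streams concrete, here is a minimal Python sketch of how a single multi-modal observation might be represented. It is an illustration only, not the researchers' implementation: the names `TactileFrame` and `Observation`, the grid layout of the pressure readings, and the pressure-weighted centroid used for contact location are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class TactileFrame:
    """Hypothetical reading from one sheet-like sensor pad: a 2D grid of pressures."""
    pressure: List[List[float]]

    def total_force(self) -> float:
        # Sum of all cell readings; real units would depend on calibration (assumed).
        return sum(sum(row) for row in self.pressure)

    def contact_location(self, threshold: float = 0.0) -> Optional[Tuple[float, float]]:
        """Pressure-weighted centroid (row, col) of cells above threshold, or None."""
        weighted_row = weighted_col = total = 0.0
        for r, row in enumerate(self.pressure):
            for c, p in enumerate(row):
                if p > threshold:
                    weighted_row += r * p
                    weighted_col += c * p
                    total += p
        if total == 0.0:
            return None  # no cell exceeded the threshold, so no contact detected
        return (weighted_row / total, weighted_col / total)


@dataclass
class Observation:
    """Hypothetical container pairing visual and tactile data for one time step."""
    points: List[Point3D]        # visual point cloud (object position and geometry)
    tactile: List[TactileFrame]  # one frame per sensor pad

    def in_contact(self, threshold: float = 0.0) -> bool:
        # True if any pad reports pressure above the threshold.
        return any(f.contact_location(threshold) is not None for f in self.tactile)
```

For example, `TactileFrame([[0.0, 0.0], [0.0, 2.0]])` reports a total force of 2.0 and a contact location at grid cell (1.0, 1.0). A learned policy would consume many such observations over time; that part is outside the scope of this sketch.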

The integrated system they developed, known as 3D-ViTac, combines both visual and tactile sensing with learning, offering robots enhanced capabilities to handle real-world manipulation tasks more effectively.

The team tested their sensor and the end-to-end imitation learning framework they developed in a series of experiments on a real robotic system. Specifically, they attached two sheet-like sensing devices to each fin-like hand of the robotic gripper.

The team then evaluated the gripper's performance on four challenging manipulation tasks: steaming an egg, placing grapes on a plate, grasping a hex key, and serving a sandwich. The results of these initial tests were highly promising: the sensor significantly improved the gripper's ability to complete all four tasks.

The sensor developed by the research team could soon be deployed on other robotic systems and tested in a broader variety of object manipulation tasks that demand high precision. The team plans to create simulation methods and integration strategies to simplify the application and testing of their sensor on additional robots.

Reference: Binghao Huang et al., 3D-ViTac: Learning Fine-Grained Manipulation with Visuo-Tactile Sensing, arXiv (2024). DOI: 10.48550/arxiv.2410.24091