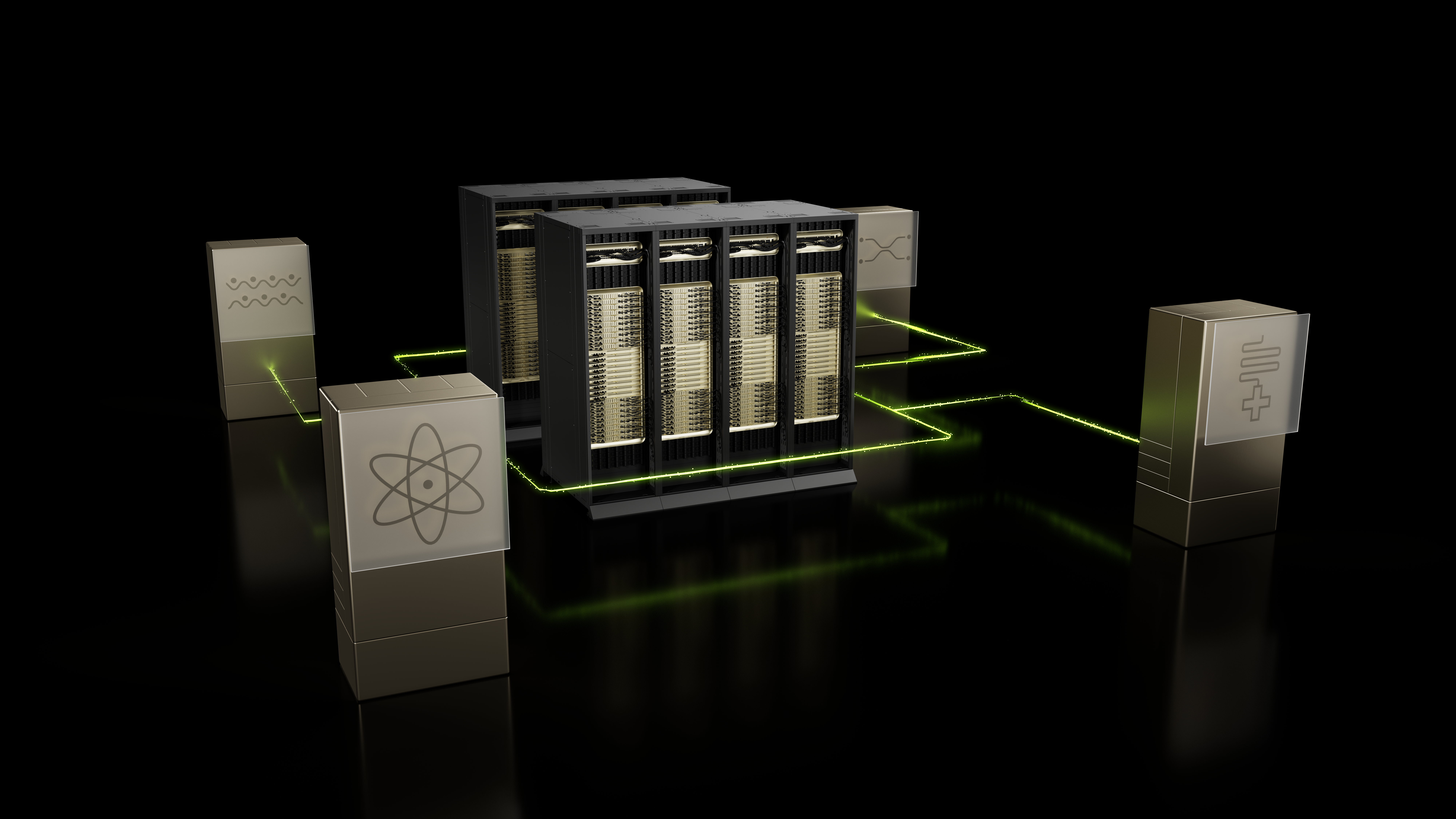

Parallel GPU processing enables faster decoding of qubit errors, more efficient circuit layouts, and realistic simulations of quantum devices.

Quantum computing progress is constrained by three major technical challenges: noise in quantum hardware (error correction), the optimisation of circuits (circuit compilation), and the simulation of qubit designs. All three are computationally intensive tasks that conventional computing methods struggle to process efficiently.

Supercomputing is now being applied to these areas. With supercomputers and parallel GPU processing, researchers are scaling error correction, compilation, and simulation workloads beyond what conventional methods allow. NVIDIA’s CUDA-X libraries provide the base infrastructure for this work.

Error correction is one example. A single physical qubit is fragile, so thousands must be combined into logical qubits, and the errors detected across them must be decoded rapidly. This decoding is mathematically complex and must run in real time. By using GPU acceleration, researchers have doubled the speed and accuracy of these decoders. Some teams have gone further with AI models that learn the heavy computations in advance, cutting decoding times by up to fifty times.
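To make the idea of decoding concrete, here is a minimal sketch using the three-bit repetition code, the simplest textbook error-correcting code. It is a toy illustration only, not one of the GPU-accelerated or AI-based decoders described above, and the names `encode`, `measure_syndrome` and `decode` are hypothetical. Parity checks between neighbouring bits produce a syndrome, and the decoder looks up which single bit flip most likely caused it.

```python
# Toy 3-bit repetition code: logical 0 -> 000, logical 1 -> 111.
# Two parity checks compare neighbouring bits:
#   s0 = b0 xor b1, s1 = b1 xor b2.

# Lookup table from syndrome to the single bit assumed to have flipped.
SYNDROME_TO_FLIP = {
    (0, 0): None,  # no check fired: assume no error
    (1, 0): 0,     # only the first check fired: bit 0 flipped
    (1, 1): 1,     # both checks fired: bit 1 flipped
    (0, 1): 2,     # only the second check fired: bit 2 flipped
}


def encode(logical_bit):
    """Copy one logical bit onto three physical bits."""
    return [logical_bit] * 3


def measure_syndrome(bits):
    """Return the two parity checks without reading the data directly."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def decode(bits):
    """Undo the most likely single bit flip, then read the logical bit."""
    corrected = list(bits)
    flip = SYNDROME_TO_FLIP[measure_syndrome(corrected)]
    if flip is not None:
        corrected[flip] ^= 1
    return corrected[0]


if __name__ == "__main__":
    for logical_bit in (0, 1):
        for position in range(3):
            noisy = encode(logical_bit)
            noisy[position] ^= 1  # inject one bit-flip error
            print(logical_bit, noisy, measure_syndrome(noisy), decode(noisy))
```

A lookup table works here because there are only four possible syndromes. Codes built from thousands of qubits have far too many syndromes to tabulate, which is one reason decoding at scale becomes a heavy computation.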

Circuit compilation is another hurdle. Every algorithm must be matched to the physical qubits on a chip. This mapping is a graph problem whose complexity grows quickly with the number of qubits. With GPU-powered methods that build layouts from repeated patterns, compilation speeds have improved by as much as six hundred times, making it easier to run circuits on today’s devices.
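The mapping step can be sketched as a small graph search. The example below is a brute-force illustration under simple assumptions, not the pattern-based GPU method mentioned above: the device is modelled as a list of connected qubit pairs, the circuit as a list of two-qubit gates, and a layout is valid when every gate lands on a connected pair. The function names, the four-qubit line device and the example circuits are all hypothetical.

```python
from itertools import permutations


def layout_is_valid(gates, layout, coupling):
    """True if every two-qubit gate acts on physically connected qubits."""
    return all(
        frozenset((layout[a], layout[b])) in coupling
        for a, b in gates
    )


def find_layout(gates, num_logical, physical_qubits, coupling_edges):
    """Try every assignment of logical to physical qubits.

    Returns the first valid layout as {logical: physical}, or None.
    """
    coupling = {frozenset(edge) for edge in coupling_edges}
    for assignment in permutations(physical_qubits, num_logical):
        layout = dict(enumerate(assignment))
        if layout_is_valid(gates, layout, coupling):
            return layout
    return None


# Hypothetical device: four qubits connected in a line, 0-1-2-3.
LINE_DEVICE = [(0, 1), (1, 2), (2, 3)]
# Hypothetical circuit: logical qubit 0 interacts with qubits 1 and 2.
STAR_GATES = [(0, 1), (0, 2)]
# Hypothetical circuit in which all three logical qubits interact pairwise.
TRIANGLE_GATES = [(0, 1), (1, 2), (0, 2)]

if __name__ == "__main__":
    print(find_layout(STAR_GATES, 3, range(4), LINE_DEVICE))
    print(find_layout(TRIANGLE_GATES, 3, range(4), LINE_DEVICE))
```

The star-shaped circuit fits once logical qubit 0 sits in the middle of three neighbouring physical qubits, while the triangle cannot fit on a line at all and a real compiler would have to insert extra operations. The number of candidate assignments grows factorially with qubit count, so exhaustive search like this stops being practical almost immediately.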

Simulation is the third piece. To design better qubits, scientists need to model how they behave with other components like resonators. GPU-accelerated simulation toolkits now allow researchers to run models thousands of times faster than before, making large-scale studies practical.
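As a rough sense of what such a model involves, the sketch below simulates one qubit exchanging a single excitation with one resonator. It is a textbook two-state toy in plain Python, not one of the GPU-accelerated toolkits referred to above. It assumes units where ħ = 1, a coupling strength `g`, a qubit-resonator `detuning`, and no losses; the function names are hypothetical. The numerical result is checked against the closed-form Rabi formula for the same problem.

```python
import math


def simulate_exchange(g, detuning, t_final, steps=2000):
    """Integrate i d(psi)/dt = H psi with fourth-order Runge-Kutta.

    Basis: |qubit excited, 0 photons>, |qubit ground, 1 photon>.
    H = [[detuning/2, g], [g, -detuning/2]] with hbar = 1.
    Returns the probability that the excitation is in the resonator.
    """
    def derivative(state):
        a, b = state
        return (-1j * (0.5 * detuning * a + g * b),
                -1j * (g * a - 0.5 * detuning * b))

    def advance(state, slope, h):
        return (state[0] + h * slope[0], state[1] + h * slope[1])

    state = (1 + 0j, 0j)  # start with the qubit excited, resonator empty
    dt = t_final / steps
    for _ in range(steps):
        k1 = derivative(state)
        k2 = derivative(advance(state, k1, dt / 2))
        k3 = derivative(advance(state, k2, dt / 2))
        k4 = derivative(advance(state, k3, dt))
        state = tuple(
            s + dt / 6 * (a + 2 * b + 2 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)
        )
    return abs(state[1]) ** 2


def analytic_exchange(g, detuning, t):
    """Closed-form Rabi formula for the same two-state problem."""
    omega = math.sqrt(g ** 2 + (detuning / 2) ** 2)
    return (g / omega) ** 2 * math.sin(omega * t) ** 2


if __name__ == "__main__":
    g, t = 1.0, math.pi / 2  # on resonance, full transfer at t = pi / (2 g)
    print(simulate_exchange(g, 0.0, t), analytic_exchange(g, 0.0, t))
```

This toy tracks only two complex amplitudes. Realistic device models include many energy levels, several coupled components and loss, and the state space grows multiplicatively with each addition, which is where large-scale parallel hardware becomes useful.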