A new two-layer language-model algorithm is helping robots break down tasks, refine actions on the fly, and execute movements with more human-like reasoning, showing strong gains in both simulated household chores and real-world robotic arm tests.

A research team at NYU Tandon has unveiled Brain Body-LLM, a two-part language-model algorithm designed to help robots plan tasks and execute movements with more human-like reasoning. The work, published in Advanced Robotics Research, positions LLM-driven control as a potential bridge between high-level task planning and low-level motor execution, an area where traditional robotics often struggles.

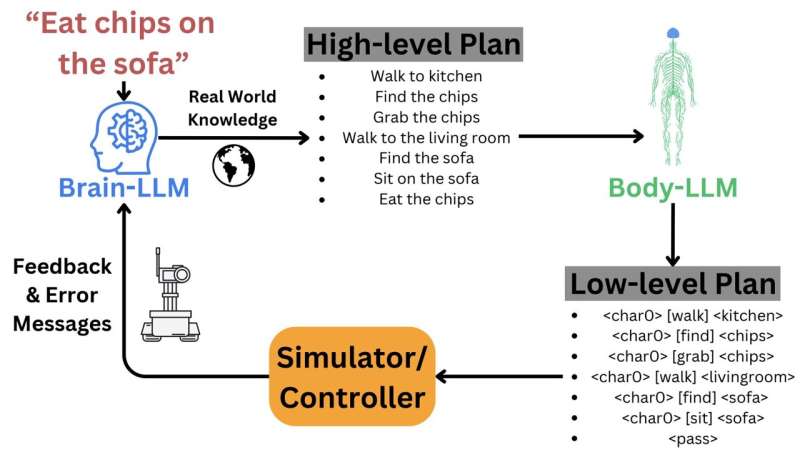

At the core of the system are two coordinated models. The Brain-LLM interprets a user’s instruction and decomposes it into sequential, actionable steps. The Body-LLM then maps each step to robot-compatible commands, flagging gaps when no direct action is available.
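The article does not publish code, but the division of labor between the two models can be illustrated with a minimal Python sketch. Both "LLMs" here are deterministic stubs. The command vocabulary (`walk_to`, `grab`, `put_in`, and so on), the function names `brain_llm`, `body_llm`, and `plan`, and the toast task are all hypothetical and do not come from the NYU Tandon work. The sketch only shows the shape of the pipeline: one layer produces ordered steps, and the other maps each step to an allowed command or flags a gap.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical fixed command vocabulary. The article does not list the
# actual commands exposed to Brain Body-LLM; these names are illustrative.
ROBOT_COMMANDS = {"walk_to", "grab", "open", "close", "put_in", "switch_on"}


@dataclass
class MappedStep:
    text: str                      # the Brain-LLM's step, as text
    command: Optional[str] = None  # matched robot command, if any
    args: Tuple[str, ...] = ()     # arguments passed to that command
    gap: bool = False              # True when no allowed command fits


def brain_llm(instruction: str) -> List[str]:
    """Stand-in for the Brain-LLM: decompose an instruction into ordered steps.
    A real system would prompt a language model; this stub uses a lookup table."""
    canned_plans = {
        "make toast": [
            "walk_to kitchen",
            "grab bread",
            "put_in bread toaster",
            "switch_on toaster",
            "butter bread",
        ],
    }
    return canned_plans.get(instruction, [])


def body_llm(step: str) -> MappedStep:
    """Stand-in for the Body-LLM: map one step onto the fixed command set,
    flagging a gap rather than inventing an unsupported action."""
    verb, *args = step.split()
    if verb in ROBOT_COMMANDS:
        return MappedStep(step, verb, tuple(args))
    return MappedStep(step, gap=True)


def plan(instruction: str) -> List[MappedStep]:
    """Run the two layers in sequence: decompose, then map each step."""
    return [body_llm(step) for step in brain_llm(instruction)]


if __name__ == "__main__":
    for s in plan("make toast"):
        status = "GAP" if s.gap else f"{s.command}{s.args}"
        print(f"{s.text!r:26} -> {status}")
```

Running it marks the final step, `butter bread`, as a gap because no allowed command covers it. In a full system that flag would presumably prompt the planner to revise its plan, though the article does not say exactly how gaps are resolved.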

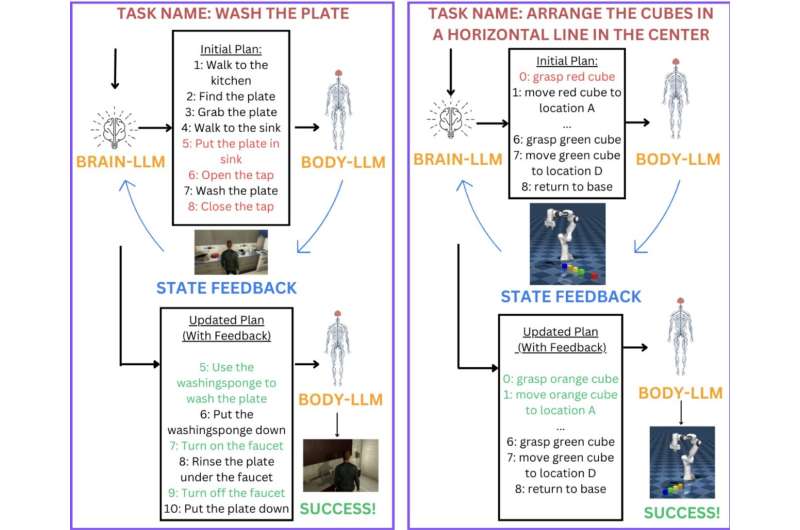

A closed-loop feedback layer monitors execution in real time, feeding environmental cues and error signals back into the system to refine subsequent actions. The team tested the setup in both virtual and physical environments: in the VirtualHome simulator, a digital robot completed household tasks, while the physical evaluation used a Franka Research 3 robotic arm. Across scenarios, Brain Body-LLM improved task-completion rates by 17% over comparable systems and achieved an average success rate of 84% in real-world trials. The algorithm also iteratively refined its plans as conditions changed, a capability that remains one of the more challenging requirements in embodied AI.
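The closed-loop behavior can be sketched as an execute, observe, revise cycle. The sketch below assumes a simple interface: an `execute` function that returns a success flag plus a text message from the environment, and a `replan` function standing in for the planner's revision step. The names `run_closed_loop`, `ToyKitchen`, and `toy_replan` are hypothetical, and the retry limit is an arbitrary illustrative choice rather than a parameter reported in the paper.

```python
from typing import Callable, List, Optional, Tuple

Feedback = Tuple[bool, str]  # (succeeded?, message from the environment)


def run_closed_loop(
    steps: List[str],
    execute: Callable[[str], Feedback],
    replan: Callable[[str, str], List[str]],
    max_retries: int = 2,
) -> Tuple[bool, List[Tuple[str, bool, str]]]:
    """Execute steps in order. When a step fails, pass the step and the
    error message to `replan` and put the revised steps at the front of the
    queue. Give up after more than `max_retries` consecutive failures, or
    when the planner offers no revision."""
    queue = list(steps)
    log: List[Tuple[str, bool, str]] = []
    consecutive_failures = 0
    while queue:
        step = queue.pop(0)
        ok, message = execute(step)
        log.append((step, ok, message))
        if ok:
            consecutive_failures = 0
            continue
        consecutive_failures += 1
        revised = replan(step, message)
        if consecutive_failures > max_retries or not revised:
            return False, log
        queue = revised + queue
    return True, log


class ToyKitchen:
    """Hypothetical simulated environment: the cup sits in a closed cabinet."""

    def __init__(self) -> None:
        self.cabinet_open = False
        self.holding: Optional[str] = None

    def execute(self, step: str) -> Feedback:
        verb, obj = step.split(maxsplit=1)
        if verb == "open" and obj == "cabinet":
            self.cabinet_open = True
            return True, "cabinet is open"
        if verb == "grab" and obj == "cup":
            if not self.cabinet_open:
                return False, "cup not reachable: cabinet closed"
            self.holding = obj
            return True, "holding cup"
        return False, f"unsupported step: {step}"


def toy_replan(step: str, message: str) -> List[str]:
    """Stand-in for a planner revision: react to the error text."""
    if "cabinet closed" in message:
        return ["open cabinet", step]
    return []  # no recovery strategy known


if __name__ == "__main__":
    kitchen = ToyKitchen()
    ok, log = run_closed_loop(["grab cup"], kitchen.execute, toy_replan)
    for entry in log:
        print(entry)
```

Here the first attempt to grab the cup fails, the error message drives a revised plan that opens the cabinet first, and the retried grab succeeds. Bounding consecutive failures keeps a confused planner from looping indefinitely, which matters when the planner is a language model.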

Researchers say the model’s strength lies in its dynamic interplay between planning and control, mirroring how humans update actions based on context. By giving the LLM controlled access to a fixed set of robot commands, the team aimed to evaluate how well general-purpose language models can reason about physical tasks without risking uncontrolled behavior during early testing.

The group now plans to extend the framework with additional sensory inputs, including 3D vision, depth data, and joint-level control, in an effort to further shrink the gap between robotic and human motor intelligence. The work adds momentum to a growing shift in robotics, where LLM-based agentic systems are increasingly being used to coordinate complex task sequences and integrate external tools. If successful, the approach could support more adaptable home robots, safer industrial manipulators, and more intuitive human-robot collaboration systems: applications that demand flexible reasoning as much as mechanical precision.