Sending data at very high speeds degrades the signal and consumes too much power. A new receiver keeps signals strong, uses less energy, and runs faster for data centers.

28nm CMOS

High-speed data systems face two major problems. First, PAM-8 signals above 100 Gb/s are prone to losing signal quality. Second, high-speed signals weaken as they travel through the channel, and receivers often consume too much power compensating for that loss. Conventional designs struggle to keep signals strong while staying power-efficient.

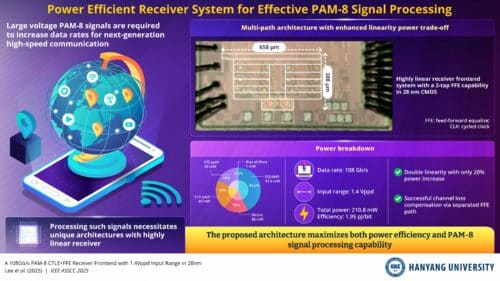

To solve these issues, Hanyang University researchers proposed a 108 Gb/s PAM-8 receiver frontend system in 28nm CMOS. The system is designed for data centers, AI clusters, networking equipment, the metaverse, and supercomputers, where faster, energy-efficient data transfer is essential.

Effective PAM-8 signal processing requires a highly linear receiver. Because PAM-8 packs eight amplitude levels into the signal swing, large-swing inputs demand strong linearity to preserve the signal-to-noise ratio. The research team, led by M.S./Ph.D. student Sangwan Lee and Associate Professor Jaeduk Han, addressed this with a multi-path architecture that improves the linearity-power trade-off.
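To make the linearity requirement concrete, the following minimal sketch shows what PAM-8 signaling implies at 108 Gb/s: three bits per symbol, so a 36 GBd symbol rate, with eight closely spaced amplitude levels. The Gray-coded bit mapping and the normalized amplitudes are assumptions chosen for illustration; the article does not say which mapping the receiver uses.

```python
import math

BIT_RATE = 108e9   # bits per second, from the article
LEVELS = 8         # PAM-8 uses eight amplitude levels

bits_per_symbol = int(math.log2(LEVELS))   # 3 bits per symbol
symbol_rate = BIT_RATE / bits_per_symbol   # 36e9 symbols per second


def gray(n):
    """Binary-reflected Gray code of n (assumed mapping, not from the article)."""
    return n ^ (n >> 1)


# Lookup from a 3-bit Gray code word to a level index 0..7.
GRAY_TO_LEVEL = {gray(i): i for i in range(LEVELS)}


def level_to_amplitude(idx):
    """Equally spaced amplitudes normalized to the range [-1, 1]."""
    return (2 * idx - (LEVELS - 1)) / (LEVELS - 1)


def encode(bits):
    """Turn a bit list (length a multiple of 3) into PAM-8 amplitudes."""
    amplitudes = []
    for i in range(0, len(bits), bits_per_symbol):
        word = (bits[i] << 2) | (bits[i + 1] << 1) | bits[i + 2]
        amplitudes.append(level_to_amplitude(GRAY_TO_LEVEL[word]))
    return amplitudes


def decode(amplitudes):
    """Pick the nearest level for each amplitude and recover the bits."""
    bits = []
    for a in amplitudes:
        idx = round((a * (LEVELS - 1) + (LEVELS - 1)) / 2)
        idx = min(max(idx, 0), LEVELS - 1)   # clamp to valid levels
        word = gray(idx)
        bits.extend([(word >> 2) & 1, (word >> 1) & 1, word & 1])
    return bits
```

With eight levels, adjacent amplitudes sit only one seventh of the full swing apart, so any compression in the frontend eats directly into the decision margin.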

The multi-path architecture divides the signal path so each path handles a sub-range of the total dynamic range. This reduces the number of required slicers or samplers, lowering the load on the final stage and doubling linearity with only a 20% increase in power.
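The idea of splitting the dynamic range can be illustrated with a toy sub-ranging slicer. This is not the researchers' circuit, whose details the article does not give; it is a generic two-step model, with hypothetical function names, that shows how a coarse decision followed by a shifted fine decision needs fewer comparisons and presents a smaller swing to the final stage.

```python
LEVELS = 8


def flat_slice(v):
    """Single-path slicer: compare against all 7 thresholds between 8 levels."""
    thresholds = [(2 * k - 6) / 7 for k in range(LEVELS - 1)]  # -6/7 ... +6/7
    level = sum(v > t for t in thresholds)
    return level, len(thresholds)


def subrange_slice(v):
    """Toy two-path slicer: coarse half-select, then a fine decision."""
    # Coarse path: one comparator picks the upper or lower half of the range.
    upper = v > 0.0
    # Shift the selected half so it is centered on zero. The residue now
    # spans roughly +/-3/7 instead of +/-1, easing the final stage.
    offset = 4 / 7 if upper else -4 / 7
    residue = v - offset
    # Fine path: three thresholds separate the four levels in the half.
    fine_thresholds = [-2 / 7, 0.0, 2 / 7]
    fine = sum(residue > t for t in fine_thresholds)
    level = (4 if upper else 0) + fine
    return level, 1 + len(fine_thresholds)


# Compare both slicers on the eight ideal PAM-8 amplitudes.
for i in range(LEVELS):
    amplitude = (2 * i - 7) / 7
    print(i, flat_slice(amplitude), subrange_slice(amplitude))
```

In this toy model the flat slicer uses seven comparisons per symbol and the sub-ranged one uses four, while both recover the same level.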

Channel loss is addressed with a separate feed-forward equalizer (FFE) path. Instead of processing large input signals directly, which can cause compression, the FFE calculates compensation values from a small, attenuated signal. This ensures accurate channel loss compensation even at high voltages and high speeds.
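A minimal sketch of the equalization idea, assuming a toy channel in which each symbol leaks a fraction `alpha` into the next one. The channel model, the attenuation factor `atten`, and the tap value are all hypothetical; the article gives no tap weights or channel data. The correction term is computed from an attenuated copy of the received signal and added back to the full-swing main path.

```python
def channel(symbols, alpha):
    """Toy lossy channel: each symbol leaks a fraction alpha into the next."""
    out = []
    prev = 0.0
    for s in symbols:
        out.append(s + alpha * prev)
        prev = s
    return out


def two_tap_ffe(received, alpha, atten=0.25):
    """Two-tap FFE whose correction is derived from an attenuated copy."""
    tap = -alpha / atten   # tap weight scaled to undo the attenuation
    out = []
    prev_small = 0.0       # attenuated previous sample
    for r in received:
        # Main tap passes the signal; second tap cancels the leaked part.
        out.append(r + tap * prev_small)
        prev_small = atten * r
    return out


def worst_error(signal, reference):
    """Largest deviation from the ideal transmitted amplitudes."""
    return max(abs(a - b) for a, b in zip(signal, reference))


# Hypothetical PAM-8 amplitudes normalized to [-1, 1].
symbols = [1.0, -1.0, 3 / 7, -5 / 7, 1.0, -1 / 7]
alpha = 0.3

rx = channel(symbols, alpha)
eq = two_tap_ffe(rx, alpha)

print("worst error without FFE:", worst_error(rx, symbols))
print("worst error with FFE:   ", worst_error(eq, symbols))
```

In this model the residual interference drops from `alpha` to `alpha ** 2` times the symbol amplitude, because the second tap cancels the first-order leak and leaves only a second-order term.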

The receiver frontend system, implemented in 28nm CMOS with a two-tap FFE, achieves 108 Gb/s, an input range of 1.4 Vppd, total power of 210.8 mW, and energy efficiency of 1.95 pJ/bit.
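The reported efficiency follows directly from the power and data rate, as the short check below shows. The level spacing it also prints assumes eight equally spaced levels across the full 1.4 Vppd input range, which is an assumption for illustration rather than a figure from the article.

```python
POWER_W = 210.8e-3        # total power, from the article
BIT_RATE = 108e9          # data rate in bits per second, from the article
INPUT_RANGE_VPPD = 1.4    # differential peak-to-peak input range
LEVELS = 8                # PAM-8

# Energy per bit = power / bit rate, converted from joules to picojoules.
energy_pj_per_bit = POWER_W / BIT_RATE * 1e12

# Assumed: equally spaced levels, so 7 gaps share the full input range.
level_spacing_v = INPUT_RANGE_VPPD / (LEVELS - 1)

print(f"{energy_pj_per_bit:.2f} pJ/bit")
print(f"{level_spacing_v * 1e3:.0f} mV between adjacent levels")
```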

This technology can be applied immediately as a core component in next-generation high-speed data communication infrastructure. It benefits data centers and AI clusters by speeding up server communication for large AI model training and massive dataset processing. It also supports future 800G and 1.6T Ethernet networking equipment and accelerates supercomputers used for advanced scientific research and simulations.