Cut AI inference costs, support larger context windows, and process more tokens: Enfabrica's new system changes how GPUs access memory.

Enfabrica Corporation has announced the launch of its Elastic Memory Fabric System (EMFASYS), a hardware-software platform that the company says cuts AI inference costs by up to 50 percent. The system is designed to help AI providers scale generative and reasoning-driven workloads by offloading data from GPU high-bandwidth memory (HBM) to pooled DRAM while keeping latency predictable.
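The idea of offloading from HBM to a larger DRAM tier can be illustrated with a toy two-tier cache. The sketch below is a hypothetical illustration, not Enfabrica's software: the `TieredKVCache` class, the capacity parameter, and the least-recently-used (LRU) spill policy are all assumptions. A small "fast" tier stands in for GPU HBM, and entries evicted from it are spilled to a larger "slow" tier standing in for pooled DRAM instead of being discarded and recomputed.

```python
from __future__ import annotations

from collections import OrderedDict


class TieredKVCache:
    """Hypothetical two-tier cache: a small fast tier (stand-in for GPU HBM)
    backed by a larger slow tier (stand-in for pooled DRAM).
    Illustration only; not Enfabrica's caching policy or API."""

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast: OrderedDict[str, bytes] = OrderedDict()  # oldest entry first
        self.slow: dict[str, bytes] = {}  # overflow tier, unbounded in this toy
        self.fast_hits = 0
        self.slow_hits = 0

    def put(self, key: str, value: bytes) -> None:
        # New or updated entries land in the fast tier as most recently used.
        self.slow.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._evict()

    def get(self, key: str) -> bytes | None:
        if key in self.fast:
            self.fast_hits += 1
            self.fast.move_to_end(key)
            return self.fast[key]
        if key in self.slow:
            self.slow_hits += 1
            value = self.slow.pop(key)
            self.fast[key] = value  # promote back into the fast tier
            self._evict()
            return value
        return None  # miss in both tiers: caller would have to recompute

    def _evict(self) -> None:
        # Spill least recently used entries to the slow tier instead of dropping them.
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            self.slow[old_key] = old_value
```

For example, with `TieredKVCache(fast_capacity=2)`, inserting keys `"a"`, `"b"`, and `"c"` spills `"a"` to the slow tier; a later `get("a")` is served from the slow tier and promotes `"a"` back, spilling `"b"` in turn. A real system would also have to account for the transfer latency of each slow-tier hit, which is what a caching hierarchy tries to hide.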

Generative AI workloads now require 10 to 100 times more compute per query than earlier LLM deployments, and providers serve billions of inference calls daily. EMFASYS addresses this by balancing token generation across servers and reducing idle GPU cycles, which enables larger context windows and higher token volumes at lower cost.

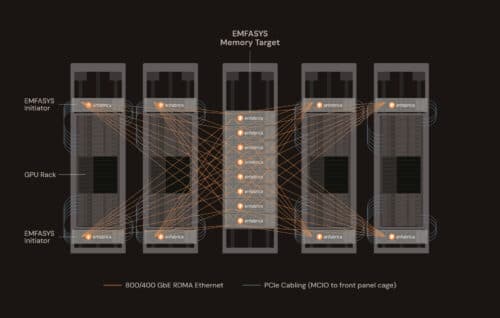

At its core, EMFASYS is powered by Enfabrica's 3.2 terabits per second (Tbps) Accelerated Compute Fabric SuperNIC (ACF-S). The ACF-S connects up to 144 CXL memory lanes to 400/800 Gigabit Ethernet (GbE) ports, supporting shared memory pools of up to 18 terabytes (TB) of CXL DDR5 DRAM per node. The system aggregates memory bandwidth across channels and ports, delivers microsecond-level read access times, and uses a caching hierarchy to hide that latency within inference pipelines.
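A quick back-of-envelope conversion puts these figures in more familiar units. This sketch assumes decimal SI units (1 Tbps = 10^12 bits/s, 1 TB = 10^12 bytes), treats 3.2 Tbps as the usable aggregate rate, and ignores protocol overhead; the announcement does not specify these details.

```python
# Back-of-envelope conversions for the ACF-S figures quoted above.
# Assumptions: decimal SI units, aggregate line rate, no protocol overhead.

FABRIC_TBPS = 3.2  # ACF-S bandwidth, from the announcement
POOL_TB = 18       # maximum CXL DDR5 pool per node, from the announcement

fabric_bytes_per_s = FABRIC_TBPS * 1e12 / 8  # bits -> bytes
ports_800g = FABRIC_TBPS * 1000 / 800        # how many 800 GbE ports fill 3.2 Tbps
ports_400g = FABRIC_TBPS * 1000 / 400        # how many 400 GbE ports fill 3.2 Tbps
full_sweep_s = POOL_TB * 1e12 / fabric_bytes_per_s  # time to stream the whole pool once

print(f"{fabric_bytes_per_s / 1e9:.0f} GB/s")                        # 400 GB/s
print(f"{ports_800g:.0f} x 800 GbE or {ports_400g:.0f} x 400 GbE")   # 4 x 800 GbE or 8 x 400 GbE
print(f"{full_sweep_s:.0f} s to stream the whole pool once")         # 45 s to stream the whole pool once
```

The 45-second figure is only an upper-bound illustration: an inference pipeline reads the slices of the pool it needs (for example, a particular request's cached context), not the whole 18 TB.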

Compared with flash-based inference storage, EMFASYS delivers lower latency, and because it uses DRAM rather than NAND flash, it is not subject to flash's write/erase endurance limits. Cloud operators can deploy parallel “Ethernet memory controllers” populated with pooled DRAM instead of paying to expand GPU HBM and CPU DRAM in every AI server.
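One common way to reason about why a shared pool can be cheaper than per-server memory is statistical multiplexing: per-server provisioning must cover the sum of each server's peak demand, while a shared pool only needs to cover the peak of the combined demand. The announcement does not spell out this argument, and the demand traces below are invented purely for illustration.

```python
# Toy comparison of per-server provisioning vs a shared memory pool.
# The demand numbers are hypothetical, chosen only to illustrate the idea.

# Memory demand (GB) of three hypothetical servers over five time steps.
demand = {
    "server_a": [40, 120, 60, 30, 50],
    "server_b": [110, 30, 40, 100, 20],
    "server_c": [20, 50, 130, 40, 90],
}

# Per-server provisioning: each server must be sized for its own peak.
sum_of_peaks = sum(max(trace) for trace in demand.values())

# Shared pool: capacity only has to cover the peak of total demand per step.
totals = [sum(step) for step in zip(*demand.values())]
peak_of_sum = max(totals)

print(sum_of_peaks, peak_of_sum)  # 360 230
```

Because the servers peak at different times, the pool in this toy example needs 230 GB instead of 360 GB. The sketch ignores the fabric's own latency and bandwidth limits, which determine whether pooled memory is fast enough for a given workload.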

The ACF-S provides multi-port 800 GbE connectivity to GPU servers with, according to Enfabrica, 4x the I/O bandwidth, radix, and multipath resiliency of GPU-attached NICs. It enables zero-copy data placement across 4- or 8-GPU complexes and more than 18 channels of CXL DDR memory. EMFASYS builds on these capabilities with RDMA-over-Ethernet networking, on-chip memory engines, and a remote memory software stack based on InfiniBand Verbs.
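In the InfiniBand Verbs model, a memory owner registers a memory region, which yields a remote key (rkey); a peer holding that key can then issue one-sided RDMA READs that the NIC serves without the owner's CPU handling each request. The sketch below is a toy, in-process model of those semantics under stated assumptions: `MemoryRegion` and `rdma_read` are hypothetical names, not the libibverbs API, and a plain function performs the copy that real hardware would do via DMA.

```python
# Toy, in-process model of one-sided RDMA READ semantics.
# Hypothetical names; NOT the libibverbs API. Real NICs perform this copy via DMA.
import secrets


class MemoryRegion:
    """A 'registered' buffer plus the remote key the owner shares with peers."""

    def __init__(self, size: int):
        self.buf = bytearray(size)
        self.rkey = secrets.randbits(32)  # random key standing in for an rkey


def rdma_read(local: bytearray, local_off: int,
              remote: MemoryRegion, remote_off: int,
              length: int, rkey: int) -> None:
    """Copy `length` bytes from a remote region into a local buffer."""
    if rkey != remote.rkey:
        raise PermissionError("rkey mismatch: access denied")
    if length < 0 or remote_off < 0 or remote_off + length > len(remote.buf):
        raise IndexError("read outside registered region")
    if local_off < 0 or local_off + length > len(local):
        raise IndexError("local buffer too small")
    # A memoryview slice avoids creating an intermediate bytes copy.
    src = memoryview(remote.buf)[remote_off:remote_off + length]
    local[local_off:local_off + length] = src
```

Usage: create `pool = MemoryRegion(64)`, write `b"hello"` into `pool.buf`, then call `rdma_read(dst, 0, pool, 0, 5, pool.rkey)` to fill a local `bytearray`. A wrong key is rejected, mirroring how a real NIC refuses access to memory that was not registered for that peer.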

“AI Inference has a memory bandwidth-scaling problem and a memory margin-stacking problem,” said Rochan Sankar, CEO of Enfabrica. “As inference gets more agentic versus conversational, more retentive versus forgetful, the current ways of scaling memory access won’t hold. We built EMFASYS to create a rack-scale AI memory fabric and solve these challenges. Customers are partnering with us to build a scalable memory movement architecture for their GenAI workloads and drive better token economics.”