Chinese researchers harness probabilistic updates on memristor hardware to slash AI training energy use by orders of magnitude, paving the way for ultra-efficient electronics.

Chinese scientists have unveiled a breakthrough in training energy-hungry AI models on memristor hardware, cutting energy consumption by almost six orders of magnitude compared with conventional GPU-based training. Their approach tackles a long-standing mismatch between the hardware and the training software in memristor-based neural networks, and it could reshape the energy profile of next-generation AI electronics.

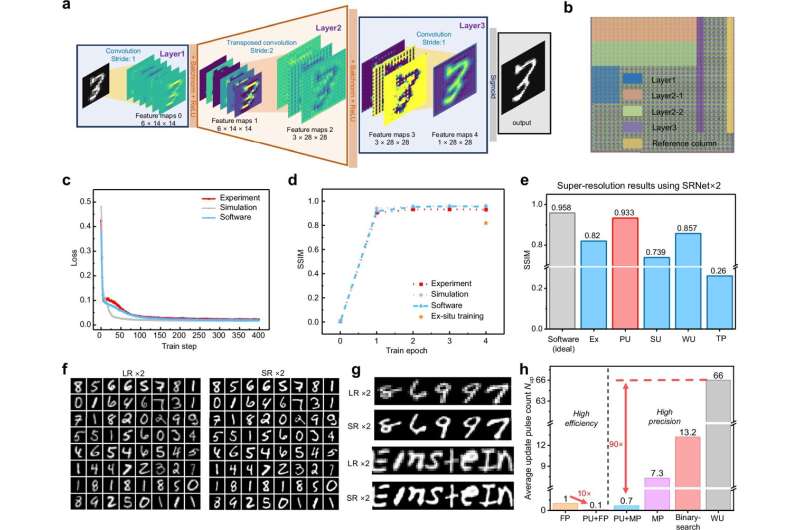

At the core of the advance is an error-aware probabilistic update (EaPU) algorithm that aligns the noisy, unpredictable behavior of memristor devices with the gradient-based weight updates used in neural network training. Traditional backpropagation makes small, precise adjustments to model weights, but memristors (analog devices that combine storage and processing) suffer from write noise and drift that swamp such fine-grained changes. Instead of battling that noise, the EaPU strategy embraces stochasticity: it probabilistically applies larger weight shifts while skipping updates below the device's noise threshold, cutting the number of writes by over 99% and dramatically reducing energy use.
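The general idea of replacing many tiny updates with occasional larger ones can be illustrated with stochastic rounding, a standard technique. The sketch below is not the published EaPU algorithm: it omits the "error-aware" modeling of real device behavior, and the function name `probabilistic_update`, the write size `step`, and the Gaussian write-noise model are hypothetical assumptions. Each ideal gradient-descent change is rounded at random to a whole number of `step`-sized writes, with odds chosen so that the expected change still equals the ideal one. Changes much smaller than `step` are therefore skipped most of the time.

```python
import math
import random


def probabilistic_update(weights, grads, lr, step, rng, write_noise=0.0):
    """Hypothetical threshold-aware stochastic update (not the published EaPU).

    Each ideal change d = -lr * g is rounded stochastically to a multiple of
    `step`, assumed to be the smallest change the device can write reliably
    (i.e. at or above its noise level). Small changes are usually skipped,
    yet the expected applied change still equals d.
    Returns the updated weights and the number of device writes issued.
    """
    updated = []
    writes = 0
    for w, g in zip(weights, grads):
        d = -lr * g                      # ideal gradient-descent change
        scaled = abs(d) / step           # change measured in write steps
        n = math.floor(scaled)           # whole steps are always applied
        if rng.random() < scaled - n:    # round up with prob = remainder
            n += 1
        if n > 0:                        # touch the device only if a step survives
            w += math.copysign(n * step, d)
            if write_noise:              # optional simulated write noise
                w += rng.gauss(0.0, write_noise)
            writes += 1
        updated.append(w)
    return updated, writes


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    weights = [0.0] * len(grads)
    _, writes = probabilistic_update(weights, grads, lr=0.001, step=0.01, rng=rng)
    print(f"writes issued for {writes / len(grads):.1%} of weights")
```

Because the rounding is unbiased, the update still follows the gradient on average. The write count, and with it the energy and wear, falls roughly in proportion to how small typical updates are relative to `step`.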

The team validated the method on an experimental 180 nm memristor array, training networks for image denoising and super-resolution. The results matched or exceeded the quality of conventional training methods while using only a tiny fraction of the energy. Larger networks, including 152-layer ResNets and Vision Transformers, were tested in simulation and showed accuracy gains exceeding 60% on simulated noisy hardware compared with standard approaches.

Beyond energy savings, the reduced update frequency extends device lifetime by roughly 1,000×, easing a key hurdle for commercial memristor systems. EaPU also cuts training energy by roughly 50× relative to prior memristor training schemes and by about 13× relative to the best existing optimized algorithm.
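As a rough consistency check, assume a device's lifetime is set by a fixed write-endurance budget $E$ (a simplification, since real wear also depends on write amplitude and operating conditions). Let $W$ be the number of writes a conventional scheme issues per unit of training and $\rho$ the fractional reduction in writes. Lifetime then scales inversely with the remaining write rate:

$$
\frac{T_{\text{reduced}}}{T_{\text{baseline}}} = \frac{E \,/\, \big((1-\rho)\,W\big)}{E \,/\, W} = \frac{1}{1-\rho},
\qquad
\rho = 0.99 \;\Rightarrow\; 100\times,
\qquad
\rho = 0.999 \;\Rightarrow\; 1000\times .
$$

Under this assumption, a lifetime gain of roughly 1,000× corresponds to eliminating about 99.9% of writes, which is consistent with a reduction of "over 99%".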

The researchers see broad applications for the technique. It could potentially extend to other memory technologies, such as ferroelectric transistors and magnetoresistive RAM, and even to large-scale AI training clusters where energy costs are a strategic concern. If scaled and commercialized, this work could help make sustainable, energy-efficient AI electronics a practical reality.